Personable Robotics – U R Dancing

In this project we explored ways in which music could be translated into robot movements (or dances).

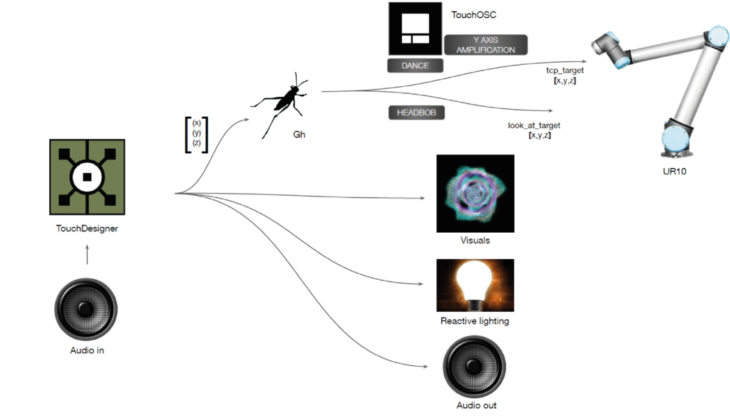

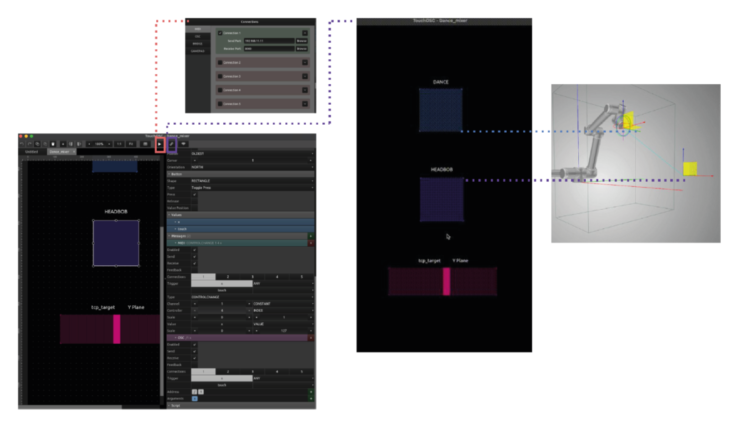

Network architecture

To achieve this, we used TouchDesigner to extract useful information from an input song. We analysed the sound waves of a few different songs and extracted highs, mids, lows, treble, bass and other musical characteristics. Those values were then remapped to target points for the robot within its working area.

Setup 1

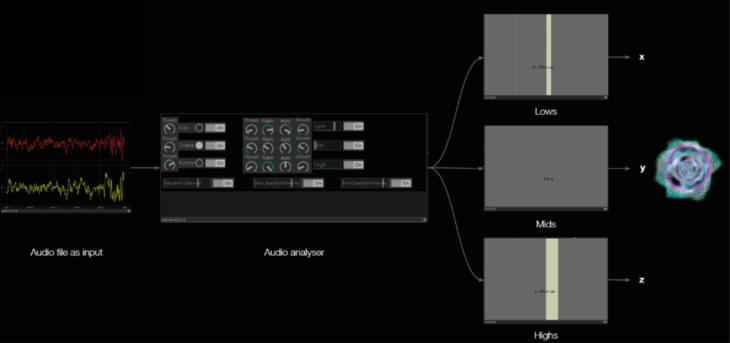

The first method we tried was to isolate the lows, mids and highs with filters and remap them into x, y, z coordinates, which were sent to the robot as target planes.
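The remapping step can be illustrated with a minimal Python sketch. It is not the TouchDesigner network used in the project: the normalised 0 to 1 band levels, the workspace limits in millimetres and the assignment of lows, mids and highs to x, y and z in that order are all assumptions made for illustration.

```python
def remap(value, src_min, src_max, dst_min, dst_max):
    """Linearly map value from [src_min, src_max] to [dst_min, dst_max], clamped."""
    if src_max == src_min:
        raise ValueError("source range must not be empty")
    t = (value - src_min) / (src_max - src_min)
    t = max(0.0, min(1.0, t))  # clamp so targets never leave the working area
    return dst_min + t * (dst_max - dst_min)


# Hypothetical working area of the robot, in millimetres (not from the project).
WORKSPACE = {
    "x": (-300.0, 300.0),
    "y": (200.0, 600.0),
    "z": (100.0, 500.0),
}


def bands_to_target(low, mid, high):
    """Map normalised band levels (0..1) to an (x, y, z) target point."""
    return (
        remap(low, 0.0, 1.0, *WORKSPACE["x"]),
        remap(mid, 0.0, 1.0, *WORKSPACE["y"]),
        remap(high, 0.0, 1.0, *WORKSPACE["z"]),
    )
```

Clamping the normalised value keeps an unexpectedly loud peak from producing a target outside the working area.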

Setup 2

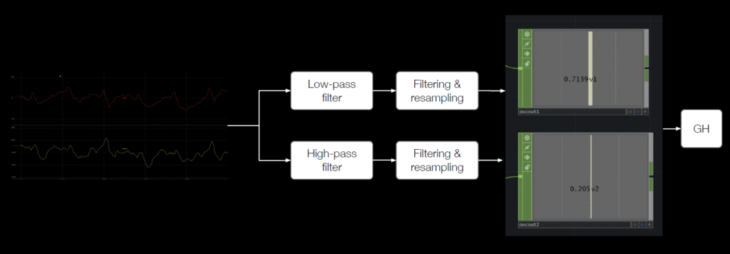

In the second method, similar values from the highs and lows of an audio sample were first sent to Grasshopper, where some post-processing was applied to achieve a more stable motion.
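The exact post-processing done in Grasshopper is not described here. One common way to stabilise a jittery signal is an exponential moving average, sketched below in Python purely as an assumption about what such a step could look like. The class name and the `alpha` parameter are hypothetical.

```python
class ExponentialSmoother:
    """Exponential moving average: low alpha = smoother, slower response."""

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None  # no sample seen yet

    def update(self, sample):
        """Feed one new sample and return the smoothed value."""
        if self.state is None:
            self.state = float(sample)  # start from the first sample
        else:
            # Move a fraction alpha of the way towards the new sample.
            self.state += self.alpha * (sample - self.state)
        return self.state
```

With `alpha = 0.5`, a jump from 0 to 1 is followed over successive frames as 0.5, 0.75, 0.875 and so on, rather than as a single abrupt step.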

Workflow

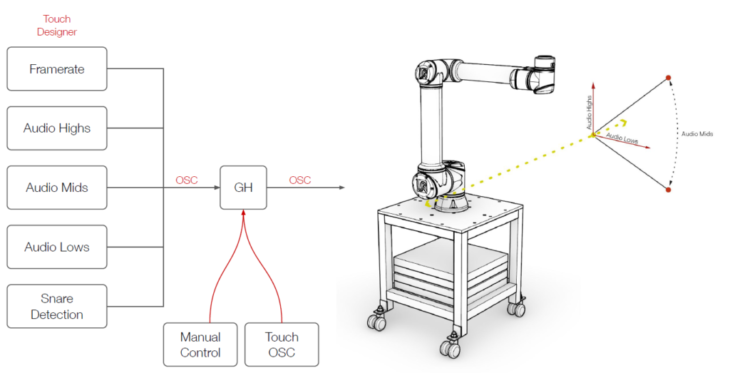

Audio analysis values, as well as manual values from the TouchOSC app, are sent to Grasshopper over the OSC protocol. As the robot sways incrementally from left to right and back, those input values affect the robot’s z and y axes, allowing it to move more intensely during the more active parts of a song and more gently during slow moments.
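For readers unfamiliar with OSC, the Python sketch below shows how a single message carrying float values is laid out on the wire according to the OSC 1.0 format. The address `/audio/low` is a hypothetical example, not an address used in the project, and only float arguments are handled.

```python
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_message(address, *values):
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)  # e.g. ",ff" for two floats
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + payload


# Hypothetical address; the resulting bytes would normally be sent as one UDP datagram.
message = osc_message("/audio/low", 0.5)
```

In practice TouchDesigner and TouchOSC build these packets themselves, so a sketch like this is mainly useful for debugging what arrives on the receiving side.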

These planes are sent to the robot in real time: they are first converted to joint motions, which are then streamed to the robot at a rate of 200 frames per second. All path planning is done at that rate in the backend using IKFast, with the help of a custom script developed by Madeline Gannon.
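At 200 frames per second, each frame covers 5 ms of motion. The Python sketch below shows one simple way a move between two joint configurations could be split into frames at that rate. It uses plain linear interpolation as a stand-in and does not reproduce the project's backend or its inverse kinematics; the function name and arguments are hypothetical.

```python
def interpolate_joints(start, end, duration_s, rate_hz=200):
    """Return one joint frame per tick, ending exactly on the end configuration.

    start and end are lists of joint angles; the start pose itself is not repeated.
    """
    if len(start) != len(end):
        raise ValueError("start and end must have the same number of joints")
    steps = max(1, round(duration_s * rate_hz))  # 200 Hz -> one frame every 5 ms
    frames = []
    for i in range(1, steps + 1):
        t = i / steps  # fraction of the move completed at this frame
        frames.append([s + (e - s) * t for s, e in zip(start, end)])
    return frames
```

For example, a move lasting 0.02 s yields four frames, the last of which equals the target configuration.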

TouchOSC

TouchOSC sliders and buttons give the user manual, real-time “VJay” control to exaggerate or reduce the robot’s movements and to toggle between robot dancing styles.
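A rough Python sketch of how such manual controls could be combined with the audio-driven offsets is given below. The slider is modelled as a gain and the button as an index into a list of styles. The style names and their per-axis weights are invented for illustration and are not the styles used in the project.

```python
from dataclasses import dataclass


@dataclass
class ManualControl:
    gain: float = 1.0  # slider: below 1 damps the motion, above 1 exaggerates it
    style: int = 0     # button: index of the active dancing style


# Hypothetical styles: weights applied to the audio-driven y and z offsets.
STYLES = [
    {"name": "sway", "y": 1.0, "z": 0.5},
    {"name": "bounce", "y": 0.25, "z": 1.0},
]


def apply_manual_control(y_offset, z_offset, control):
    """Scale audio-driven (y, z) offsets by the manual gain and the active style."""
    style = STYLES[control.style % len(STYLES)]  # wrap so repeated presses cycle
    return (
        y_offset * control.gain * style["y"],
        z_offset * control.gain * style["z"],
    )
```

Keeping the manual gain separate from the audio analysis means the operator can exaggerate or calm the dance without changing how the song itself is interpreted.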

U R Dancing is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Masters in Robotics and Advanced Construction in 2021/2022

by:

Students: Alfred Bowles, Grace Boyle, Libish Murugesan, Andrea Najera & Mit Patel

Faculty: Madeline Gannon, Daniil Koshelyuk, Aslinur Taskin