Workshop 2.2 / Multispectral Cognification – Drone Control and Path Planning


Introduction

The aim of this workshop is to have a drone autonomously fly a predetermined path so that its front camera can scan the thermal properties of a 3D-printed heating system.

We use the MAVROS package for ROS for drone control and path planning, together with PX4, an open-source autopilot system.

In this blog post, we outline the methods used to control the drone and optimize its path to get the best camera angles for thermal detection and for 3D photogrammetry reconstruction, both of which are explained further in the other blog posts of this course.

Setup

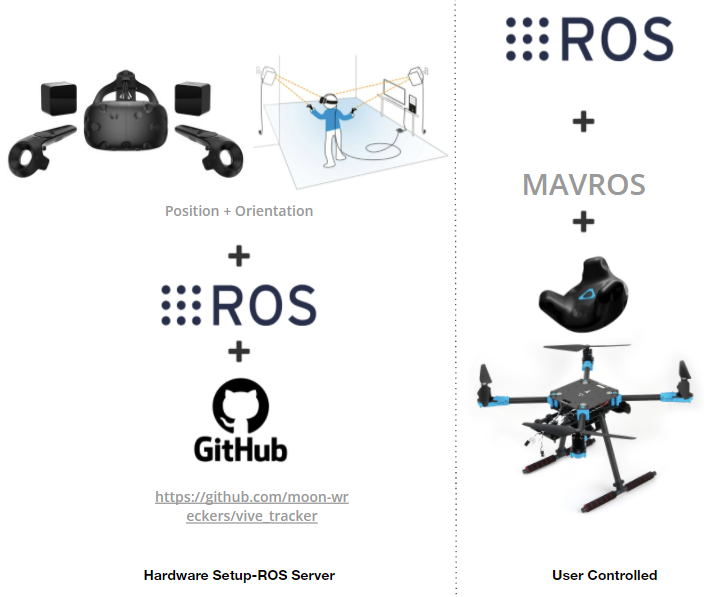

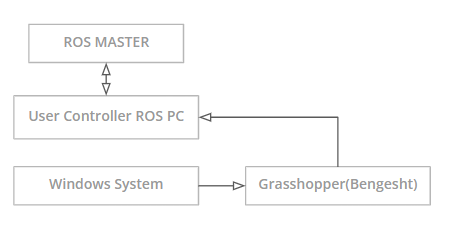

The diagram shows our setup. On the hardware side, we have a Pixhawk drone, a front-facing RGB-D camera, a thermal camera and an HTC Vive tracker for localization.

ROS Dependencies

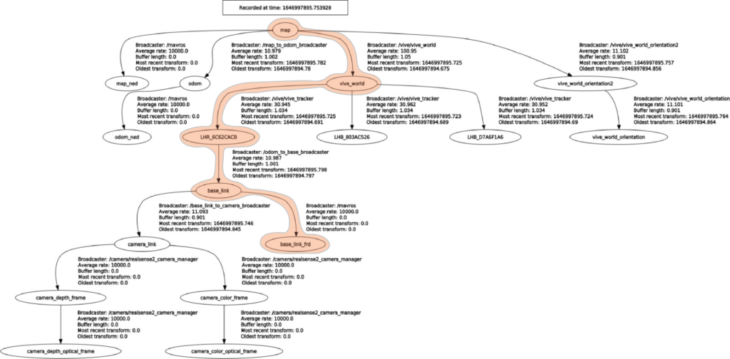

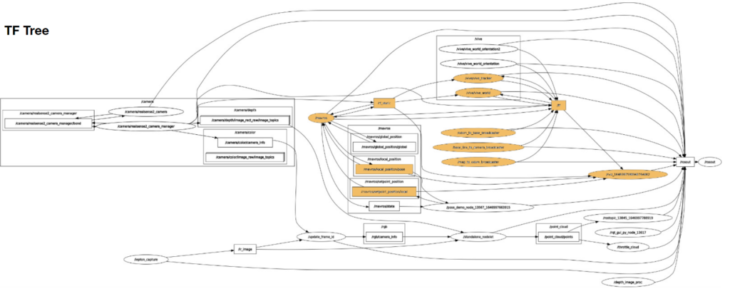

The rqt graph and TF tree outline the connected nodes and the topics in use.

Path Planning

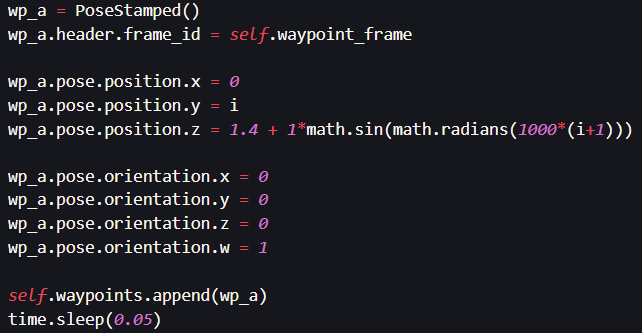

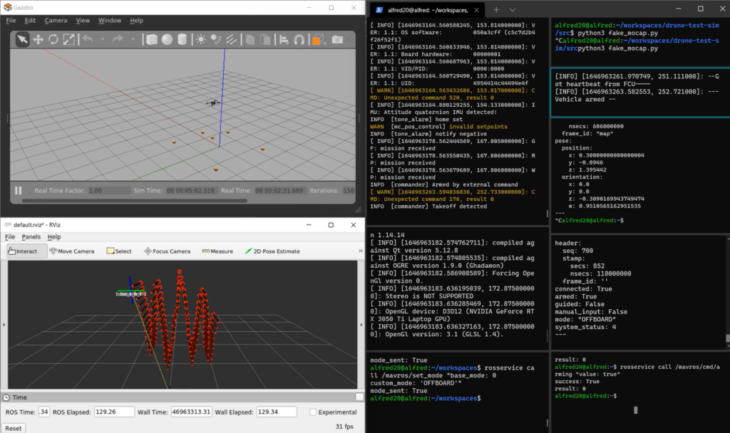

Method 0: Sine wave generated by an equation in the Python script

We start by writing a simple sine wave function and looping through its points to generate waypoints for the drone. The Python code then publishes these path points to the drone localizer.
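A minimal sketch of the waypoint generation step, assuming the wave runs in a vertical plane in front of the subject (x along the face, z oscillating around a base height). The function name `sine_waypoints` and every parameter value are hypothetical placeholders, not the settings used in the workshop; in the real script each tuple would then be wrapped in a pose message and published.

```python
import math


def sine_waypoints(length=2.0, amplitude=0.5, periods=1.0, height=1.5, n_points=20):
    """Return (x, y, z) waypoints sampled along a sine wave.

    Hypothetical parameters: x advances along the face of the subject,
    z oscillates around `height`, and y (distance to the subject) is fixed at 0.
    """
    waypoints = []
    for i in range(n_points):
        t = i / (n_points - 1)                      # normalized progress from 0 to 1
        x = t * length                              # move steadily along the path
        z = height + amplitude * math.sin(2 * math.pi * periods * t)
        waypoints.append((x, 0.0, z))
    return waypoints
```

Sampling by normalized progress `t` keeps the first and last waypoints exactly at the ends of the path, regardless of how many points are requested.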

Method 1: Sine wave with constant yaw

We then wanted more flexibility in how we design the drone trajectory.

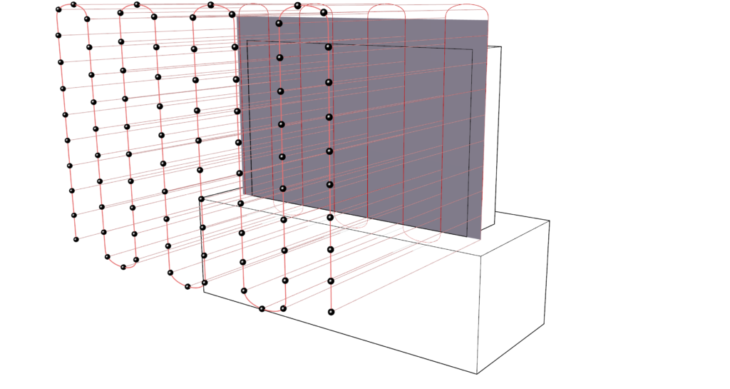

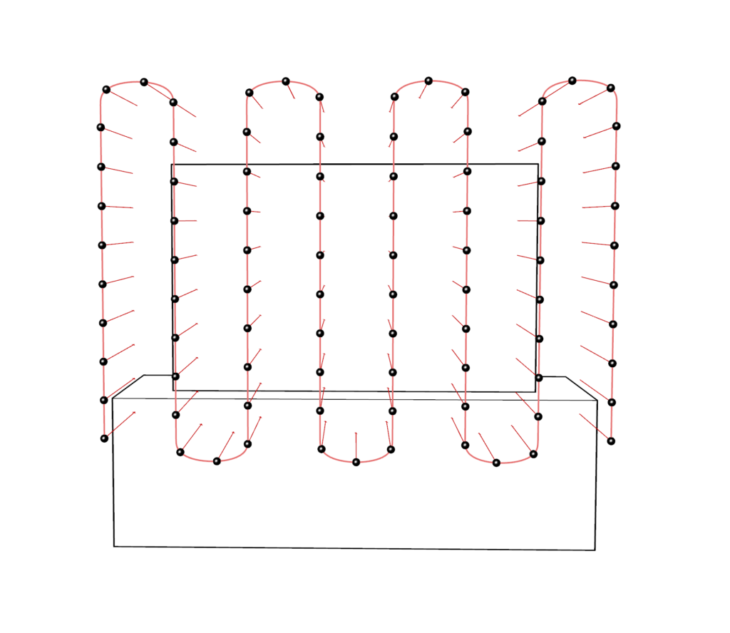

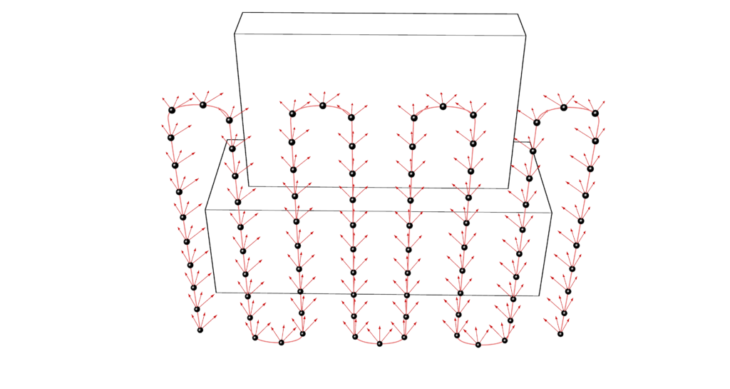

We contour the front face of the subject and create waypoints for the drone, which are exported from Grasshopper as a .txt file and loaded into the Python script.
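The loading step could look roughly like the sketch below. It assumes one point per line, written either as `x,y,z` or with the curly braces Grasshopper often adds around points (`{x, y, z}`); the exact export format used in the workshop is not stated, so the parser and the helper names are hypothetical. Constant yaw means every waypoint shares the same orientation quaternion.

```python
import math


def load_waypoints(lines):
    """Parse (x, y, z) tuples from lines such as '{1.0, 2.0, 3.0}' or '1.0,2.0,3.0'."""
    waypoints = []
    for line in lines:
        line = line.strip().strip("{}")           # drop surrounding whitespace and braces
        if not line:
            continue                               # ignore blank lines
        x, y, z = (float(v) for v in line.split(","))
        waypoints.append((x, y, z))
    return waypoints


def yaw_to_quaternion(yaw):
    """Quaternion (x, y, z, w) for a pure rotation of `yaw` radians about the vertical axis."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def constant_yaw_path(waypoints, yaw):
    """Pair every waypoint with the same orientation so the camera keeps one heading."""
    q = yaw_to_quaternion(yaw)
    return [(p, q) for p in waypoints]
```

Usage, with a hypothetical file name: `with open("waypoints.txt") as f: path = constant_yaw_path(load_waypoints(f), math.pi / 2)`.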

The drone follows waypoints imported from Grasshopper as a .txt file

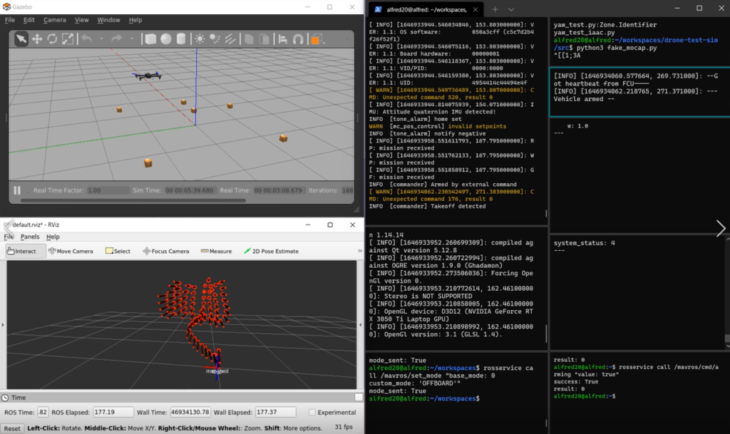

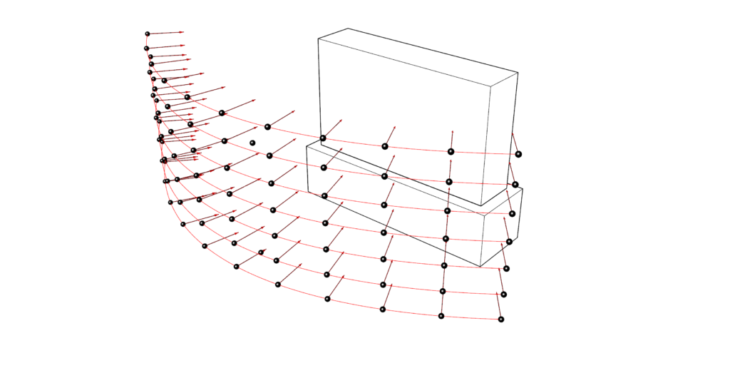

Method 1.5: Spiral path with constant yaw

- Spiral loop

- Replay this loop from 3 different angles
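One possible reading of these two steps is sketched below, assuming the loop is an Archimedean spiral in a vertical plane facing the subject, and that each replay moves that plane around the subject on a horizontal circle while the yaw stays fixed, pointing at the subject, for the whole pass. The function names, the radius, the distance and the angles are all hypothetical.

```python
import math


def spiral_offsets(max_radius=0.8, turns=2.0, n_points=40):
    """Archimedean spiral as (lateral, vertical) offsets from its centre."""
    offsets = []
    for i in range(n_points):
        t = i / (n_points - 1)                     # progress from 0 to 1
        theta = 2 * math.pi * turns * t            # angle swept so far
        r = max_radius * t                         # radius grows linearly with angle
        offsets.append((r * math.cos(theta), r * math.sin(theta)))
    return offsets


def spiral_passes(subject, distance, angles_deg, **spiral_kwargs):
    """Replay the same spiral from several viewing angles around `subject`.

    Returns a list of (yaw, waypoints) pairs, one per angle; yaw is constant within a pass.
    """
    sx, sy, sz = subject
    offsets = spiral_offsets(**spiral_kwargs)
    passes = []
    for a in angles_deg:
        ang = math.radians(a)
        # Centre of this pass, on a horizontal circle of radius `distance` around the subject.
        cx = sx + distance * math.cos(ang)
        cy = sy + distance * math.sin(ang)
        # Horizontal unit vector perpendicular to the line of sight, used as the spiral's lateral axis.
        lx, ly = -math.sin(ang), math.cos(ang)
        # Constant heading for the pass: look back at the subject.
        yaw = math.atan2(sy - cy, sx - cx)
        waypoints = [(cx + u * lx, cy + u * ly, sz + v) for u, v in offsets]
        passes.append((yaw, waypoints))
    return passes
```

For example, `spiral_passes((0.0, 0.0, 1.5), 2.0, [-30, 0, 30])` yields three passes whose spirals start 2 m from the subject.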

Method 2: Sine wave trajectory with side-to-side panning

At each waypoint, we loop through three different orientations by controlling the yaw, giving more perspectives for photogrammetry.
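The panning step can be sketched as expanding each waypoint into several setpoints that share a position but differ in yaw. The offsets of -20°, 0° and +20° are hypothetical, as is the helper name; the yaw is wrapped back into the range [-π, π].

```python
import math


def panning_path(waypoints, base_yaw, pan_offsets_deg=(-20.0, 0.0, 20.0)):
    """Expand each (x, y, z) waypoint into one (position, yaw) setpoint per pan offset.

    The drone holds position while it yaws through the offsets (hypothetical values).
    """
    setpoints = []
    for p in waypoints:
        for off in pan_offsets_deg:
            yaw = base_yaw + math.radians(off)
            yaw = math.atan2(math.sin(yaw), math.cos(yaw))   # wrap into [-pi, pi]
            setpoints.append((p, yaw))
    return setpoints
```

Holding position while yawing keeps the photogrammetry viewpoints tied to known waypoints, at the cost of a longer flight.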

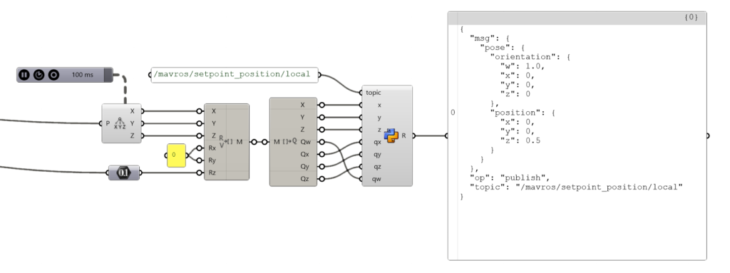

Method 3: Waypoint publishing by WebSocket in Grasshopper using the Bengesht plugin

- Waypoints manually controlled by reading and decomposing the point xyz coordinates

- Target pose published in real time to the topic: mavros/setpoint_position/local
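Assuming the Bengesht plugin talks to a rosbridge server, the WebSocket traffic consists of rosbridge JSON operations: an `advertise` once, then one `publish` per target pose. The sketch below builds those messages in Python for illustration; the `frame_id` value is a hypothetical placeholder, and the ROS 1 style stamp (`secs`/`nsecs`) is an assumption about the ROS version in use.

```python
import json
import time

TOPIC = "/mavros/setpoint_position/local"


def advertise_message():
    """rosbridge 'advertise' operation declaring the topic and its message type."""
    return json.dumps({"op": "advertise", "topic": TOPIC, "type": "geometry_msgs/PoseStamped"})


def pose_setpoint_message(x, y, z, qx=0.0, qy=0.0, qz=0.0, qw=1.0, frame_id="map"):
    """rosbridge 'publish' operation carrying a geometry_msgs/PoseStamped target pose."""
    now = time.time()
    secs = int(now)
    nsecs = int((now - secs) * 1e9)
    msg = {
        "header": {"frame_id": frame_id, "stamp": {"secs": secs, "nsecs": nsecs}},
        "pose": {
            "position": {"x": x, "y": y, "z": z},
            "orientation": {"x": qx, "y": qy, "z": qz, "w": qw},
        },
    }
    return json.dumps({"op": "publish", "topic": TOPIC, "msg": msg})
```

The default orientation (0, 0, 0, 1) is the identity quaternion, so sending only xyz coordinates leaves the yaw at zero.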

Method 3.5: Waypoint publishing by WebSocket in Grasshopper using the Bengesht plugin

- Waypoints are generated by dividing the curves and published as xyz coordinates

- Orientations are extracted from the angle between the perpendicular and the direction of the focus object

- Orientations are converted from Euler angles to quaternions

- The target pose is published to the topic: mavros/setpoint_position/local
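The orientation steps in this list can be sketched as follows. Instead of measuring the angle against the curve perpendicular as done in Grasshopper, this simpler variant computes the heading toward the focus object directly with `atan2`, assuming a local frame where yaw 0 points along +x and z is up; roll and pitch are kept at zero. The Euler conversion uses the standard Z-Y-X (yaw, pitch, roll) convention. Helper names are hypothetical.

```python
import math


def euler_to_quaternion(roll, pitch, yaw):
    """Convert Z-Y-X Euler angles (radians) to a quaternion (x, y, z, w)."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    return (
        sr * cp * cy - cr * sp * sy,
        cr * sp * cy + sr * cp * sy,
        cr * cp * sy - sr * sp * cy,
        cr * cp * cy + sr * sp * sy,
    )


def yaw_towards(waypoint, focus):
    """Heading (radians, about the vertical axis) that points the front camera at `focus`."""
    return math.atan2(focus[1] - waypoint[1], focus[0] - waypoint[0])


def oriented_setpoints(waypoints, focus):
    """Attach a focus-facing orientation quaternion to every (x, y, z) waypoint."""
    return [(p, euler_to_quaternion(0.0, 0.0, yaw_towards(p, focus))) for p in waypoints]
```

Each resulting (position, quaternion) pair maps directly onto the position and orientation fields of the pose published to mavros/setpoint_position/local.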

Localization Issues

The HTC Vive tracker had trouble keeping track of its pose. Contributing factors included:

- Infrared interference from the RealSense IR emitter

- Drone vibrations

- Calibration

Future Developments

In the future, we could extend this technology to send drones on predetermined paths to autonomously record a thermal point cloud of a building or space, in order to understand its thermal properties in more detail than current methods allow. Thermal leaks could be identified more clearly, giving a better overview of the building's properties and hopefully reducing energy use.

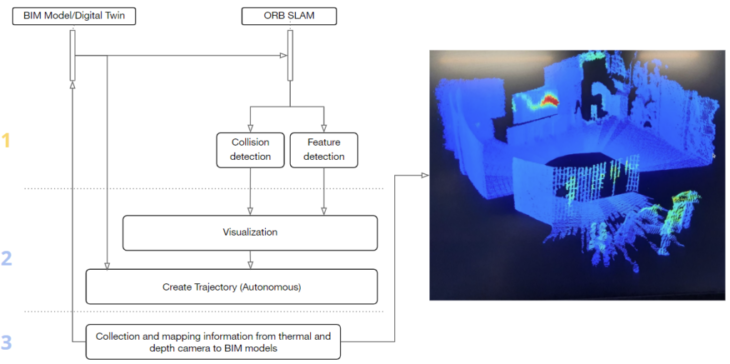

To do this at a larger scale, the drone would need to navigate complex paths in many different scenarios, meaning we would need SLAM (Simultaneous Localization and Mapping) to navigate the space safely.

Multispectral Cognification – Drone Control and Path Planning is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master in Robotics and Advanced Construction in 2021/2022

by:

Students: Alfred Bowles, Tomas Quijano, Vincent Verster, Abanoub Nagy, Arpan Mathe

Faculty: Sebastian Kay, Ardeshir Talaei, Vincent Huyghe.