The challenge

The project addresses a design problem in urban regeneration. Cities are in perpetual motion: new buildings are created and old ones demolished, so voids inevitably appear in the existing urban fabric. An architect filling such a void must decide which approach to take for the new building. There are two extremes to choose from: imitating the existing architectural language or deliberately contrasting with it. Our proposal is to use machine learning as a tool that guides the architect toward a golden middle way by learning from the existing architectural language to generate new forms that fit the context.

Data preparation

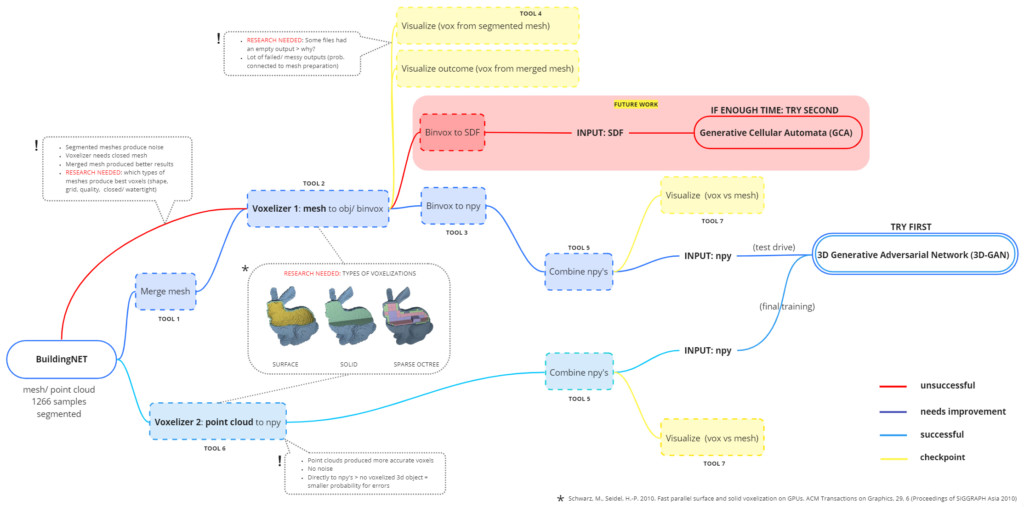

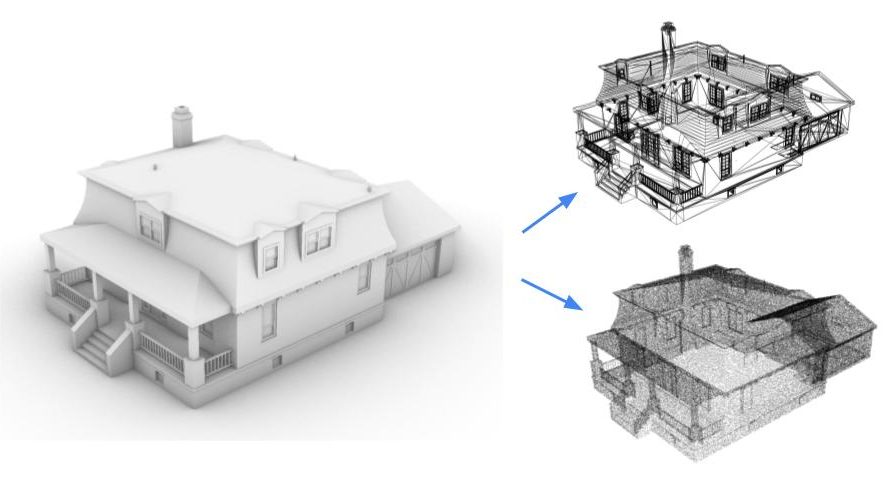

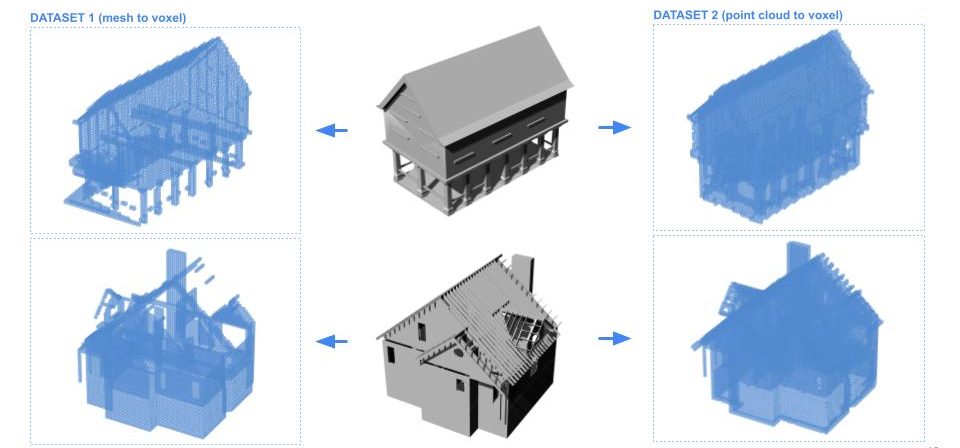

At first we considered experimenting with two different ML models that could learn the architectural language of existing buildings: generative cellular automata and a 3D generative adversarial network (3D-GAN). With this in mind we started to prepare our dataset. As a case study, we acquired a dataset of residential buildings from BuildingNET, consisting of 1266 segmented samples available as mesh and point cloud models. To satisfy the input requirements of both ML models, we needed to convert the mesh models into voxels and then into NumPy arrays.

However, because of the complex meshing of the building models, the mesh dataset produced faulty voxelizations. At this point we chose to switch to the point clouds, which were expected to produce more orderly voxel models. That meant we could proceed only with the 3D-GAN model.
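The conversion from a point cloud to a voxel grid stored as a NumPy array can be sketched as follows. This is a minimal illustration, not the code used in the project: the function name `voxelize_point_cloud`, the uniform scaling to the longest bounding-box side, and the default resolution of 64 (chosen to match the 64x64x64 model output) are all assumptions.

```python
import numpy as np


def voxelize_point_cloud(points: np.ndarray, resolution: int = 64) -> np.ndarray:
    """Convert an (N, 3) point cloud into a boolean occupancy grid.

    The cloud is scaled uniformly (aspect ratio preserved) so that its
    longest bounding-box side spans the full grid. Hypothetical helper.
    """
    points = np.asarray(points, dtype=np.float64)
    if points.ndim != 2 or points.shape[1] != 3:
        raise ValueError("expected an array of shape (N, 3)")

    mins = points.min(axis=0)
    extent = (points.max(axis=0) - mins).max()
    if extent == 0:
        extent = 1.0  # degenerate cloud: all points coincide

    # Map coordinates to voxel indices and clip the upper boundary,
    # so points on the far face land in the last voxel.
    idx = np.floor((points - mins) / extent * resolution).astype(int)
    idx = np.clip(idx, 0, resolution - 1)

    grid = np.zeros((resolution,) * 3, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid


# Usage with a synthetic cloud (stand-in for a real building sample).
rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 10.0, size=(5000, 3))
grid = voxelize_point_cloud(cloud)
print(grid.shape, int(grid.sum()), "occupied voxels")
```

Because every point simply marks the voxel it falls into, this approach does not depend on the mesh being closed or watertight, which is one plausible reason the point clouds voxelized more cleanly.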

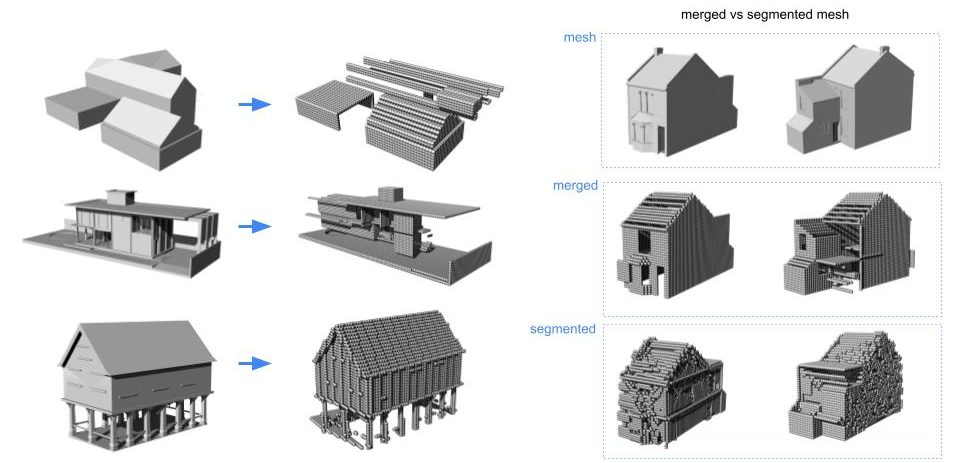

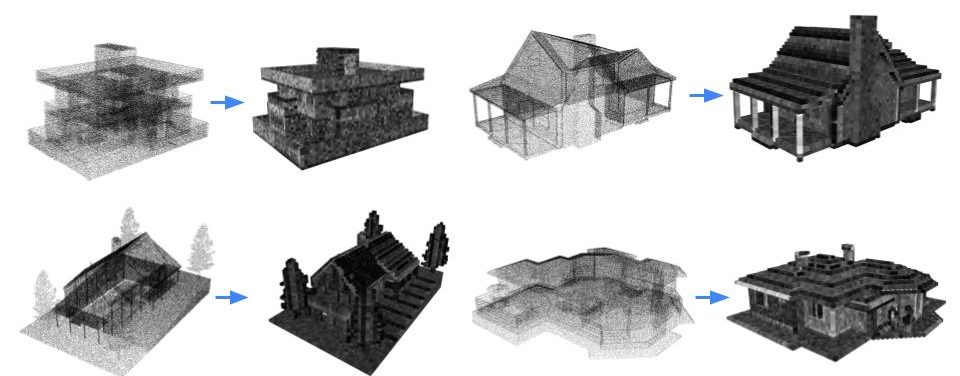

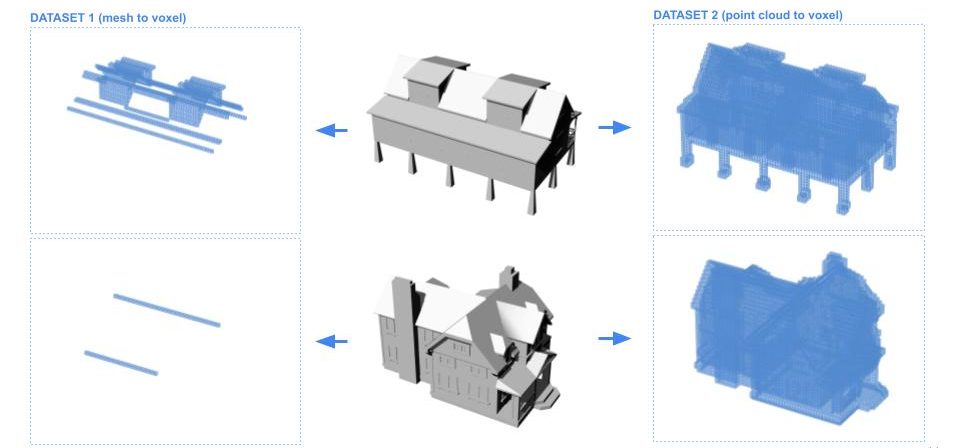

The dataset manipulation and preparation was done entirely in Python to save time when dealing with such a large dataset. First we visualized all the mesh models to get an overview of what the dataset contained. It quickly became obvious that the dataset was not as clean as we had hoped. Many samples had trees, cars, people and gardens attached to the buildings, and some were even missing a roof. Due to time constraints it was not possible to clean all 1266 samples. The voxelization results illustrate the difference: the mesh-to-voxel process created noisy and faulty results, and merging the segmented meshes achieved only a small improvement. The point clouds, by contrast, created correct and clean results.

For a better comparison we visualized the NumPy arrays created from both datasets, which makes the limitations of the mesh-to-voxel process clear.
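A visual comparison can be complemented by a simple number. The sketch below was not part of the original workflow: it assumes that "noise" shows up as occupied voxels with no occupied face neighbor, and the helper name `isolated_voxel_fraction` is hypothetical.

```python
import numpy as np


def isolated_voxel_fraction(grid: np.ndarray) -> float:
    """Fraction of occupied voxels that have no occupied face neighbor."""
    occ = np.asarray(grid, dtype=bool)
    total = int(occ.sum())
    if total == 0:
        return 0.0

    # Pad with empty voxels so shifts never wrap occupied cells around.
    padded = np.pad(occ, 1, constant_values=False)
    core = (slice(1, -1),) * 3

    has_neighbor = np.zeros_like(occ)
    for axis in range(3):
        for shift in (-1, 1):
            has_neighbor |= np.roll(padded, shift, axis=axis)[core]

    isolated = occ & ~has_neighbor
    return float(isolated.sum()) / total


# Usage: one stray voxel next to a solid 2x2x2 block.
demo = np.zeros((8, 8, 8), dtype=bool)
demo[2:4, 2:4, 2:4] = True
demo[6, 6, 6] = True
print(isolated_voxel_fraction(demo))
```

Running such a measure over the arrays from both conversions would give a rough per-dataset noise score, although it only captures single stray voxels and not larger faulty regions.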

Machine Learning with 3D Generative Adversarial Network (3D-GAN)

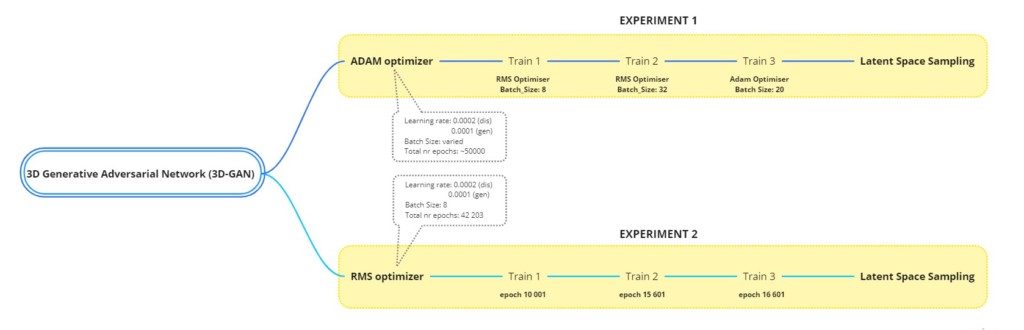

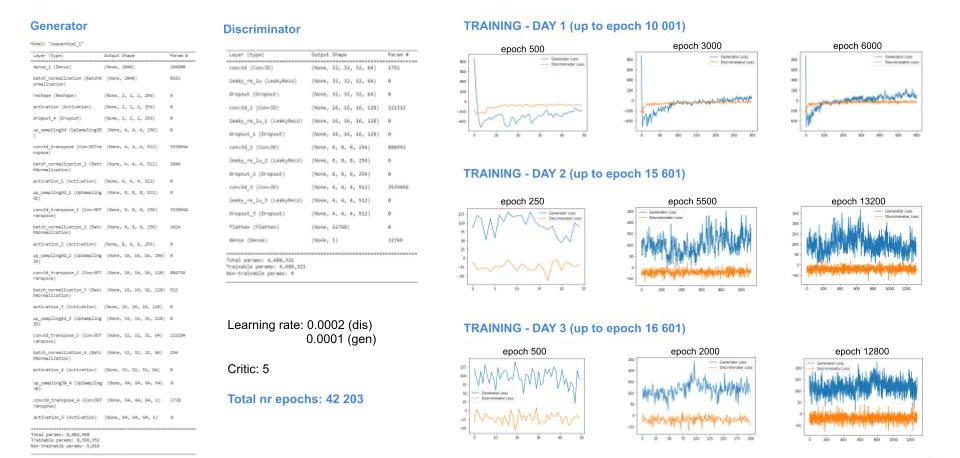

We ran two parallel workflows during training. Experiment 2 follows the standard setup and trains with the same parameters throughout. Experiment 1, in contrast, is more exploratory and tests the consequences of certain training alterations. The model architecture is the same in both.

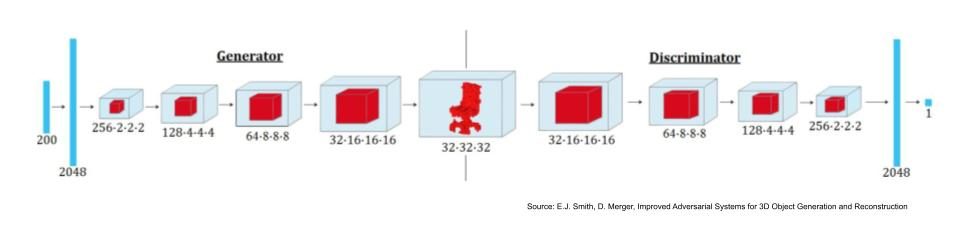

For our machine learning we used the 3D GAN model, which outputs 64x64x64 voxels in three-dimensional space through its dense network. In this model the generator and discriminator are in constant battle: the generator learns to create more and more realistic 3D voxel models, while the discriminator learns to better distinguish between the fake and real 3D voxel models fed into the 3D GAN. The goal is to train the model to generate varied, close-to-realistic 3D shapes.
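The battle between the two networks is usually expressed through two loss values. The following sketch assumes the common binary cross-entropy formulation with the non-saturating generator loss. The write-up does not state which loss the project used, so the function names and the formulation are illustrative only.

```python
import numpy as np


def binary_cross_entropy(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predictions in (0, 1) and 0/1 targets."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """D is rewarded for scoring real samples as 1 and generated samples as 0."""
    real_term = binary_cross_entropy(d_real, np.ones_like(d_real))
    fake_term = binary_cross_entropy(d_fake, np.zeros_like(d_fake))
    return real_term + fake_term


def generator_loss(d_fake: np.ndarray) -> float:
    """G is rewarded when D scores generated samples as 1 (non-saturating form)."""
    return binary_cross_entropy(d_fake, np.ones_like(d_fake))


# Usage: discriminator scores for a batch of real and generated voxel models.
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.3, 0.2])
print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

With these example scores the discriminator is winning: its loss is low while the generator loss is high. Loss curves like the ones discussed in the experiments track how this balance shifts over training.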

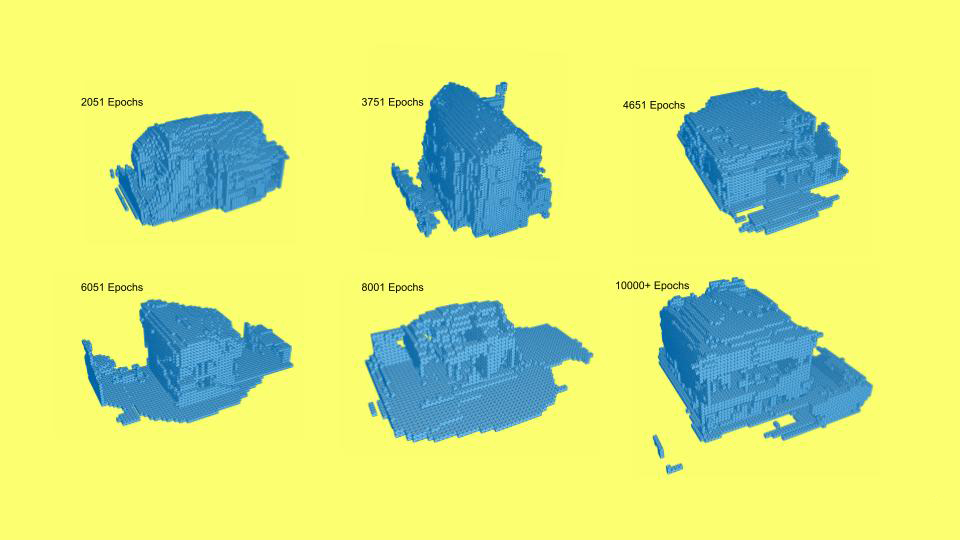

Experiment 1

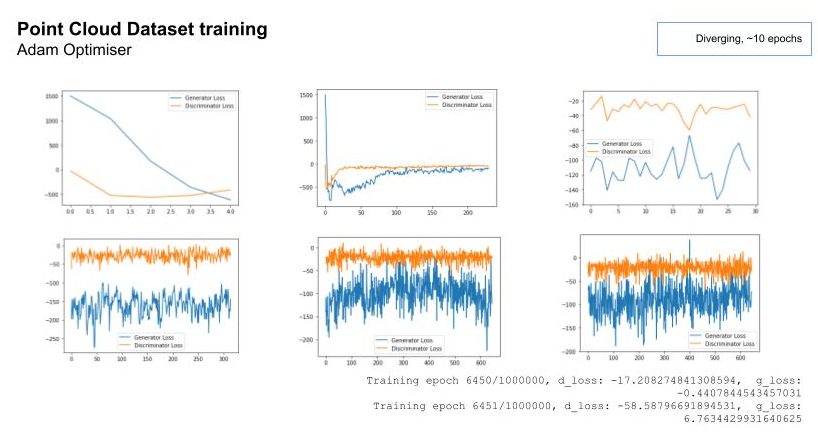

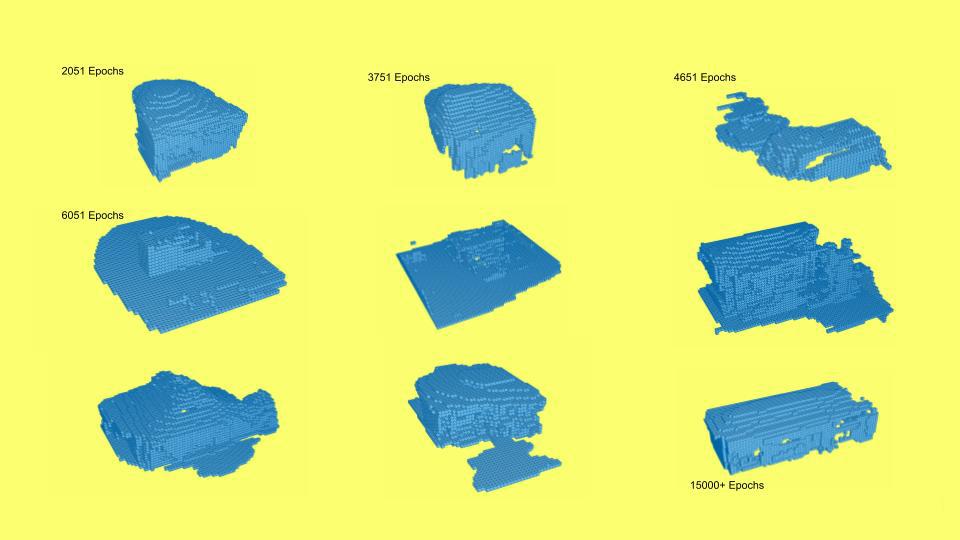

First, the model was trained on the mesh-to-voxel dataset with the RMS optimizer and then with the Adam optimizer. RMS never converged, while Adam converged right away but after a certain point showed no generator losses. We took a step back to reconsider our dataset and, upon visualization, found errors in the mesh-to-voxel conversion that produced the model input. So, as mentioned earlier, we switched to the point cloud dataset, which progressed through training without problems. With the previous settings and the Adam optimizer, the losses decreased.

The model also produced respectable 3D results.

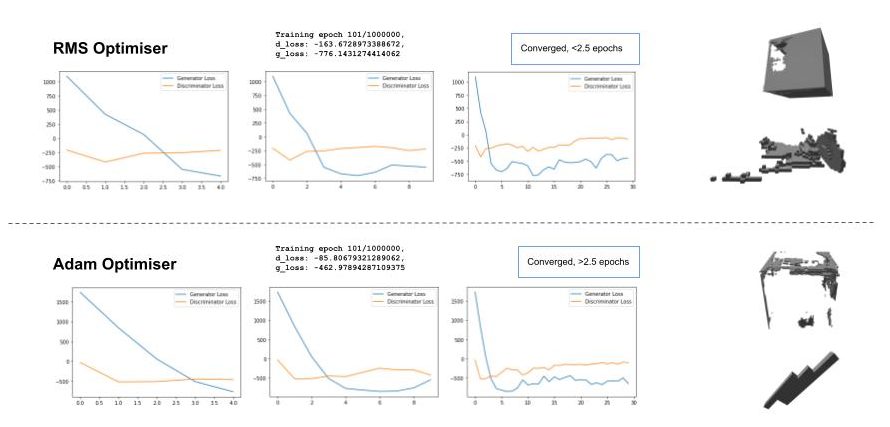

However, we became wary of not having tested RMS against Adam on the point cloud dataset. The loss graphs show little difference in training between the two, although the gap between generator and discriminator differs slightly. We gave priority to the generated objects, where the two optimizers clearly differ: RMS produced more closely packed voxels.
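For readers unfamiliar with the two optimizers, their update rules can be sketched in a few lines. This assumes that "RMS" refers to RMSprop. The hyperparameter defaults below are commonly used values, not the settings from the experiments, and the toy quadratic objective is only a stand-in for the GAN losses.

```python
import numpy as np


def rmsprop_step(w, grad, state, lr=1e-3, rho=0.9, eps=1e-8):
    """RMSprop: scale the gradient by a running average of its square."""
    state["v"] = rho * state["v"] + (1.0 - rho) * grad ** 2
    return w - lr * grad / (np.sqrt(state["v"]) + eps)


def adam_step(w, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: RMSprop-style scaling plus momentum, both bias-corrected."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad ** 2
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)


def minimize_quadratic(step_fn, state, steps=200, lr=0.05):
    """Minimize f(w) = sum(w**2) from a fixed start (toy objective)."""
    w = np.array([1.0, -2.0])
    for _ in range(steps):
        grad = 2.0 * w  # gradient of sum(w**2)
        w = step_fn(w, grad, state, lr=lr)
    return w


print("RMSprop:", minimize_quadratic(rmsprop_step, {"v": 0.0}))
print("Adam:   ", minimize_quadratic(adam_step, {"t": 0, "m": 0.0, "v": 0.0}))
```

The only structural difference is the momentum term `m` in Adam, which smooths the update direction across steps. That is one possible explanation for the two optimizers producing differently packed voxels from similar-looking loss curves, though the write-up does not test this.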

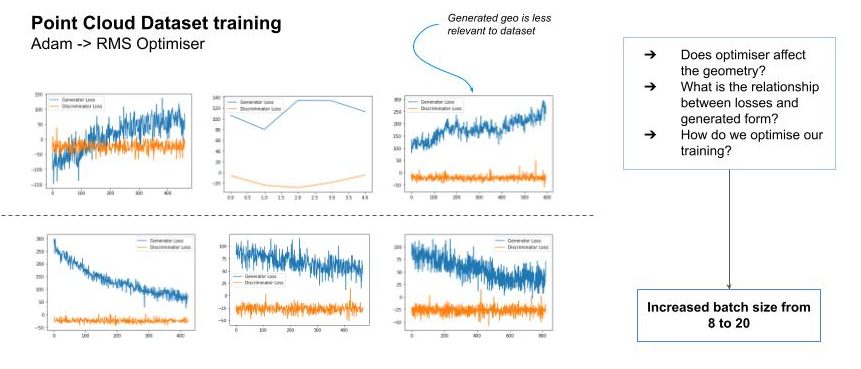

Giving RMS a go, we sped up learning with a batch size of 32, but soon found that the losses simply diverged and that the generated geometry was less relevant to, or in sync with, our starting dataset. We decided to go back to Adam and increase the batch size to 20 rather than 32, since 32 exceeded the Google Colab GPU limits. It is noticeable how quickly the losses then begin to converge.

Learning improved considerably. The output is intricately detailed, with porches, windows and entranceways, and we hope it will improve further with a few more days of training.

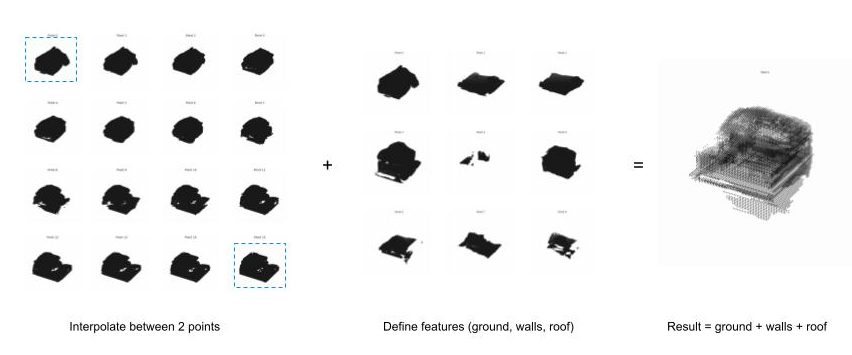

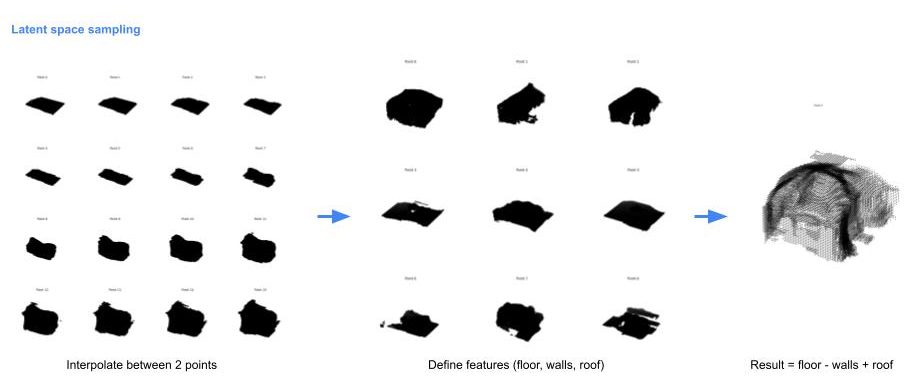

This model was then sampled in the latent space, with interpolation running from A to B. Features were selected in terms of ground, walls and roof, with a successful end result.
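Interpolating from A to B in the latent space can be sketched as a straight line between two latent vectors. This is a minimal illustration, assuming linear interpolation and a 200-dimensional latent vector. Neither detail is stated in the write-up, and the name `interpolate_latent` is hypothetical.

```python
import numpy as np


def interpolate_latent(z_a, z_b, steps: int = 8) -> np.ndarray:
    """Return `steps` latent vectors evenly spaced on the line from z_a to z_b."""
    z_a = np.asarray(z_a, dtype=np.float64)
    z_b = np.asarray(z_b, dtype=np.float64)
    t = np.linspace(0.0, 1.0, steps).reshape(-1, 1)  # column of blend weights
    return (1.0 - t) * z_a + t * z_b


# Usage: two random latent codes standing in for samples A and B.
rng = np.random.default_rng(42)
latent_dim = 200  # assumed size, not confirmed by the write-up
z_a = rng.normal(size=latent_dim)
z_b = rng.normal(size=latent_dim)

path = interpolate_latent(z_a, z_b, steps=8)
print(path.shape)  # one latent vector per row
```

Each row of `path` would then be passed through the trained generator to obtain one intermediate voxel model between A and B.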

Experiment 2

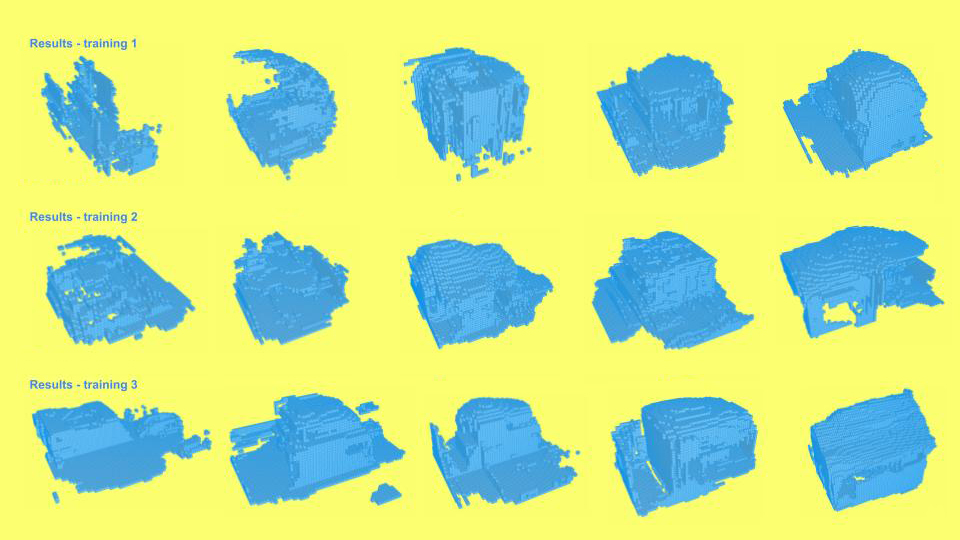

As a second experiment we used only the RMS optimizer throughout the whole process. The training was done in 3 phases and amounted to a bit more than 42 000 epochs in total. In each of the 3 training phases the generator and discriminator behaved a bit differently, but in general the discriminator kept a fairly stable loss while the generator loss fluctuated more, though still following the discriminator.

The results show a slight improvement in learning across the training sessions: the generated voxel models became more precise and clean. It was clearly visible that walls and gardens were the more prominent features, and that the later training phases started to learn more of the different roof shapes.

In the latent space sampling, the floor, walls and roof were extracted as the most distinctive features, but the exercise didn’t produce very clear results.

Conclusions

- Dataset and model input formats:
  - The dataset should be cleaned of extra objects
  - More knowledge is needed about mesh-to-voxel manipulations (shape, grid, quality, closed/watertight, segmented/merged)
  - Point clouds produced more accurate voxels without noise
  - Converting directly to .npy arrays, with no intermediate voxelized 3D object, means a smaller probability of error

- Refinement of the workflow with the help of further experiments:
  - More training is needed to verify which optimizer works better
  - Run one experiment with Adam only
  - Longer training could produce even more accurate models

- Application of 3D GANs in architecture:
  - Lack of precision
  - Computationally expensive
  - At this point best applied to form and massing studies

Urban Regeneration – Designing Infills with Respect to Genius Loci is a project of IAAC Institute for Advanced Architecture of Catalonia developed at Master in Advanced Computation for Architecture & Design (MaCAD) in 2021-2022 by:

Students: Hanna Läkk, Maryam Deshmukh, Faezeh Pakravan

Faculty: Oana Taut, Aleksander Mastalski