Abstract

Artificial intelligence can make the design process more efficient by analyzing complex data to inform design. Traditionally, architects must analyze factors such as sunlight, wind and acoustics. This process can be tedious, and only a limited amount of data can be considered at one time. Machine learning allows the architect to automate these tasks, and computers can analyze far larger datasets than a person could review by hand.

In architectural acoustics in particular, machine learning may prove indispensable. Architectural acoustics deals with the principles of sound generation, propagation and reception. The physical properties of a material determine how much sound it absorbs, reflects or transmits. The interaction between people and sound is an important early design consideration for architects. In some scenarios, such as a theater or performance space, it is desirable for sound to travel and reverberate. In others, mitigation strategies are necessary to reduce noise pollution from traffic or other sources. Machine learning may prove useful in creating acoustical models that assist the designer with decisions and detect underlying patterns and relationships that might not be obvious to a human observer.

The objective of the Sonic Tectonics experiment is to generate an urban noise map that can drive early design decision making. Sounds can affect how comfortable we find a space and may even influence the way we behave. For example, studies have reported that piping classical music into urban areas is correlated with a reduction in crime rates. In this experiment, a convolutional machine learning model was developed to relate types of sound to the surrounding urban morphologies. Audio files were categorized, and a weight or score was assigned to each unique sound. A second machine learning model was created to generate vectors for different mapped areas throughout the city of London. A third machine learning model will be created to embed the audio within the maps. Finally, the model will be tested on a new city to see whether it can generate a sound map, or prediction, for that city. This paper explains the methodology of the experiment and offers a critical analysis of the process and results.

Methodology

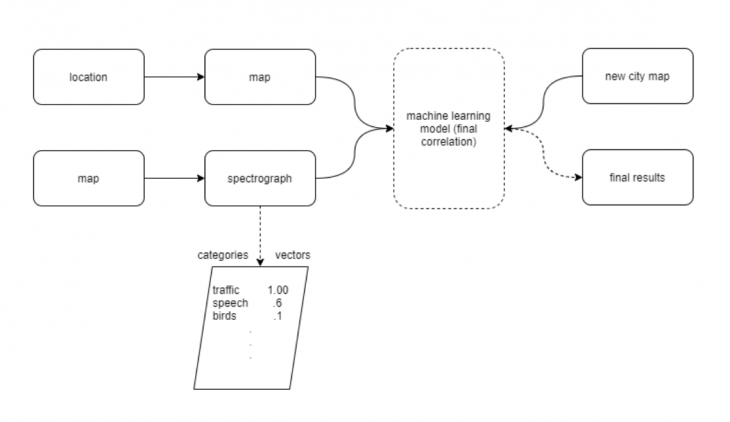

The first step in the experiment was to obtain audio files from specific locations throughout London, our test city. The next phase consisted of creating an ANN regression model to parse out the unique sounds and categorize them. The same script assigned each category a weight, or score, between zero and one. Scores at the lower end of the range were given to desirable sounds, while scores at the higher end were given to noise pollution such as traffic. The result was a new list of the unique sounds and their weights.

In the second part of the process, another machine learning model was created. Maps were resized and loaded into this model so that vectors could be generated based on the type of area and the noise associated with it. In the next phase of the experiment, a third model will be created to apply the weighted sounds to specific locations on the maps. Once the model has been trained to make these associations, a new city map will be tested to predict the soundscape of that urban area (fig. 1). The final model is expected to help inform early design decisions with respect to form and materials.

fig. 1
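
The weighting and mapping steps above can be illustrated without any machine learning. The minimal Python sketch below is a hand-written baseline, not the project's code: it assumes hypothetical category names and weights, and assumes each recording has already been assigned to a (row, col) cell of the resized map. It averages the weights of the sounds heard at each site, which is roughly the kind of location-to-score association the planned third model is meant to learn.

```python
from statistics import mean

# Hypothetical weights on the 0-1 scale described above: values near 0 are
# desirable sounds, values near 1 are noise pollution. The category names and
# numbers are illustrative only, not the project's actual list.
SOUND_WEIGHTS = {
    "birdsong": 0.1,
    "fountain": 0.2,
    "voices": 0.4,
    "construction": 0.8,
    "traffic": 0.9,
}


def location_score(categories):
    """Mean weight of the recognised categories heard at one recording site."""
    known = [SOUND_WEIGHTS[c] for c in categories if c in SOUND_WEIGHTS]
    return mean(known) if known else None


def noise_grid(recordings, rows, cols):
    """Rasterise site scores onto a rows x cols grid of the resized map.

    `recordings` is a list of ((row, col), categories) pairs. Several
    recordings in the same cell are averaged; cells with no usable
    recording stay None so they are not mistaken for quiet areas.
    """
    cells = {}
    for (r, c), categories in recordings:
        score = location_score(categories)
        if score is not None:
            cells.setdefault((r, c), []).append(score)
    return [
        [mean(cells[(r, c)]) if (r, c) in cells else None for c in range(cols)]
        for r in range(rows)
    ]


recordings = [
    ((0, 0), ["traffic", "voices"]),
    ((0, 0), ["traffic"]),
    ((1, 1), ["birdsong", "fountain"]),
]
grid = noise_grid(recordings, rows=2, cols=2)
for row in grid:
    print(row)
```

Leaving unrecorded cells empty rather than filling them with 0 keeps "no data" distinct from "quiet", which matters if such a grid is later used as training targets.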

Critical Analysis

Initially, the process was not well defined, and no more efficient or straightforward way to obtain the final result was apparent. There may be alternative methods for this experiment that would be more efficient and produce similar results. The accuracy of the model cannot be assessed at this point, since the third model has not yet been run.

Perception of sound can vary with an individual’s cultural background, so a study of these differences might change the weights that should be applied to the sounds. If the cultural differences are significant, the urban soundscape prediction would be skewed. Cultural perceptions of sound may be worth researching in future development of the experiment.
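
One way to study these differences would be to collect ratings from listeners of different backgrounds and compute a separate weight for each group. In the minimal Python sketch below, the group labels, the 1-5 annoyance scale and the sound names are all hypothetical; it converts mean ratings to the same 0-1 range used for the sound weights and reports how far the groups disagree on each sound.

```python
from statistics import mean


def group_weights(ratings, scale_max=5):
    """Turn 1..scale_max annoyance ratings into 0-1 weights per (group, sound).

    `ratings` is a list of (group, sound, rating) tuples; a higher rating means
    the listener found the sound more disturbing, matching the convention that
    weights near 1 represent noise pollution.
    """
    buckets = {}
    for group, sound, rating in ratings:
        buckets.setdefault((group, sound), []).append(rating)
    # Linearly map the mean rating from [1, scale_max] onto [0, 1].
    return {key: (mean(vals) - 1) / (scale_max - 1) for key, vals in buckets.items()}


def weight_spread(weights, sound):
    """Largest difference in one sound's weight between any two groups."""
    values = [w for (_, s), w in weights.items() if s == sound]
    return max(values) - min(values) if values else 0.0


ratings = [
    ("group_a", "church_bells", 1), ("group_a", "church_bells", 2),
    ("group_b", "church_bells", 4), ("group_b", "church_bells", 5),
    ("group_a", "traffic", 5), ("group_b", "traffic", 5),
]
weights = group_weights(ratings)
print(weight_spread(weights, "church_bells"))  # 0.75
print(weight_spread(weights, "traffic"))       # 0.0
```

A large spread for a sound, such as the hypothetical church bells here, would suggest that a single global weight could skew the soundscape prediction for some populations.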

The sound weights were assigned manually by the authors. A better option might be to modify the experiment so that crowdsourcing generates the scores for the unique sounds. Another issue is that the sounds are only generally tied to a location. The precise location of each sound source, and the fall-off of sound with distance from it, are not factored into the model at all. As an example of how this might affect design, the sound from the street diminishes as a building rises in height, so mitigation strategies may not be required on the upper levels of the structure. This would result in a more cost-effective solution, since materials would not be wasted where noise pollution is reduced.
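
The fall-off described above can be estimated to first order with geometric spreading in a free field: a point source loses about 6 dB per doubling of distance, and a long line source, often used to approximate steady road traffic, about 3 dB. The Python sketch below applies this to each floor of a facade. The street-level source, the 1.5 m receiver height, the 70 dB reference level and the building dimensions are illustrative assumptions, and the model ignores ground and facade reflections, air absorption and screening by other buildings.

```python
import math


def spread_level(level_ref_db, r_ref, r, source="line"):
    """Free-field sound level at distance r, given a level measured at r_ref.

    A point source loses 20*log10(r/r_ref) dB (about 6 dB per doubling of
    distance); a long line source such as steady road traffic loses
    10*log10(r/r_ref) dB (about 3 dB per doubling).
    """
    if source == "line":
        factor = 10.0
    elif source == "point":
        factor = 20.0
    else:
        raise ValueError("source must be 'line' or 'point'")
    return level_ref_db - factor * math.log10(r / r_ref)


def facade_levels(level_ref_db, r_ref, setback, floor_height, floors,
                  receiver_height=1.5, source="line"):
    """Estimated level at the facade for each floor, ground floor first.

    Assumes the source sits at street level, `setback` metres horizontally
    from the facade, and the receiver on each floor sits `receiver_height`
    metres above that floor's slab.
    """
    levels = []
    for floor in range(floors):
        height = floor * floor_height + receiver_height
        distance = math.hypot(setback, height)  # straight line from the source
        levels.append(spread_level(level_ref_db, r_ref, distance, source))
    return levels


levels = facade_levels(level_ref_db=70.0, r_ref=10.0, setback=10.0,
                       floor_height=3.0, floors=10)
print(f"ground floor: {levels[0]:.1f} dB, top floor: {levels[-1]:.1f} dB")
```

With these illustrative numbers the top floor comes out about 5 dB quieter than the ground floor; whether that justifies relaxing mitigation on upper levels would depend on the actual measured levels and the applicable noise criteria.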

Conclusion

The first two machine learning models were successful in categorizing the audio files and generating vectors from the maps. The final machine learning model is expected to apply the sounds to the maps and train on this data, so that a new city map can be used as an input to predict the urban soundscape and inform design decisions. The process was rather involved, requiring three different machine learning models to achieve the final result. However, once the final model is successfully trained, it should be relatively simple to feed it a new map and predict the urban soundscape. The model also has limitations. The experiment could be developed further by using crowdsourcing to gather more accurate sound weights and by finding a method to identify the precise location of each sound source. These improvements would help Sonic Tectonics support decision making and better cost control in the early design phases of a project.

Credits:

Sonic Tectonics is a project of IAAC, the Institute for Advanced Architecture of Catalonia, developed at the Master of Advanced Computation for Architecture and Design in 2020/21 by:

Students: Shelley Livingston, Alexander Tong

Faculty: Zeynep Aksöz, Mark Balzar