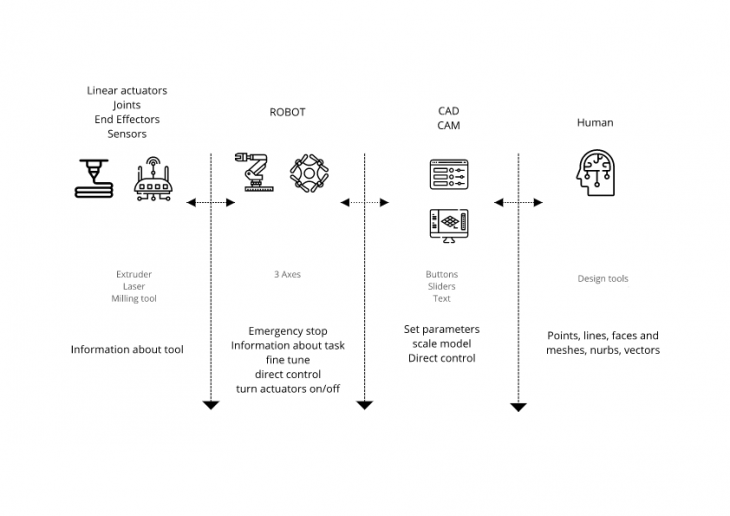

The goal of this post is to identify the key components of an experimental UX for controlling a robot in creative applications.

Interfaces between humans and digital manufacturing tools will increasingly be used by non-technical users.

For example, there are already robotic applications for cooking tasks: robots that chop, cut or mash food. There are also robots that paint, draw or even dance.

How can we define different types of movement?

Generally speaking, we control robots with CAM tools built for specific tasks. These CAM tools tend to calculate most things in a black box, so the user only sees the output and cannot control some aspects of the toolpath calculation. Having control over every step of the movement through a parametric approach can enable more creative applications of the robot. Tools like Kuka PRC, which run inside Rhino3D and Grasshopper, have allowed a wider user base of designers, architects and other non-robotics experts to use these robots in creative applications.
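To make the parametric idea concrete, here is a minimal Python sketch of a toolpath whose every parameter stays visible to the user. The function name `zigzag_toolpath` and the raster pattern are hypothetical illustrations, not how Kuka PRC or any CAM tool actually computes paths.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def zigzag_toolpath(width: float, height: float, step: float, z: float = 0.0) -> List[Point]:
    """Return the corner points of a back-and-forth (raster) path over a
    width x height rectangle, with passes spaced `step` apart along Y.

    Hypothetical example: every parameter is exposed, so the user can
    reshape the path directly instead of relying on a black-box result.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    points: List[Point] = []
    y = 0.0
    left_to_right = True
    while y <= height + 1e-9:  # small tolerance for float accumulation
        x_start, x_end = (0.0, width) if left_to_right else (width, 0.0)
        points.append((x_start, y, z))
        points.append((x_end, y, z))
        left_to_right = not left_to_right  # reverse direction each pass
        y += step
    return points
```

Changing `step` from 5 to 2 immediately gives a denser path, which is exactly the kind of direct control a black-box CAM tool tends to hide.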

3D software tools usually work with points, lines, curves and similar objects. We can use these same objects to define sets of movement instructions, and we could also use them to define movement “styles”.
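One possible way to pair ordinary geometry with a movement “style” is a small data structure like the one below. This is a sketch under assumptions: `Movement`, `MovementStyle` and the three style names are hypothetical, and speeds are assumed to be in mm/s.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple

Point = Tuple[float, float, float]


class MovementStyle(Enum):
    # Hypothetical style names, for illustration only.
    RAPID = "rapid"    # fast repositioning, tool not in contact
    LINEAR = "linear"  # constant-speed straight move
    SMOOTH = "smooth"  # eased start and stop


@dataclass
class Movement:
    """A movement built from ordinary 3D geometry (a polyline) plus a
    style that says *how* the robot should travel along it."""
    points: List[Point]
    style: MovementStyle = MovementStyle.LINEAR
    speed: float = 100.0  # assumed unit: mm/s

    def length(self) -> float:
        """Total polyline length, summed segment by segment."""
        total = 0.0
        for (x0, y0, z0), (x1, y1, z1) in zip(self.points, self.points[1:]):
            total += ((x1 - x0) ** 2 + (y1 - y0) ** 2 + (z1 - z0) ** 2) ** 0.5
        return total
```

Keeping the geometry and the style separate means the same curve drawn in the 3D application can be reused with a different style without redrawing it.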

What does a line commonly look like?

For example, to draw a line in 3D space we could tell a robot to move from P1(X, Y, Z) to P2(X, Y, Z), but other layers of information, such as acceleration, force and speed, are required to emulate a “human” movement. The first challenge is to standardize and define these different movement “types”. G-code handles this by adding extra parameters to a movement command, but it is still limited, e.g. `G0 [E<pos>] [F<rate>] [X<pos>] [Y<pos>] [Z<pos>]`.
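The endpoints alone do not fix how a line is drawn. As an illustration of one extra layer, this sketch computes the duration of a straight move using a trapezoidal speed profile (accelerate, cruise, decelerate). The trapezoidal model is a common motion-planning assumption we adopt here, not something taken from a specific robot controller; distances are assumed in mm, speeds in mm/s and acceleration in mm/s².

```python
import math


def trapezoid_duration(distance: float, v_max: float, accel: float) -> float:
    """Time to travel `distance`, starting and ending at rest, limited by
    top speed v_max and a constant acceleration/deceleration `accel`.
    If the move is too short to reach v_max, the profile is triangular."""
    if distance < 0 or v_max <= 0 or accel <= 0:
        raise ValueError("distance must be >= 0, v_max and accel > 0")
    d_ramp = v_max ** 2 / (2 * accel)  # distance needed to reach v_max
    if 2 * d_ramp >= distance:
        # Triangular profile: accelerate over half the distance, then brake.
        return 2 * math.sqrt(distance / accel)
    cruise = distance - 2 * d_ramp  # distance covered at constant v_max
    return 2 * v_max / accel + cruise / v_max
```

For a 100 mm line with `v_max=10` and `accel=10`, the sketch gives 11 seconds (2 s of ramps plus 9 s of cruising). Lowering `accel` lengthens the ramps and gives a gentler start and stop between the same P1 and P2.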

Being able to DRAW these movements and curves directly in the 3D application could be an interesting way to control these robots. Predefined movement types with different color representations can help users identify each movement by its type.
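A color-coded preview only needs a lookup from movement type to display color. The palette and type names below are hypothetical placeholders, with a neutral fallback so unknown types still render.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palette: one display color per predefined movement type.
MOVEMENT_COLORS: Dict[str, RGB] = {
    "rapid": (255, 0, 0),     # red: travel moves, tool not working
    "linear": (0, 120, 255),  # blue: constant-speed working moves
    "smooth": (0, 200, 0),    # green: eased, "human-like" moves
}
DEFAULT_COLOR: RGB = (128, 128, 128)  # grey for types without a color


def color_for(movement_type: str) -> RGB:
    """Return the preview color for a movement type (grey if unknown)."""
    return MOVEMENT_COLORS.get(movement_type, DEFAULT_COLOR)


def to_hex(rgb: RGB) -> str:
    """Format an RGB triple as a hex string, e.g. for a UI stylesheet."""
    return "#{:02x}{:02x}{:02x}".format(*rgb)
```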

To simulate a set of movements, we need a timeline that lets us preview their order and simulate the movement in real time, both on the computer and on the robot.
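A minimal timeline can be modeled as an ordered list of straight segments with durations, so that the preview can be scrubbed to any point in time. This Python sketch assumes linear interpolation between targets. The `Timeline` class is hypothetical, and a real system would also stream the same sequence to the robot.

```python
from bisect import bisect_right
from itertools import accumulate
from typing import List, Tuple

Point = Tuple[float, float, float]


class Timeline:
    """Orders straight moves in time so they can be previewed before
    running on the robot (hypothetical sketch)."""

    def __init__(self, start: Point):
        self.start = start
        self.targets: List[Point] = []
        self.durations: List[float] = []

    def add(self, target: Point, duration: float) -> None:
        """Append a straight move to `target` lasting `duration` seconds."""
        if duration <= 0:
            raise ValueError("duration must be positive")
        self.targets.append(target)
        self.durations.append(duration)

    @property
    def total(self) -> float:
        return sum(self.durations)

    def position_at(self, t: float) -> Point:
        """Linearly interpolated tool position at time t, clamped to the ends."""
        if not self.targets or t <= 0:
            return self.start
        ends = list(accumulate(self.durations))  # end time of each segment
        if t >= ends[-1]:
            return self.targets[-1]
        i = bisect_right(ends, t)  # index of the segment active at time t
        seg_start = ends[i - 1] if i > 0 else 0.0
        a = self.targets[i - 1] if i > 0 else self.start
        b = self.targets[i]
        f = (t - seg_start) / self.durations[i]  # fraction of segment done
        return tuple(pa + f * (pb - pa) for pa, pb in zip(a, b))
```

Sampling `position_at` at evenly spaced times between 0 and `total` gives the frames of a preview animation.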

Some main parameters can be defined as global variables for each application.
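Global variables could be grouped into one settings object per application, with individual movements allowed to override them. The field names and preset values here are hypothetical placeholders, not recommended machine settings.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class GlobalSettings:
    """Application-wide defaults (hypothetical names and values)."""
    speed: float = 100.0         # mm/s
    acceleration: float = 500.0  # mm/s^2
    safe_z: float = 50.0         # mm, height used for travel moves


# Example presets per application; values are placeholders only.
DRAWING = GlobalSettings(speed=150.0, safe_z=10.0)
MILLING = GlobalSettings(speed=20.0, acceleration=200.0)
# A variant preset derived from an existing one without mutating it.
SLOW_DRAWING = replace(DRAWING, speed=50.0)


def effective_speed(settings: GlobalSettings, override: Optional[float] = None) -> float:
    """A movement uses its own speed if set, otherwise the global default."""
    return override if override is not None else settings.speed
```

Freezing the dataclass keeps a preset from being changed by accident mid-job; new variants are created with `replace` instead.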

Direct control of the robot from the interface, with the ability to drive it from external sensors or data, can also be useful: for example, moving the robot based on sound for dancing, or moving the robot along an axis until a trigger is activated.
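The “move along an axis until a trigger is activated” case can be sketched as a loop that steps the position and checks a sensor callback. Here `jog_until` and the `triggered` callback are hypothetical and the sensor is simulated. The `max_travel` limit is included so that a trigger which never fires cannot drive the robot indefinitely.

```python
from typing import Callable, Tuple

Point = Tuple[float, float, float]


def jog_until(start: Point, axis: int, step: float, max_travel: float,
              triggered: Callable[[Point], bool]) -> Tuple[Point, bool]:
    """Step along one axis (0=X, 1=Y, 2=Z; the sign of `step` sets the
    direction) until `triggered(position)` returns True or `max_travel`
    is reached. Returns the final position and whether the trigger fired.
    On a real robot each step would be a motion command and `triggered`
    would read a sensor; here both are simulated."""
    pos = list(start)
    travelled = 0.0
    while travelled < max_travel:
        if triggered(tuple(pos)):
            return tuple(pos), True
        pos[axis] += step
        travelled += abs(step)
    # Travel limit reached: report whether the trigger fired at the last step.
    return tuple(pos), triggered(tuple(pos))
```

For instance, `jog_until((0, 0, 10), axis=2, step=-1.0, max_travel=20, triggered=lambda p: p[2] <= 2)` stops at Z = 2 and reports that the trigger fired.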

The robot itself should be able to override any external communication for safety and display information in case of failure. It should also be possible to understand and communicate with the tool, both for fine-tuning and for understanding failure scenarios. Is the milling tool too hot? Should the user decrease the speed of the cutting tool? These checks can be programmed into the tool definition to make robots safer.
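Safety rules can travel with the tool definition itself. The sketch below assumes the tool reports its temperature. The class names, thresholds and the “halve the speed” policy are hypothetical illustrations, and on a real robot the controller's own safety system must still take precedence.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ToolStatus:
    temperature_c: float  # reported by the tool's sensor (assumed available)


@dataclass
class ToolDefinition:
    """Hypothetical tool definition that carries its own safety rules."""
    name: str
    max_temperature_c: float   # at or above this, stop
    warn_temperature_c: float  # at or above this, slow down

    def check(self, status: ToolStatus, requested_speed: float) -> Tuple[float, str]:
        """Return (allowed_speed, message) for the UI. Stops the tool above
        the limit and halves the speed in the warning band."""
        t = status.temperature_c
        if t >= self.max_temperature_c:
            return 0.0, f"{self.name}: {t:.0f} C exceeds limit, stopping"
        if t >= self.warn_temperature_c:
            return requested_speed / 2, f"{self.name}: {t:.0f} C, reducing speed"
        return requested_speed, "ok"
```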