NEON LANDSCAPES

Neon Landscapes was inspired by the generative art associated with the Synthwave / Vaporwave music genres. The fantasy cityscapes and landscapes, combined with their iridescent colours, made an ideal dataset for generating further fantasy architectural images. This way, generative art becomes truly generative by propagating itself. Two approaches were tried: StyleGAN and pix2pix. StyleGAN produced compelling results but took multiple days to train. In the interest of time, and to have more deliberate control over the image generation, the project continued with pix2pix. The dataset was collected through web scraping and then prepared for pix2pix using Canny edge detection.

Neon Landscapes Dataset (16 of 190 images)

The dataset sample above shows 16 of the 190 images that were scraped from Google Images using custom scripts built on the Selenium library. Of these 190 images, 150 were used for training and 40 for testing.
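The 150/40 split described above can be sketched as follows. This is a minimal illustration, not the project's actual script: the function name `split_dataset` and the fixed seed are assumptions, and the original text does not say whether the split was random.

```python
import random


def split_dataset(image_paths, n_train=150, seed=0):
    """Shuffle the paths reproducibly and split them into train and test lists.

    Assumption: a random split with a fixed seed; the original post only
    states the resulting sizes (150 training, 40 test).
    """
    paths = sorted(image_paths)   # sort first so the shuffle is deterministic
    rng = random.Random(seed)     # local RNG, leaves the global state untouched
    rng.shuffle(paths)
    return paths[:n_train], paths[n_train:]
```

Calling `split_dataset` on a list of 190 file paths returns 150 training paths and 40 test paths with no overlap between the two lists.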

Neon Landscapes Canny Edges

pix2pix requires paired images for training. These pairs were produced with OpenCV's Canny edge detector: each edge map was joined with its source image to create the AtoB image pairs for training and testing.

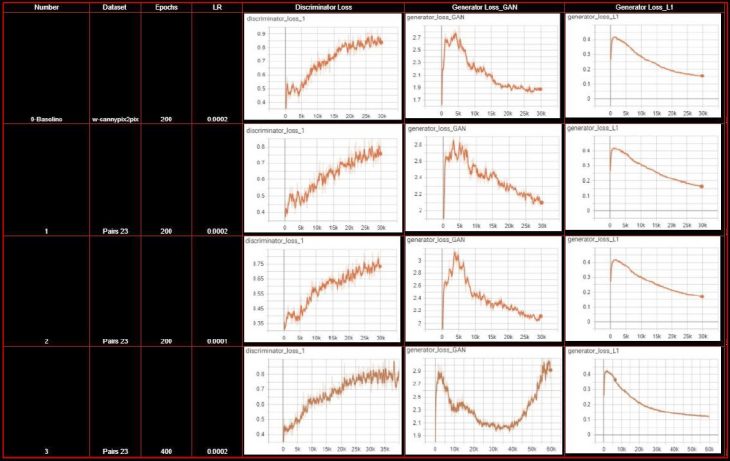

Training Results

The table above compiles the results of all our iterations. The baseline iteration used the parameters from the pix2pix paper that formed the basis of the script (arxiv.org/abs/1611.07004). For the other iterations we changed the number of epochs, the learning rate and the Canny edge threshold. Of those 4 iterations, we picked 3 to present and include in our poster shown below.

Neon Landscapes Poster

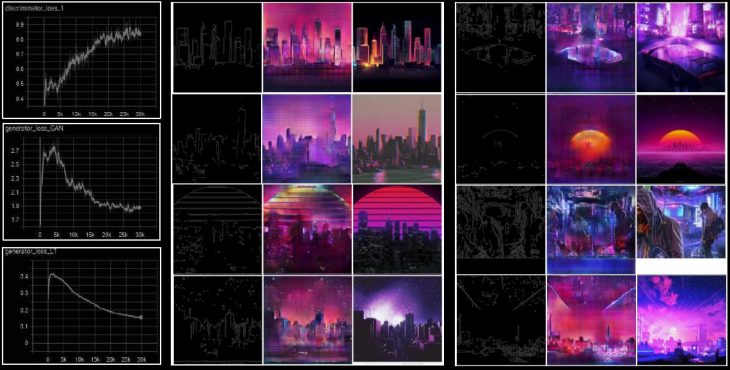

Run 01

For our first iteration, shown in the image above, we adopted the parameters from the pix2pix paper. We ran the training for 200 epochs and kept the learning rate at 0.0002.

Looking at the loss diagrams, we can observe a decreasing L1 loss, which is a good sign. The generator loss decreases while the discriminator loss increases, but both curves seem to plateau after the midpoint of training.

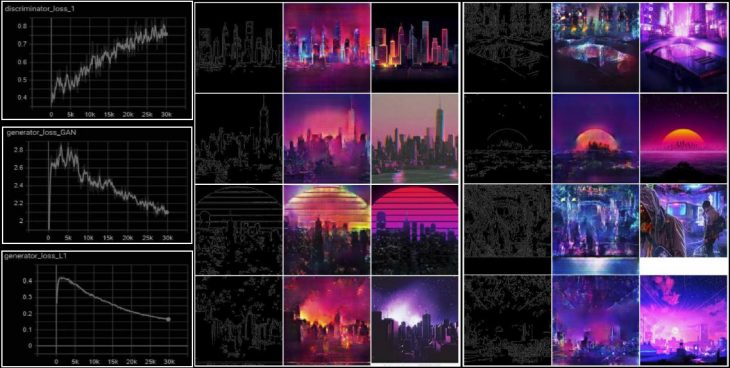

Canny Edge Threshold Change

After this iteration we started to wonder whether the Canny edge maps contained enough edges for some of the images to generate good results, so we decided to update the threshold used for Canny edge generation. As you can see above, this did not change the input images drastically, but for the image on the right it made more features visible in the training inputs.

The next iteration used the same pix2pix parameters, but with the updated input images in the dataset. The loss diagrams look very similar to those of the previous iteration, with a slight change in the end values: the generator loss is higher and the discriminator loss is lower than before.

Visually we can see improvement in the quality of the output images, especially in those that did not have enough edges in the previous trial.

Run 02

In our third iteration we ran the training for 400 epochs, with all other parameters unchanged. Looking at the loss diagrams, the discriminator loss continues to plateau as in the previous trials, while the generator loss starts to trend upwards after around 200 epochs. The L1 loss, however, continues to go down.

Run 03

In the above comparison, the generator's adversarial (cGAN) loss is getting worse while the L1 loss keeps improving, and some of the output images lose sharpness. Because the L1 term still dominates, the regions that are missing edges get filled with fuzziness. In the pix2pix objective the L1 term is weighted 100 times more heavily than the cGAN term.
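For reference, the balance between the two terms comes from the objective in the pix2pix paper, where $x$ is the input edge map, $y$ the target image and $z$ the noise. The paper's experiments use $\lambda = 100$; that our runs kept this default is an assumption based on the 100-to-1 ratio described above.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G),
  \qquad \lambda = 100
\end{aligned}
```

Since the L1 term rewards pixel-wise averages, a generator that mostly minimises it tends to produce blurry fills wherever the input gives it no edges to follow.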

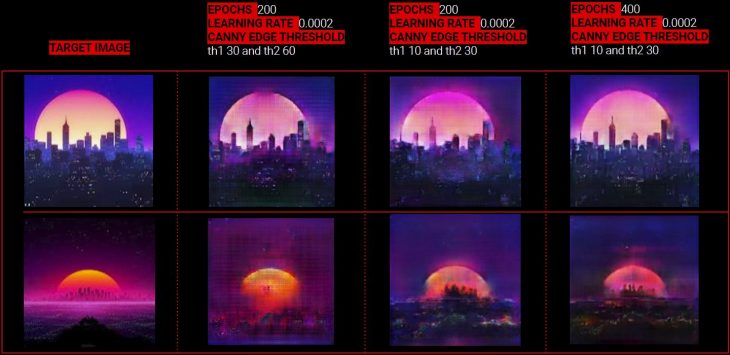

Comparison

For the first 2 images, we can see improvement with each iteration. For the other 2 images, the outputs change between iterations, but it is less obvious which iteration performs best. The 400-epoch output for the first image is an example of the impact of L1 winning over the cGAN loss: the missing edges are filled with fuzziness.

Neon Landscapes Implementation

There are currently 2 ways of applying this project. One is to make hand sketches and generate a Synthwave cityscape from them, as shown in the image set above at the top. The other is to extract Canny edges from existing cityscapes and convert them to Synthwave cityscapes, as shown in the samples above at the bottom.

In conclusion, it would be of interest to explore StyleGAN further and connect it directly with pix2pix, and to explore other image masking and manipulation methods to create more variation. A larger dataset would also benefit training, together with additional data cleaning to make the images more cohesive.

As another iteration, a StyleGAN version of the project was also attempted as shown in the video below.

Neon Landscapes is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Master in Advanced Computation for Architecture and Design in 2020/2021 by students Aleksandra Jastrzebska and Sumer Matharu and faculty Stanislas Chaillou and Oana Taut.