Abstract

Technology has long been subservient to the architect's creative process of shaping reality into physical form. Design follows a traditional path, with the digital process serving only as a means to reach an end product. But what if technology were the building itself, and not only the method for achieving a physical form? What if we used a digital system to create and evolve the spaces around us, making architecture a cyber-physical system?

At the moment we are far from it: we experience digital technology in buildings through clunky, primitive interfaces. Architecture and digital technologies have not advanced at the same pace.

The Third Industrial Revolution resulted from integrating electronics into industrial production systems. A Fourth Industrial Revolution in architecture would use a cyber-physical feedback system to process information in real time and respond to it, which requires a certain amount of intelligence. This cyber-physical architecture lies at the intersection of media and space. We usually perceive media as a sequence of images, not as a space.

However, the proliferation of cyber-physical technology, combined with low-level AI, new types of computational frameworks, and interfaces that interact with the body visually or through touch, suggests that we are on the cusp of media becoming architecture.

There are different layers to this reality; we are looking at an ecosystem of technologies like procedural modelling, computational systems, software, and robotics working together to interact and respond to the environment.

We are talking about a shift from thinking of computational design as a system for producing form to thinking of it as a system for producing behaviour. This is what we call spatial intelligence. It is primarily a cyber-physical experience that allows us to change the material world and the virtual world simultaneously. We can label it Architecture 4.0, envisioning architecture as a platform of presence and action rather than purely a material construct.

Architecture is the oldest human construct, but it is primarily based on physical materials. We envision a less material construct that people still interact with through material systems.

State of the art

We were inspired by the works of Refik Anadol and Behnaz Farahi, in which the human-machine interface has a strong graphical and reactive dimension, and in which the combination of technology and interactivity serves human-centred design.

Concept

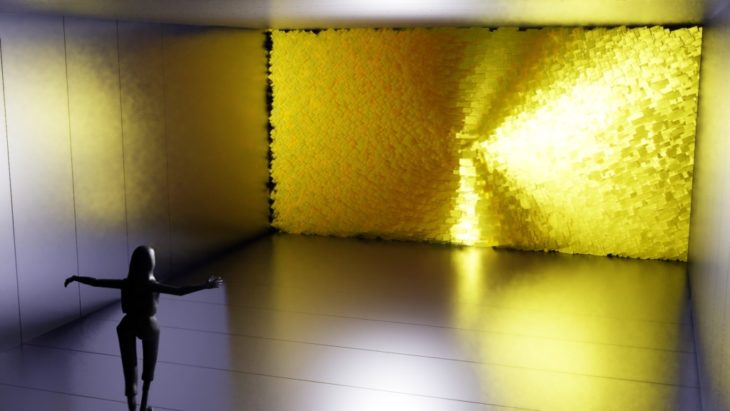

The idea was to create a digital room that generates art from people’s facial emotions: a visual installation that evolves in a sensory way through a human-machine interface and reflects people’s emotions back to them.

Workflow

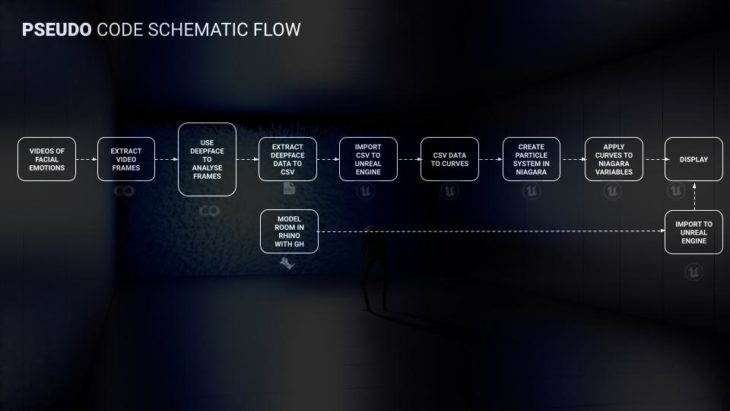

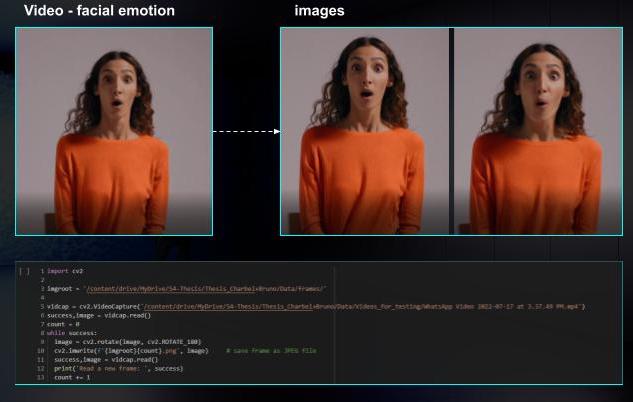

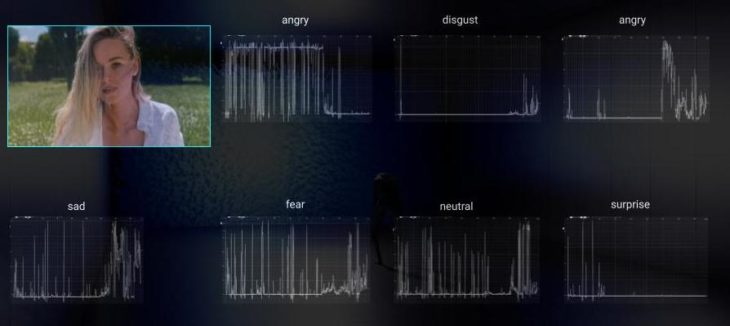

We started by collecting videos of people showing different facial emotions (fear, happiness, surprise, neutral, disgust, sadness, anger …).

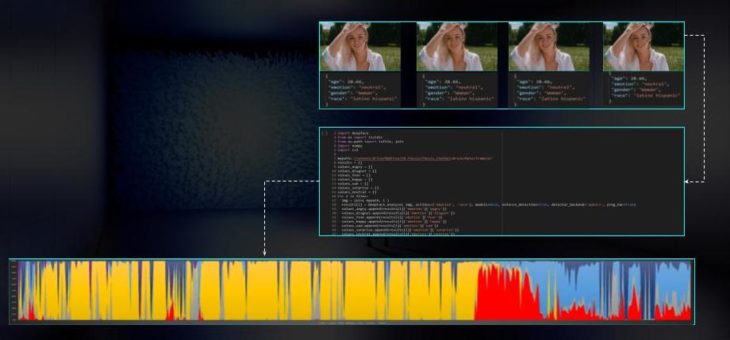

Using OpenCV, the videos were split into individual frames, which were then analyzed with the DeepFace Python package. For each emotion, the algorithm assigns the image a score from zero to a hundred: the more prominent the emotion, the higher its score.
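The frame extraction and scoring step can be sketched roughly as follows. This is a minimal illustration rather than the project's actual script: the function names, the `every_n` sampling parameter, and the choice of `enforce_detection=False` are assumptions made for the example. OpenCV and DeepFace are imported inside the functions that need them, so the small `dominant_emotion` helper can be used on its own.

```python
from typing import Any, Dict, Iterator


def iter_frames(video_path: str, every_n: int = 1) -> Iterator[Any]:
    """Yield every `every_n`-th frame of a video as a BGR NumPy array."""
    import cv2  # imported lazily; requires the opencv-python package

    cap = cv2.VideoCapture(video_path)
    try:
        index = 0
        while True:
            ok, frame = cap.read()
            if not ok:  # end of the video, or the file could not be read
                break
            if index % every_n == 0:
                yield frame
            index += 1
    finally:
        cap.release()


def emotion_scores(frame: Any) -> Dict[str, float]:
    """Return DeepFace's per-emotion scores (0 to 100) for one frame."""
    from deepface import DeepFace  # imported lazily; requires the deepface package

    result = DeepFace.analyze(
        img_path=frame,
        actions=["emotion"],
        enforce_detection=False,  # assumption: do not raise when no face is found
    )
    # Recent DeepFace versions return a list (one entry per face), older ones a dict.
    first = result[0] if isinstance(result, list) else result
    return {name: float(score) for name, score in first["emotion"].items()}


def dominant_emotion(scores: Dict[str, float]) -> str:
    """Pick the emotion with the highest score."""
    return max(scores, key=scores.get)
```

A typical use would be to loop over `iter_frames("clip.mp4", every_n=5)` (a hypothetical file name) and collect `emotion_scores(frame)` for each sampled frame.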

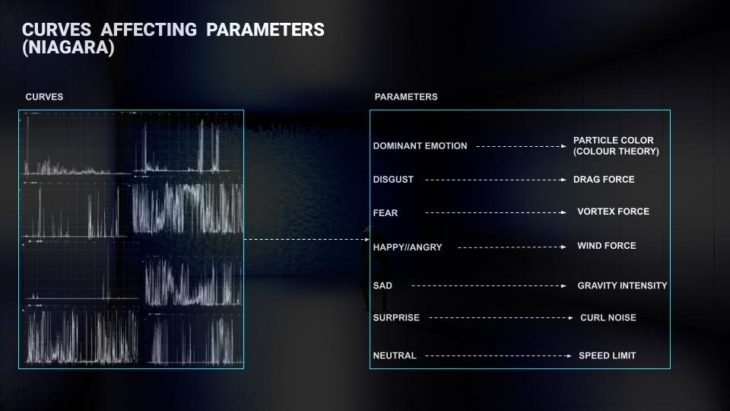

The scores were then exported in CSV format. We imported the CSV files into Unreal Engine and mapped them to curves, which drive the parameters that define the generated art pattern.
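As a rough sketch of the export step, the per-frame scores could be written to CSV with Python's standard `csv` module. The column layout (a `frame` index followed by one column per emotion) is an assumption made for illustration, not the layout used in the project; the emotion labels are the ones DeepFace reports.

```python
import csv
import io
from typing import Dict, Iterable, List, TextIO

# Emotion labels as reported by DeepFace's emotion model.
EMOTIONS: List[str] = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def write_emotion_csv(rows: Iterable[Dict[str, float]], out: TextIO) -> None:
    """Write one CSV row per analyzed frame: frame index, then one score per emotion."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["frame"] + EMOTIONS)
    for index, scores in enumerate(rows):
        # Missing emotions default to 0.0 so every row has the same width.
        writer.writerow([index] + [round(scores.get(name, 0.0), 2) for name in EMOTIONS])


def to_csv_text(rows: Iterable[Dict[str, float]]) -> str:
    """Convenience wrapper that returns the CSV as a string."""
    buffer = io.StringIO()
    write_emotion_csv(rows, buffer)
    return buffer.getvalue()
```

To write a file instead of a string, open it with `open(path, "w", newline="")` and pass the file object to `write_emotion_csv`.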

These parameters are colour, drag force, vortex force, curl noise, speed limit, and gravity force. To transform the curves, data, and parameters into visual art, we used an Unreal Engine plugin called Niagara, the primary tool for visual effects (VFX) inside Unreal Engine 5 (UE5).

As a result, each facial emotion generates a different pattern, creating an ever-changing installation that depends on the facial emotions of the people entering the room.
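In the project, this mapping was done with curves inside Unreal Engine. Purely to illustrate the idea, the sketch below remaps a 0 to 100 emotion score linearly onto parameter ranges in Python. The parameter ranges and function names are hypothetical and are not taken from the installation; colour is left out because it is not a single scalar.

```python
from typing import Dict, Tuple

# Hypothetical output ranges for the scalar parameters named in the text.
PARAM_RANGES: Dict[str, Tuple[float, float]] = {
    "drag_force": (0.0, 2.0),
    "vortex_force": (0.0, 500.0),
    "curl_noise": (0.0, 100.0),
    "speed_limit": (50.0, 1000.0),
    "gravity_force": (-980.0, 0.0),
}


def remap(score: float, low: float, high: float) -> float:
    """Linearly map a 0 to 100 score onto [low, high], clamping out-of-range input."""
    t = min(max(score / 100.0, 0.0), 1.0)
    return low + t * (high - low)


def drive_parameters(score: float) -> Dict[str, float]:
    """Compute every parameter value for a single emotion score."""
    return {name: remap(score, low, high) for name, (low, high) in PARAM_RANGES.items()}
```

A curve in the engine can shape this response non-linearly; the linear remap here is only the simplest case.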

Results

The frame-by-frame analysis of the videos was not ideal, as it produced false positives in certain frames (happy frames being interpreted as sad in some cases where the facial expression was mid-transition). Even so, the results were better than expected: we managed to turn the emotional data into generative art, creating the reactive room we had in mind.

Further steps

In the future, we would like to use this same workflow in a real-time environment. This would be a challenge for the DeepFace algorithm and would require significant processing power.

We would also like to build the physical display and present it at Bright Festival, a festival in Germany.

Credits

MOMENTUM is a project of IAAC, Institute of Advanced Architecture of Catalonia, developed at Master In Advanced Computation For Architecture & Design in 2021/2022 by students: Bruno Martorelli and Charbell Baliss. Thesis Advisor: Gabriella Rossi.