Lost In Cloud

Project Overview

This work is set in a shared, collaborative workspace on the first floor of IaaC’s P59 Atelier. Multiple work groups from different backgrounds use the space, and their tools and physical models are scattered throughout it.

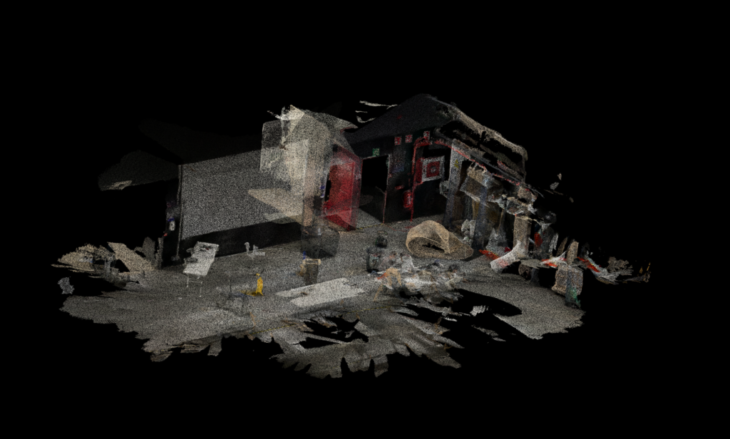

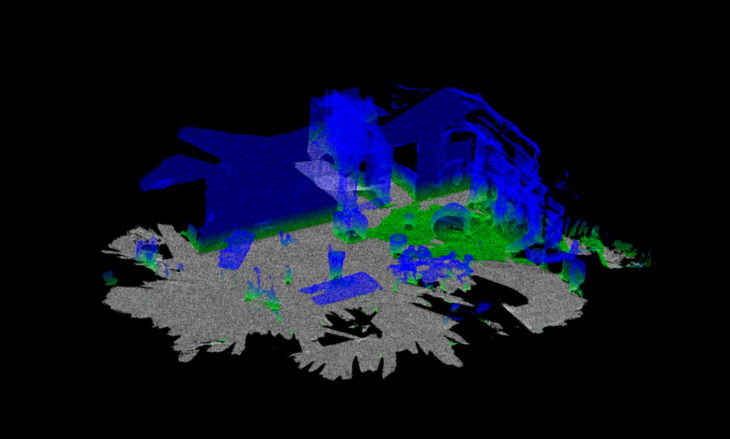

In this project we built a real-time navigation and control system that first scans the working environment, then detects a series of objects in that space and records their classes, which are displayed on a digital point cloud model.

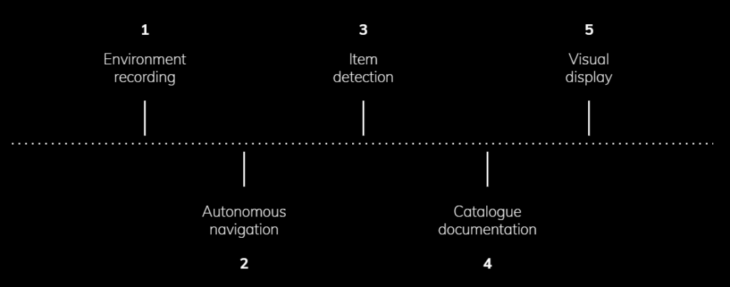

The logic process works as follows:

Environment

We set up an obstacle circuit for our test navigation.

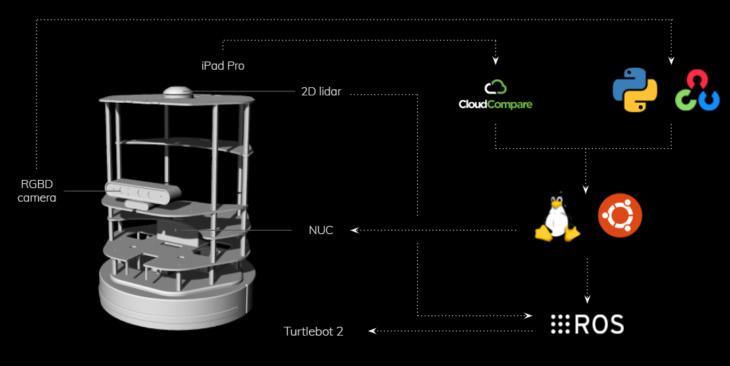

Hardware

The hardware-software framework for the autonomous system was built on a TurtleBot connected to a NUC, a 2D lidar and an RGB depth camera. On the software side, we used ROS, running on Ubuntu on the NUC, to communicate with and navigate the robot; CloudCompare to work with the point cloud obtained from the scan; and MobileNet, run from Python through OpenCV, to detect and catalogue the visual information obtained.

Navigation

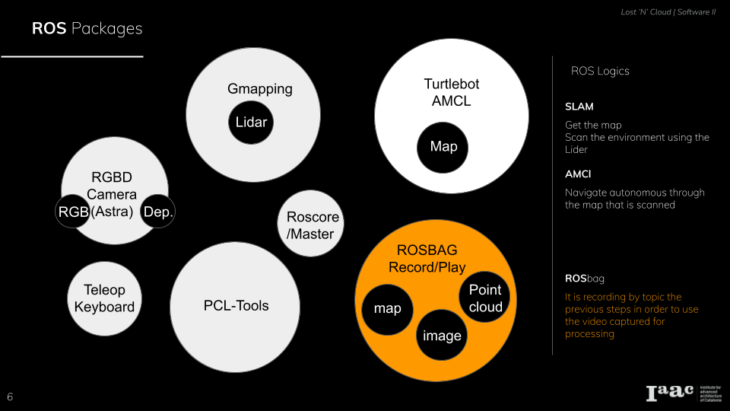

Gmapping

On the hardware side, we used a lidar scanner to capture the floor plan and map the surroundings with gmapping.

Teleop keyboard

To guide the TurtleBot during this first floor plan scan, we used a node from a ROS package: the teleop keyboard node.

AMCL

Using the scanned floor plan, we set a path for the TurtleBot to navigate autonomously. For the autonomous navigation we used the AMCL package.

Astra camera

We used the Astra camera, through the Astra camera ROS package, as our device of choice for recording rosbags of different topics.

Rosbag

A rosbag is recorded during the autonomous navigation. The recorded topics are the navigation path, the point cloud, the video recording and images. These topics are then unpacked and post-processed to extract the required data.

Proof of Concept

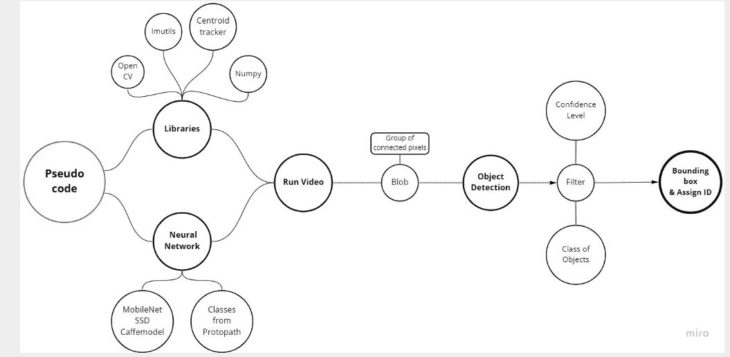

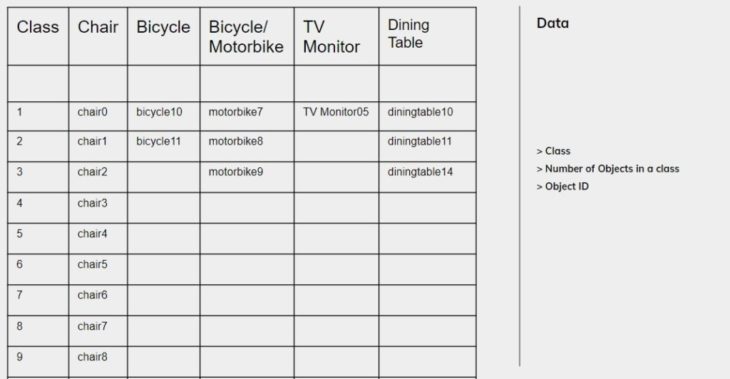

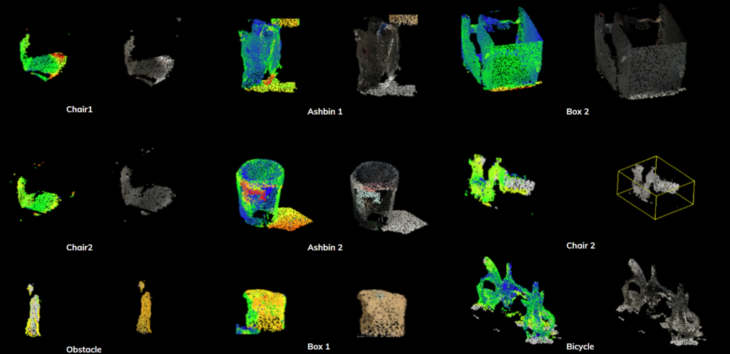

Data Processing

The video data acquired from the TurtleBot is processed through an object detection neural network, MobileNet, with weights taken from a Caffe model. Each detected object is assigned a unique ID and marked with its class. The detected objects are catalogued in an Excel spreadsheet. In this way, the number of objects of a particular class can be quantified and documented.
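The cataloguing step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual script: it assumes the detector has already produced `(frame_index, class_index, confidence)` tuples, that the label list is the one shipped with the commonly distributed MobileNet-SSD Caffe model, and that a CSV file (which Excel can open) is an acceptable stand-in for the spreadsheet. The function names and the 0.5 confidence threshold are hypothetical.

```python
import csv
import io
from collections import Counter

# Assumption: labels of the widely used MobileNet-SSD Caffe model (PASCAL VOC).
# The project may have used a different label set.
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat", "bottle",
           "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse",
           "motorbike", "person", "pottedplant", "sheep", "sofa", "train",
           "tvmonitor"]


def catalogue(detections, min_confidence=0.5):
    """Give every detection above the threshold a unique, increasing ID.

    `detections` is an iterable of (frame_index, class_index, confidence).
    """
    rows = []
    for frame_index, class_index, confidence in detections:
        if confidence < min_confidence:
            continue  # drop weak detections
        rows.append({
            "id": len(rows) + 1,
            "frame": frame_index,
            "class": CLASSES[class_index],
            "confidence": round(confidence, 2),
        })
    return rows


def count_by_class(rows):
    """Count how many catalogued detections belong to each class."""
    return Counter(row["class"] for row in rows)


def to_csv(rows):
    """Serialise the catalogue as CSV text, one row per detection."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["id", "frame", "class", "confidence"],
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical detections: (frame index, class index, confidence).
sample = [(0, 9, 0.91), (0, 15, 0.42), (3, 9, 0.77), (7, 5, 0.66)]
rows = catalogue(sample)
print(to_csv(rows))
print(count_by_class(rows))
```

Note that this sketch assigns a new ID to every detection in every frame. Counting physical objects rather than detections would additionally need some form of tracking across frames, which is not shown here.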

Pseudo code

The resulting catalogue of data from the video acquired through autonomous navigation:

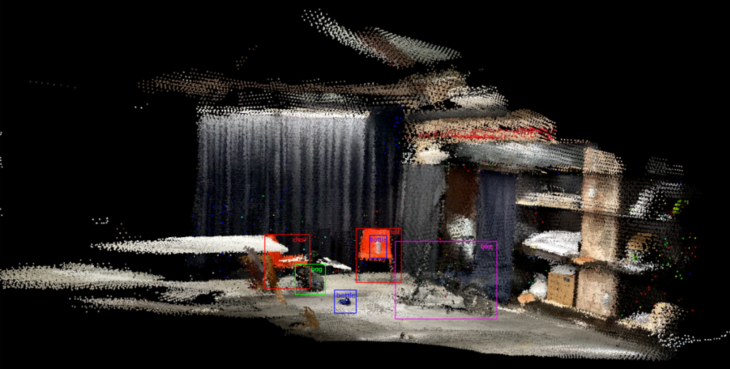

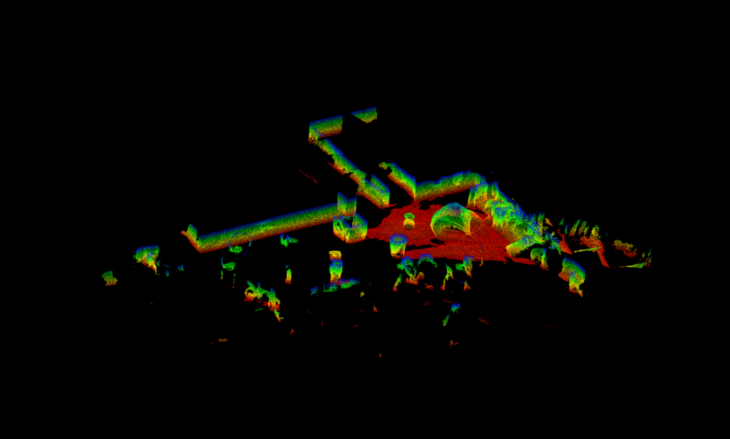

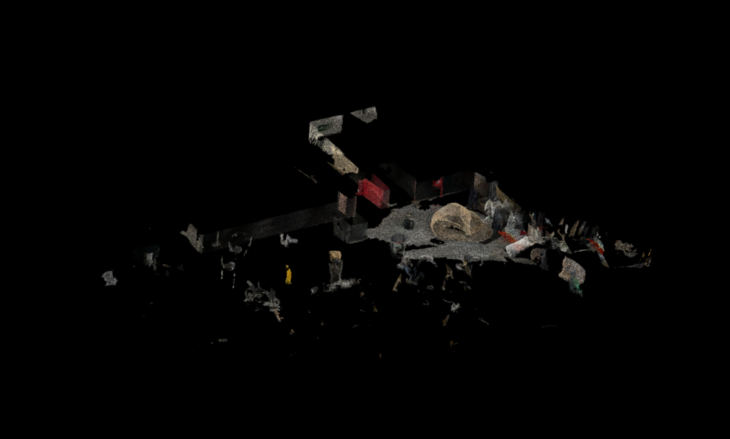

Point cloud segmentation

Segmentation procedure

> Step 1:

Height Ramp

> Step 2:

Euclidean Cluster Extraction

> Step 3:

Color to Grayscale Conversion

> Step 4:

Labeling objects

Color Scale

Current: Blue > Green > Yellow > Red

Steps : 256

Display ranges: 0.25 to 255
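As an illustration of what a height ramp with these settings does, here is a minimal sketch that builds a 256-step Blue > Green > Yellow > Red ramp and colors points by their height. It is not CloudCompare's implementation: the linear interpolation between stops, the function names and the sample heights are all assumptions made for the example.

```python
# Color stops taken from the settings above: Blue > Green > Yellow > Red.
STOPS = [(0, 0, 255), (0, 255, 0), (255, 255, 0), (255, 0, 0)]
STEPS = 256


def build_ramp(stops=STOPS, steps=STEPS):
    """Return `steps` RGB tuples linearly interpolated through `stops`."""
    ramp = []
    segments = len(stops) - 1
    for i in range(steps):
        position = i / (steps - 1) * segments  # runs from 0 to `segments`
        k = min(int(position), segments - 1)   # index of the lower stop
        t = position - k                       # blend factor inside the segment
        lo, hi = stops[k], stops[k + 1]
        ramp.append(tuple(round(a + (b - a) * t) for a, b in zip(lo, hi)))
    return ramp


def color_by_height(heights, ramp):
    """Map each height to a ramp entry: lowest point first, highest last."""
    z_min, z_max = min(heights), max(heights)
    span = (z_max - z_min) or 1.0  # avoid division by zero on a flat cloud
    last = len(ramp) - 1
    return [ramp[round((z - z_min) / span * last)] for z in heights]


ramp = build_ramp()
# Hypothetical heights in meters for three points.
colors = color_by_height([0.0, 0.4, 1.2], ramp)
print(colors)
```

Coloring by height makes objects standing on the floor visually separable from the floor itself, which is why it is a convenient first step before clustering.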

The Extract Indices filter extracts a subset of points from a point cloud based on the indices output by a segmentation algorithm.
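The idea behind index-based extraction can be shown in a few lines. This is a plain-Python sketch of the concept rather than the filter the project used; the `negative` flag is modeled on the option of the same name in PCL's `ExtractIndices` filter, and the sample points and indices are made up.

```python
def extract_indices(points, indices, negative=False):
    """Return the points selected by `indices`, or all the others if `negative`."""
    selected = set(indices)
    if negative:
        return [p for i, p in enumerate(points) if i not in selected]
    return [p for i, p in enumerate(points) if i in selected]


# Hypothetical cloud of four points and a segment made of points 1 and 3.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.2), (2.0, 0.0, 0.0), (1.1, 0.0, 0.3)]
segment = extract_indices(cloud, [1, 3])
remainder = extract_indices(cloud, [1, 3], negative=True)
print(segment)
print(remainder)
```

Running the filter once normally and once with `negative=True` splits the cloud into the segmented object and everything else, so the remainder can be passed on to the next segmentation round.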

Euclidean Cluster Extraction

A simple data clustering approach in a Euclidean sense can be implemented by making use of a 3D grid subdivision of the space using fixed-width boxes, or more generally, an octree data structure.
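The fixed-width grid variant can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the code used in the project: points are `(x, y, z)` tuples, the cell width equals the distance tolerance, and the names `euclidean_clusters`, `tolerance` and `min_size` are chosen for the example.

```python
from collections import defaultdict, deque
from itertools import product


def euclidean_clusters(points, tolerance, min_size=1):
    """Group 3D points linked by chains of neighbors within `tolerance`.

    Returns a list of clusters, each a sorted list of point indices.
    """
    def cell_of(point):
        x, y, z = point
        return (int(x // tolerance), int(y // tolerance), int(z // tolerance))

    # Bin every point into a cubic cell with edge length `tolerance`, so a
    # neighbor can only sit in the same cell or one of the 26 adjacent cells.
    grid = defaultdict(list)
    for index, point in enumerate(points):
        grid[cell_of(point)].append(index)

    def neighbors(index):
        x, y, z = points[index]
        cx, cy, cz = cell_of(points[index])
        for dx, dy, dz in product((-1, 0, 1), repeat=3):
            for other in grid.get((cx + dx, cy + dy, cz + dz), ()):
                ox, oy, oz = points[other]
                if (ox - x) ** 2 + (oy - y) ** 2 + (oz - z) ** 2 <= tolerance ** 2:
                    yield other

    visited = set()
    clusters = []
    for seed in range(len(points)):
        if seed in visited:
            continue
        # Breadth-first growth of one cluster starting from the seed point.
        visited.add(seed)
        queue = deque([seed])
        cluster = []
        while queue:
            current = queue.popleft()
            cluster.append(current)
            for other in neighbors(current):
                if other not in visited:
                    visited.add(other)
                    queue.append(other)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


# Hypothetical cloud: three points close together and a separate pair.
cloud = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.1, 0.0, 0.0),
         (1.0, 1.0, 1.0), (1.04, 1.0, 1.0)]
clusters = euclidean_clusters(cloud, tolerance=0.06)
print(clusters)
```

The grid keeps the neighbor search local: each point is compared only against points in nearby cells instead of against the whole cloud. An octree plays the same role while adapting the cell size to the point density.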

The Lost in Cloud project was developed by Abanoub Nagy, Alberto Martinez, Huanyu Li & Shamanth Thenkan for a two-month software seminar at the Institute for Advanced Architecture of Catalonia.

Faculty: Carlos Rizzo, Vincent Huyghe