identifying vernacular

hypothesis

Vernacular architecture is the product of specific climate, culture, construction methodologies and access to material.

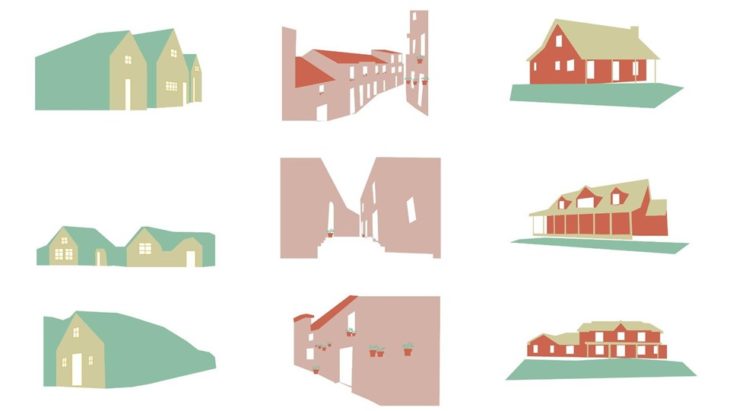

Our architectural task for this project is to reveal that the cultural context inherent in each place can be transferred into form and material and has the potential to be identified by an Artificial Intelligence.

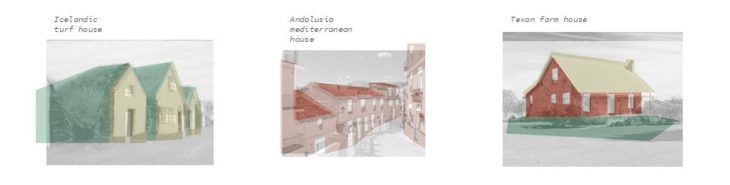

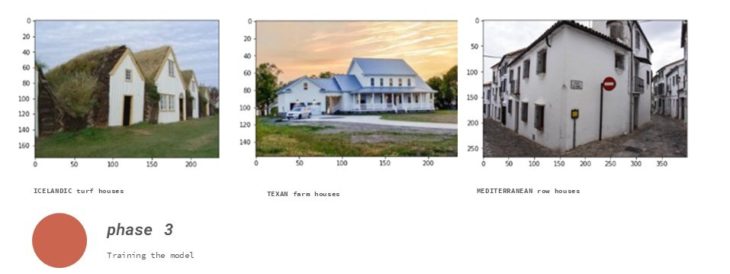

We approached this effort through three case studies, selecting the vernacular styles of our regional locations: Iceland, Andalusia, and Texas.

Using a Convolutional Neural Network (CNN) classification model, we can examine distinctive features in 2D pictures.

Once we have a trained model, we can use a cycleGAN model to evaluate whether those features can be transferred from an image of one vernacular style to another.

We see a correlation between the rise of BIM and automation and the decline of vernacular architecture. As global practices continue to supersede local architecture, and generations pass, we may lose our connection with these traditional methods. As such, it is relevant to have a model that can identify a local vernacular style.

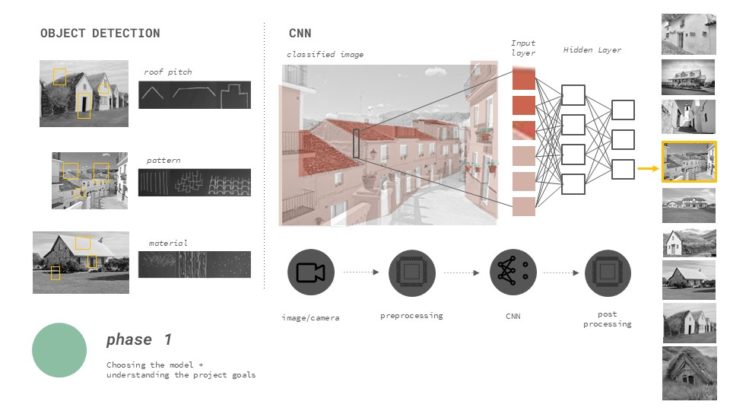

Our project can be broken down into four phases. In the first phase, we determined the best model for our project goals. Next, we gathered our data and pre-processed the images. Then we trained the CNN model. Lastly, we applied the trained model to a cycleGAN model to further explore the possibilities of Generative Architecture.

phase 1

In the first phase, we assumed we would use an object detection model, so we began identifying key features that embody a vernacular style, such as roof pitch, patterns, and materials. We soon realized that we did not need object detection, because we do not need to tell the model where certain elements are located. A CNN model can identify these features through its convolutions.
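
To make the idea of feature detection through convolutions more concrete, here is a minimal sketch in plain Python. The tiny image patch, the hand-written kernel, and the function name `convolve2d` are illustrative assumptions and not part of our model; a trained CNN learns its kernels from the data instead of having them written by hand.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            total = 0
            # Multiply the kernel with the patch underneath it and sum the result.
            for i in range(kh):
                for j in range(kw):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Hypothetical 4x4 grayscale patch: dark wall on the left, bright sky on the right.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written vertical-edge kernel (a trained CNN would learn such filters).
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(patch, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map responds only in the column where the dark and bright regions meet, without anyone telling the filter where that edge is located.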

We determined that a CNN classification model is best because we just need one label, the image class, rather than multiple labels per image.

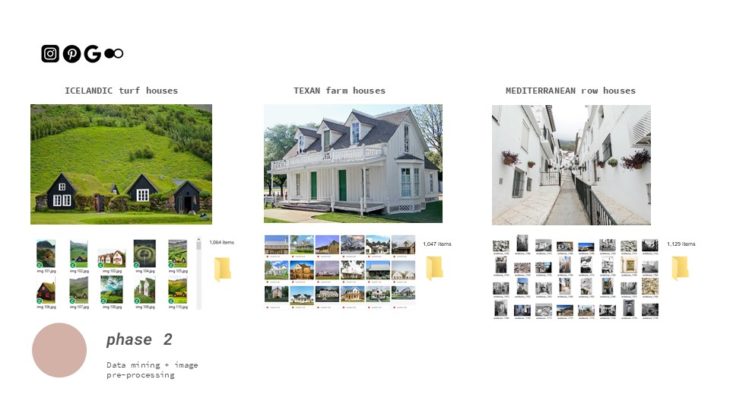

phase 2

In phase 2, we used a Google Chrome plugin to quickly scrape the web for images of our three case studies. We gathered over 1000 images of each class from Instagram, Pinterest, Google, and Tumblr and organized them into separate folders, one per class. This folder organization later serves to label each image with its class in the machine learning phase.

When scraping images for the Texan farmhouse, we were quite selective with the images, as we were concerned with the possible failure of the model. Conversely, we were more liberal with the images we included in the Icelandic and Mediterranean datasets.
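
As a rough illustration of how a folder layout can double as a labeling scheme, the sketch below walks a dataset directory and assigns each image the index of its parent folder. The function name `labels_from_folders` and the list of accepted file extensions are assumptions made for this example, not our actual pipeline.

```python
from pathlib import Path


def labels_from_folders(root):
    """Return (class_names, samples); each sample is (image_path, class_index)."""
    root = Path(root)
    # Every subfolder is treated as one class; sorting keeps the indices stable.
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    samples = []
    for index, name in enumerate(class_names):
        for image_path in sorted((root / name).iterdir()):
            # Assumed set of image extensions; other files are ignored.
            if image_path.suffix.lower() in {".jpg", ".jpeg", ".png"}:
                samples.append((image_path, index))
    return class_names, samples
```

Calling `labels_from_folders("dataset")` on a hypothetical `dataset` directory with one subfolder per style would return the sorted folder names and one labeled entry per image. Keras provides `tf.keras.utils.image_dataset_from_directory`, which infers class labels from subfolder names in a similar way.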

phase 3

image classification using CNN in PyTorch

Our initial research led us to an image classification model that uses a CNN in PyTorch.

The pseudocode for the PyTorch model is as follows:

- Load images dataset

- Transform images from 0-255 to 0-1

- CNN Network

- Optimizer (Adam) and loss function

- Model training and evaluation at epoch 9

  - Train loss: 0.23

  - Train accuracy: 0.96

  - Test accuracy: 0.88

- Output best checkpoint model

- Perform predictions
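
Two of the steps above can be sketched in a few lines of plain Python: scaling pixel values from 0-255 to 0-1, and keeping the checkpoint with the best test accuracy. The function names `scale_pixels` and `best_checkpoint` are hypothetical, and in the example history only the epoch 9 accuracies come from the list above; the earlier epochs are made-up placeholders included so the selection has something to compare.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel values (0-255) to floats in the range 0-1."""
    return [value / 255 for value in pixels]


def best_checkpoint(history):
    """Return the epoch record with the highest test accuracy."""
    return max(history, key=lambda record: record["test_acc"])


# Illustrative history: only the epoch 9 values are the ones listed above.
history = [
    {"epoch": 7, "train_acc": 0.93, "test_acc": 0.85},  # placeholder values
    {"epoch": 8, "train_acc": 0.95, "test_acc": 0.86},  # placeholder values
    {"epoch": 9, "train_acc": 0.96, "test_acc": 0.88},  # listed above
]

print(scale_pixels([0, 51, 255]))          # [0.0, 0.2, 1.0]
print(best_checkpoint(history)["epoch"])   # 9
```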

The PyTorch model was quite successful in correctly identifying the images.

However, this seminar introduced us to Keras in TensorFlow, so we wanted to experiment with a Keras model as well.

image classification using Keras TensorFlow

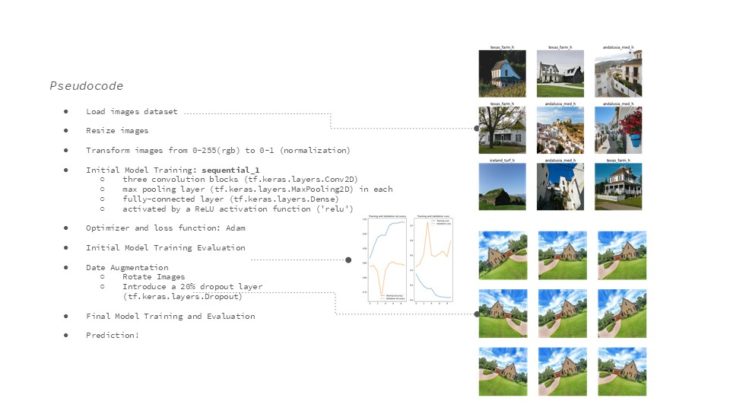

The pseudocode for the Keras model is as follows.

- We load the image dataset and create classes based on their folder names

- Resize the images

- Normalize the images.

- Then we did some initial model training using three convolution blocks, a max pooling layer in each, and a fully connected layer activated by a ReLU function.

- This model uses the Adam optimizer together with a loss function.

- At this stage, we reviewed the results, and we can see the model was underfitting.

- So, we proceeded with the data augmentation stage.

- In this stage, we rotated the images, which introduces more variety and is equivalent to giving the model new images. A model only reads pixels, so changing the position of the pixels is like giving it a new image to train with.

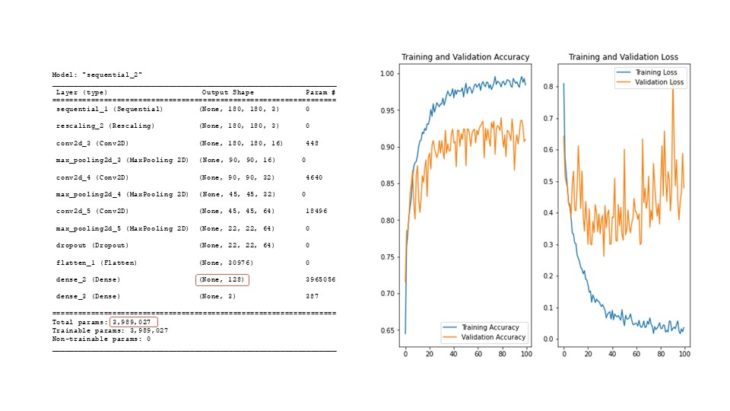

- We also introduced a dropout layer with a 20% rate for further regularization. Dropout is a simple way to prevent neural networks from overfitting.
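
The two regularization steps at the end of this list can be illustrated without any framework. The sketch below is a simplification under stated assumptions: it rotates a tiny pixel grid by exactly 90 degrees (Keras augmentation layers typically rotate by small random angles instead), and it implements the common "inverted" form of dropout. The names `rotate90` and `dropout` are ours for this example.

```python
import random


def rotate90(image):
    """Rotate a 2D pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def dropout(activations, rate, rng):
    """Inverted dropout: zero each value with probability `rate`, rescale the rest."""
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


image = [
    [1, 2],
    [3, 4],
]
# Same pixel values, new positions: to the model this is a different image.
print(rotate90(image))  # [[3, 1], [4, 2]]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
# With rate=0.2, roughly one in five activations is zeroed during training.
print(dropout([1.0] * 10, rate=0.2, rng=rng))
```

Dropout is only active during training; at prediction time all activations are kept, which is why the surviving values are rescaled here.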

model comparison using Keras TensorFlow

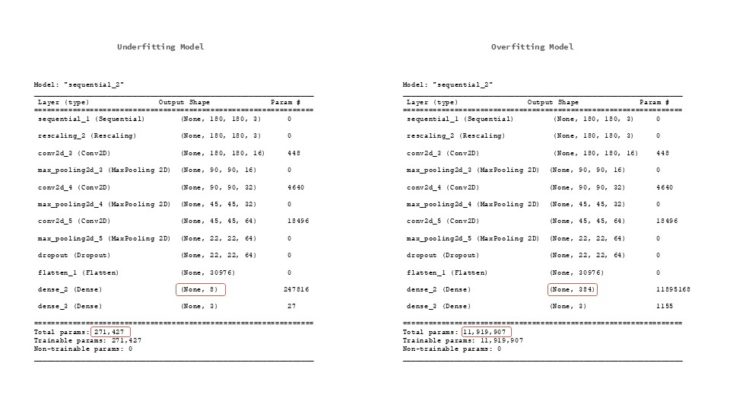

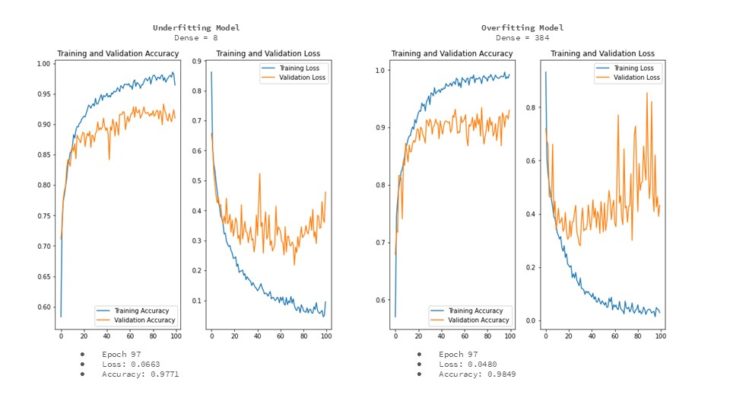

Using the Keras model, we tried three variations; here are the results of the two models we did not go with. We varied only the dense layer, so we can see the direct impact it has on the model.


The smaller dense layer of 8 units results in a slightly underfitting model, with a loss of 0.0663 and an accuracy of 0.9771.

The larger dense layer of 384 units results in an overfitting model, with a loss of 0.048 and an accuracy of 0.9849.
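
One way to see why the size of the dense layer matters is to count its trainable parameters, which grow linearly with the number of units. The sketch below is a worked calculation under assumptions that are not taken from our notebook: 180x180 input images, three convolution blocks that each end in a 2x2 max pooling layer, "same" padding, and 64 filters in the last block.

```python
def flattened_size(height, width, filters, blocks):
    """Feature count after `blocks` conv + 2x2 max-pool stages ('same' padding)."""
    for _ in range(blocks):
        height //= 2  # each 2x2 pooling halves the spatial size, rounding down
        width //= 2
    return height * width * filters


def dense_parameters(inputs, units):
    """Weights plus biases of one fully connected layer."""
    return inputs * units + units


# Assumed input size and filter count (see the paragraph above).
features = flattened_size(180, 180, filters=64, blocks=3)
print(features)  # 30976

for units in (8, 128, 384):
    print(units, dense_parameters(features, units))
# 8 247816
# 128 3965056
# 384 11895168
```

Under these assumptions, the 384-unit layer has about 48 times as many parameters as the 8-unit layer, which gives it far more capacity to memorize the training images.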

image classification prediction results

The final model has a dense layer of 128 units, a loss of 0.0169, and an accuracy of 0.9957. We can see from the plot that the model is neither underfitting nor overfitting.

In the GIF, you can see each prediction along with its confidence, which ranges between 99% and 100%.
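
A common way to turn a classifier's raw output scores into a percent confidence is the softmax function. The sketch below shows that calculation in plain Python; the raw scores, the class order, and the function names are hypothetical and only illustrate how such a confidence value can arise.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def predict(logits, class_names):
    """Return the most likely class and its confidence in percent."""
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return class_names[best], 100 * probabilities[best]


# Hypothetical raw scores for one image; the class order is an assumption.
class_names = ["Andalusia", "Iceland", "Texas"]
label, confidence = predict([1.2, 8.5, 0.3], class_names)
print(f"{label}: {confidence:.2f}% confidence")
```

Because softmax exponentiates the scores, a moderate gap between the top score and the others is enough to push the confidence close to 100%.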

phase 4

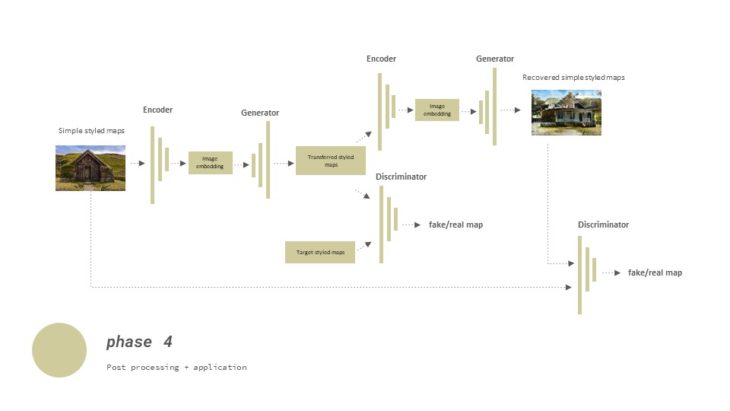

image transfer using a cycleGAN model

After training this model with much success, we wanted to explore an application that can take the project a step further by generating new images.

The cycleGAN model was popularized by the example of translating images of horses into zebras. We wanted to test whether we could transfer images from one vernacular style to another.

The models are trained through unsupervised learning on one collection of images from the source domain and another from the target domain; the images do not need to be paired or related in any way.
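
Training on unpaired images is possible because cycleGAN uses two generators, one per direction, and adds a cycle-consistency term: an image translated from A to B and back to A should end up close to the original. The sketch below illustrates only that term, using toy functions in place of the generator networks; the adversarial losses are omitted, and every name and pixel value is a hypothetical stand-in.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_ab, g_ba):
    """L1 penalty for the A -> B -> A and B -> A -> B round trips."""
    forward = l1_distance(g_ba(g_ab(x)), x)
    backward = l1_distance(g_ab(g_ba(y)), y)
    return forward + backward


# Toy stand-ins for the two generators (the real ones are neural networks).
def to_texan(pixels):
    return [p + 0.1 for p in pixels]


def to_icelandic(pixels):
    return [p - 0.1 for p in pixels]


icelandic = [0.2, 0.5, 0.7]  # hypothetical image from domain A
texan = [0.4, 0.6, 0.9]      # hypothetical, unrelated image from domain B

# The toy generators undo each other, so the loss is close to zero.
print(cycle_consistency_loss(icelandic, texan, to_texan, to_icelandic))
```

Note that the two input images are never compared with each other, which is why the source and target collections do not need to match.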

cycleGAN image transfer model results

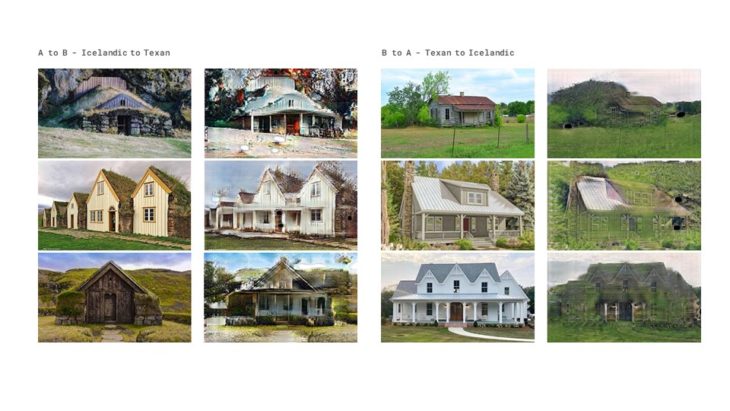

A to B – Icelandic to Texan

After only 50 epochs, the model has started to create a porch on the ground floor of each dwelling, a typical element of the Texan type of architecture.

B to A – Texan to Icelandic

Although the inverse image transfer produces less accurate results for the same number of epochs, the model clearly tries to reduce the façade and replicate the distinctive green roofs.

cycleGAN observations

Approximately 17 hours are needed to train 50 epochs. The more the architectural forms resemble each other, the better the result, but the results are still not conclusive: about 100 epochs and a larger training dataset would be needed. Despite this minimal training, from 40 epochs on, the model can detect patterns of vernacular architecture and start translating them. It would be interesting to study more examples and other architectures, and even to mix more than one style in the translation of images. In general, we found that working with GANs is still too experimental for the tools at our disposal.
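
For a rough sense of what the longer run would cost, the measured 17 hours for 50 epochs can be extrapolated linearly. This is a back-of-the-envelope sketch that assumes a constant time per image on the same hardware; the `dataset_scale` parameter and the doubled dataset are hypothetical.

```python
HOURS_FOR_50_EPOCHS = 17  # measured in our run


def estimated_hours(epochs, dataset_scale=1.0):
    """Linear estimate of training time; assumes constant time per image."""
    return HOURS_FOR_50_EPOCHS * epochs / 50 * dataset_scale


print(estimated_hours(100))                     # 34.0, same dataset
print(estimated_hours(100, dataset_scale=2.0))  # 68.0, hypothetical doubled dataset
```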

conclusions

Our initial hypothesis was that the cultural context inherent in each place is embodied in the architecture of its buildings and can be identified by an Artificial Intelligence. Reviewing the results of our final model, we see that the predictions are mostly correct and the average confidence is very high. Thus, for the three styles we studied, we can conclude that the results support our hypothesis.

This initial case study only included three regions and the model was successful in distinguishing between their vernacular styles. If we could do this project again, we would be less selective with the images, especially since the three vernacular categories are quite different. That said, we are curious to redo the study with a dataset of very similar categories and see how it would perform. Finally, it would be interesting to expand the study to several regions and determine if there are some styles that the model simply cannot learn to identify.

credits

identifying vernacular is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Master in Advanced Computation for Architecture & Design in 2021/22 by

Students: amanda gioia . lucía leva . zoé lewis

Faculty: oana taut . aleksander mastalski