First Iteration

Hand gesture recognition and virtual object interaction with AR

Abstract

The aim of this workshop was to use the HoloLens together with Fologram, a plugin for Grasshopper, to explore and create new AR workflows.

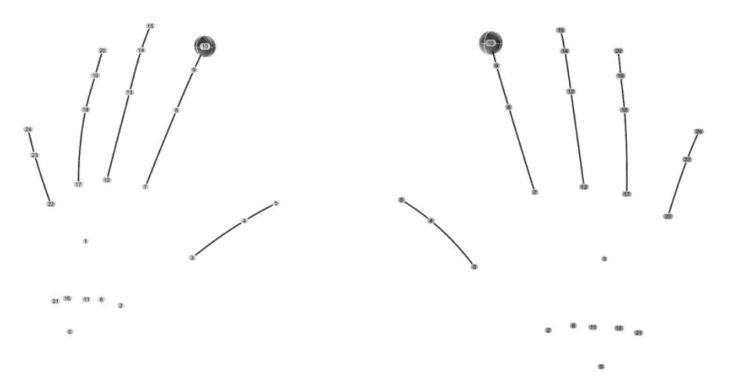

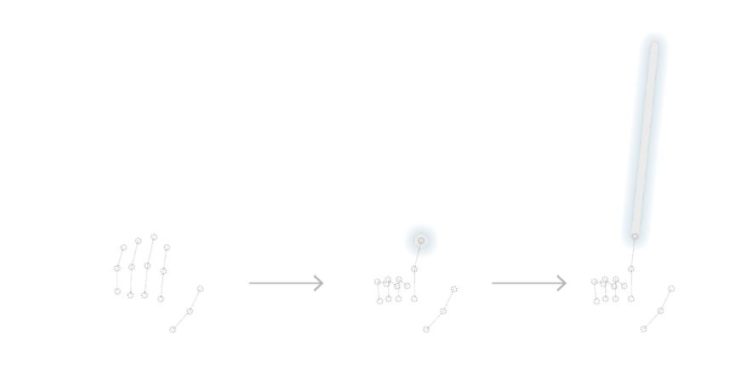

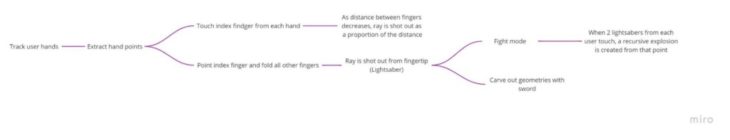

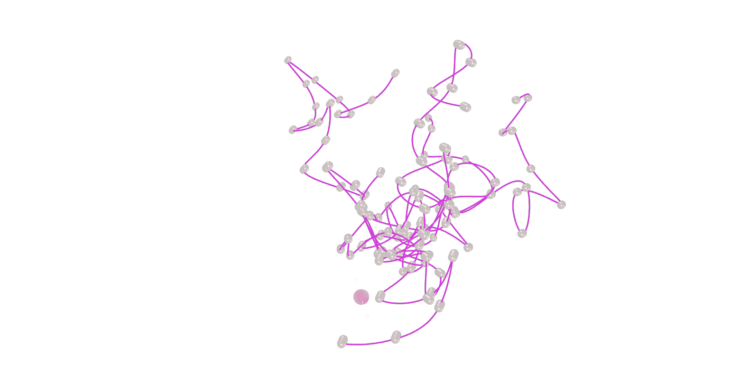

The HoloLens detects several key features to be used in interfacing with the device. In this instance, the fingers – represented here with nodes – appear in HoloLens as a consistent array. Using filters, we can first establish the left and right hands of the user.

From there, we’re able to gather the key nodes of interest by determining their indices.
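As a rough illustration of this filtering step, the sketch below splits one flat list of tracked points into two hands and picks out the fingertips by index. It is written in plain Python rather than as a Grasshopper definition. The joint count per hand, the left-hand-first ordering, and the fingertip indices are all assumptions made for the example, not Fologram's documented layout.

```python
# Hypothetical layout: these constants are assumptions for illustration only.
JOINTS_PER_HAND = 26  # assumed number of tracked joints per hand
FINGERTIP_INDEX = {   # assumed positions of the fingertips within one hand
    "thumb": 5,
    "index": 10,
    "middle": 15,
    "ring": 20,
    "little": 25,
}


def split_hands(points):
    """Split a flat list of joint positions into (left, right) hands.

    Assumes the left hand's joints come first, followed by the right hand's.
    """
    if len(points) != 2 * JOINTS_PER_HAND:
        raise ValueError("expected joints for exactly two hands")
    return points[:JOINTS_PER_HAND], points[JOINTS_PER_HAND:]


def fingertips(hand):
    """Return a dict mapping finger name to its fingertip position."""
    return {name: hand[i] for name, i in FINGERTIP_INDEX.items()}


# Dummy data: 52 points along the x axis, so each point's x equals its index.
points = [(float(i), 0.0, 0.0) for i in range(2 * JOINTS_PER_HAND)]
left, right = split_hands(points)
left_tips = fingertips(left)
right_tips = fingertips(right)
```

Naming the indices once in a lookup table keeps the rest of the logic readable, and only the table needs to change if the real ordering differs.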

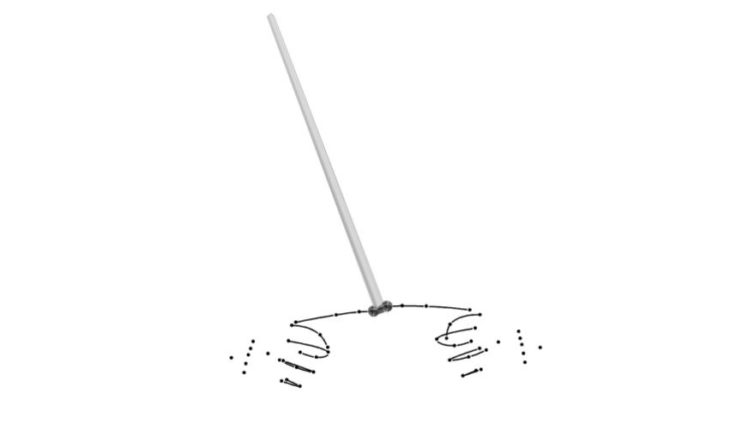

By measuring the distance between the points in 3D space, we can begin to infer certain gestures.

By checking which points are close together and which are far apart, we can identify clear relationships such as a pointing finger or two fingertips touching.
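The distance test can be sketched in a few lines of Python. This is a minimal example and not the Grasshopper definition used in the workshop: the function names, the palm reference point, and the threshold values (in metres) are assumptions chosen for illustration and would need tuning against real tracking data.

```python
import math

# Assumed thresholds in metres; real values would need calibration.
PINCH_THRESHOLD = 0.02   # thumb and index tips closer than this count as touching
EXTENDED_MIN = 0.10      # a fingertip farther than this from the palm is extended
CURLED_MAX = 0.07        # a fingertip closer than this to the palm is curled


def is_pinching(thumb_tip, index_tip, threshold=PINCH_THRESHOLD):
    """Two fingertips touching: their 3D distance falls below a threshold."""
    return math.dist(thumb_tip, index_tip) < threshold


def is_pointing(palm, tips):
    """Pointing finger: index tip far from the palm, other fingers curled in.

    `tips` maps the finger names "index", "middle", "ring" and "little"
    to (x, y, z) positions.
    """
    index_extended = math.dist(palm, tips["index"]) > EXTENDED_MIN
    others_curled = all(
        math.dist(palm, tips[name]) < CURLED_MAX
        for name in ("middle", "ring", "little")
    )
    return index_extended and others_curled


palm = (0.0, 0.0, 0.0)
pointing_hand = {
    "index": (0.15, 0.0, 0.0),
    "middle": (0.04, 0.0, 0.0),
    "ring": (0.04, 0.01, 0.0),
    "little": (0.03, 0.0, 0.0),
}
print(is_pinching((0.0, 0.0, 0.0), (0.01, 0.0, 0.0)))
print(is_pointing(palm, pointing_hand))
```

Using two separate thresholds for "extended" and "curled" leaves a dead band between them, which helps avoid a gesture flickering on and off when a finger hovers near a single cutoff.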

Particle System Simulation

Virtual Object Interaction

Second Iteration

Full scale design tool with dashboard and scaled digital twin

Setup

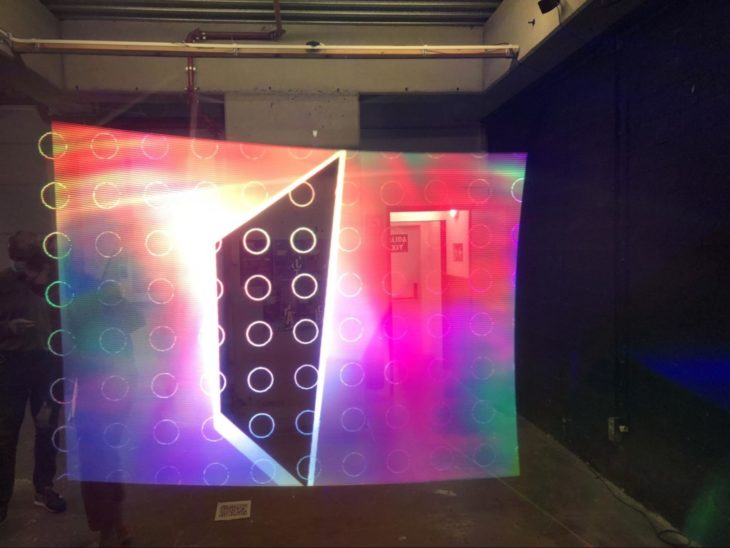

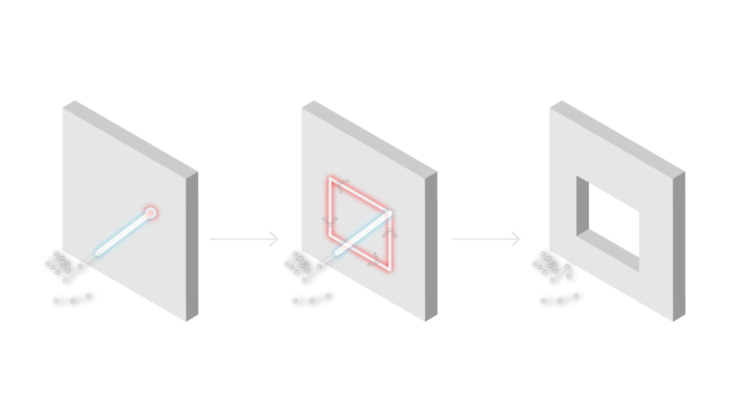

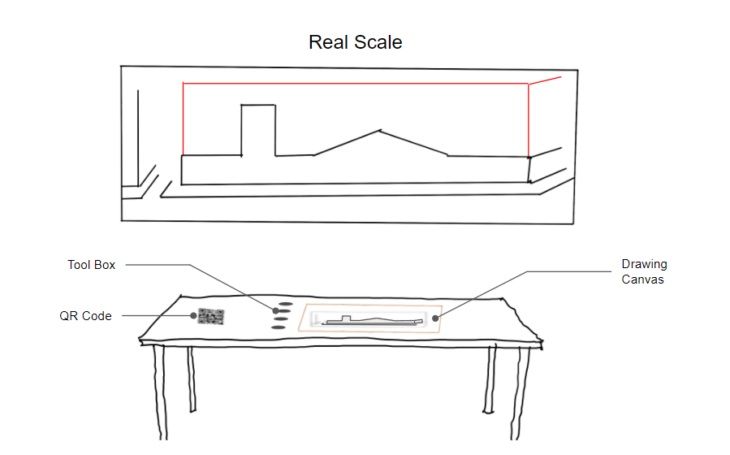

The goal of this project was to allow a user to visualize and manipulate their designs in the real world, whilst also providing an optional interface that would work on a human scale.

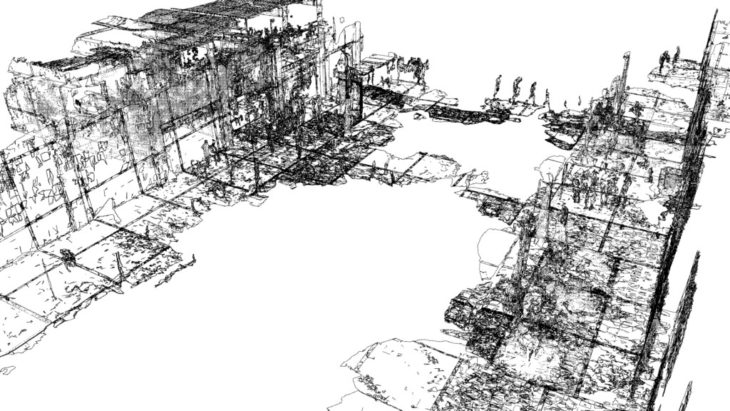

LIDAR scanning

Using the HoloLens's embedded LIDARs, we mapped the space to create a context for our installation.

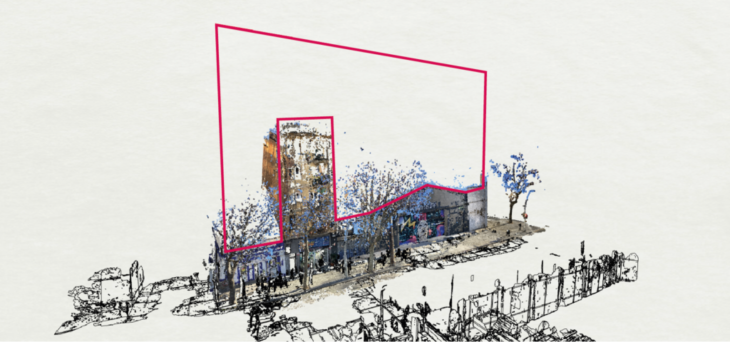

Photogrammetry

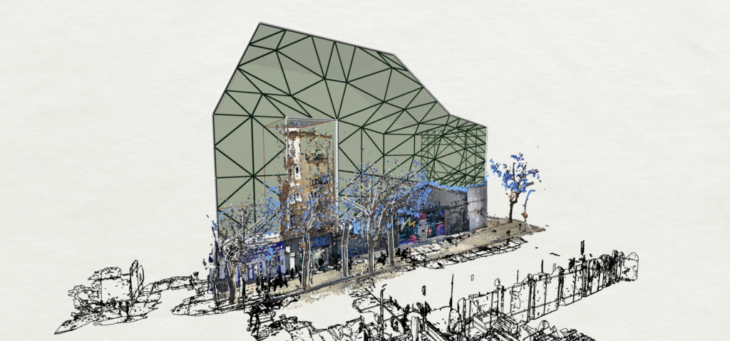

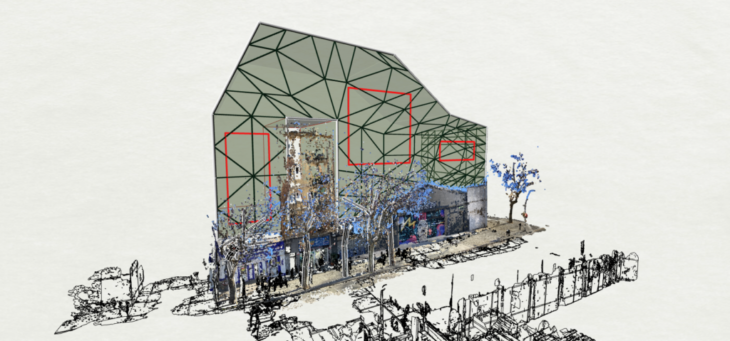

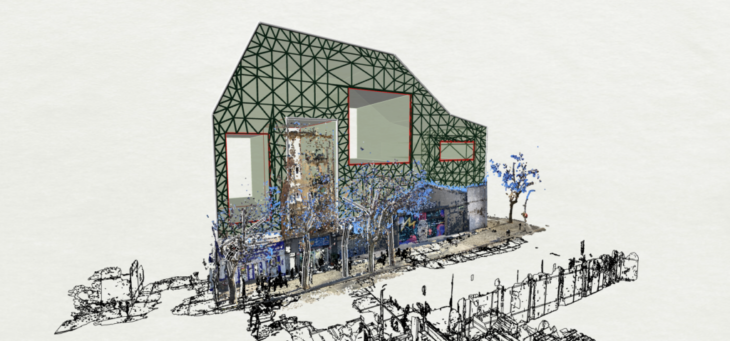

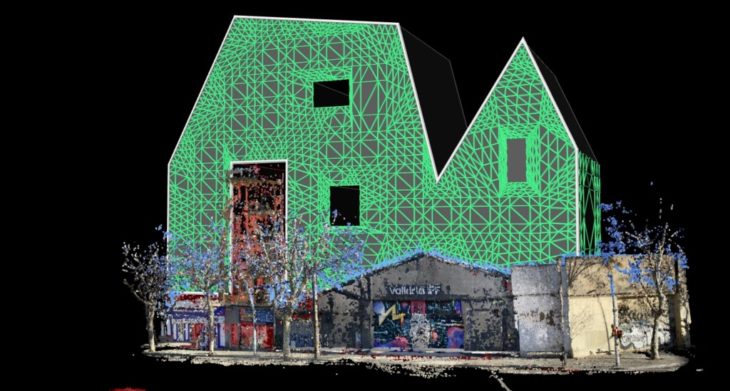

After taking around 100 pictures of the desired building, we used Metashape to assemble them into a single point cloud.

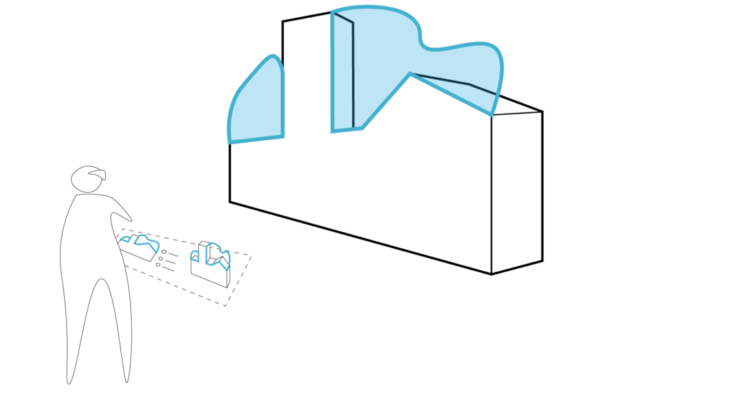

We then created our canvas, or workspace, on the roof of the building. This outline becomes the boundary within which the user can create their addition to the building.

The same outline is also mapped to the table in front of the user, where it serves as a canvas for creating their own roofscape.

Pointing at the canvas on the table, the user adds points, which are automatically projected onto the building in front of them. Because the two canvases are continuously synchronized, the user sees the drawing they create on the table appear in real time, at 1:1 scale, on top of the actual building.
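One way to think about the table-to-roof mapping is as a change of reference frame plus a uniform scale. The Python sketch below assumes each canvas is described by an origin, two perpendicular unit axes, and a width. The `Canvas` class, the `table_to_roof` function, and the sample dimensions are hypothetical and only illustrate the idea; they are not the project's actual Grasshopper definition.

```python
from dataclasses import dataclass


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def mul(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


@dataclass
class Canvas:
    """A planar canvas (hypothetical description used only in this sketch)."""
    origin: tuple   # one corner of the outline, in world coordinates
    x_axis: tuple   # unit vector along the canvas width
    y_axis: tuple   # unit vector along the canvas depth
    width: float    # extent along x_axis, in metres


def table_to_roof(point, table, roof):
    """Map a point drawn on the table canvas to the full-scale roof canvas."""
    scale = roof.width / table.width        # e.g. 20 for a 1:20 table model
    rel = sub(point, table.origin)
    u = dot(rel, table.x_axis) * scale      # local coordinates, scaled up
    v = dot(rel, table.y_axis) * scale
    return add(roof.origin, add(mul(roof.x_axis, u), mul(roof.y_axis, v)))


# Assumed example: a 0.5 m wide table canvas mirrors a 10 m wide roof outline.
table = Canvas((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.5)
roof = Canvas((10.0, 20.0, 30.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), 10.0)
roof_point = table_to_roof((0.25, 0.125, 0.0), table, roof)
```

Because the mapping is recomputed for every point the user adds, the two drawings stay in step without any separate synchronization pass.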

Dashboard

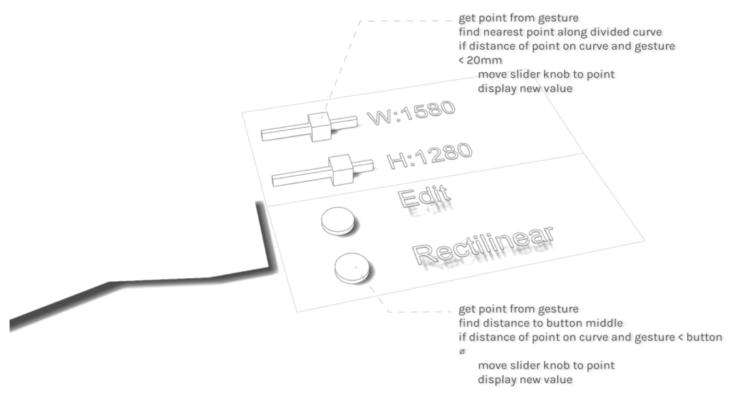

To keep interaction between model and user at an ergonomic scale, a dashboard was constructed using Grasshopper and Fologram.

The dashboard sits on a table, giving the user a connection with the real world whilst interacting with the digital.

A series of buttons and sliders was created to control the various parameters set out in the sections above. Placing these controls in the virtual environment lets the user change the parameters without leaving the model to open a separate menu (which is how Fologram currently works).

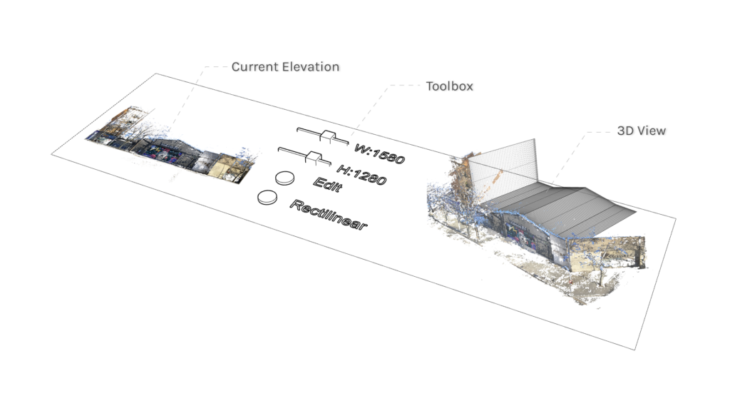

On either side of the control panel, the user has a digital twin of the elevation currently in focus and a 3D model. This gives a choice between interacting with the larger, real-scale digital model and the more manageable scaled digital twin directly in front of the user.


HAD: Holographic Assisted Design is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Masters in Robotics and Advanced Construction in 2021/2022

by:

Students: Alfred Bowles, Andrea Najera, Robert Michael, Shamanth Thenkan

Faculty: Keith Kaseman

Assistant faculty: Daniil Koshelyuk, Will Reynolds