Objective

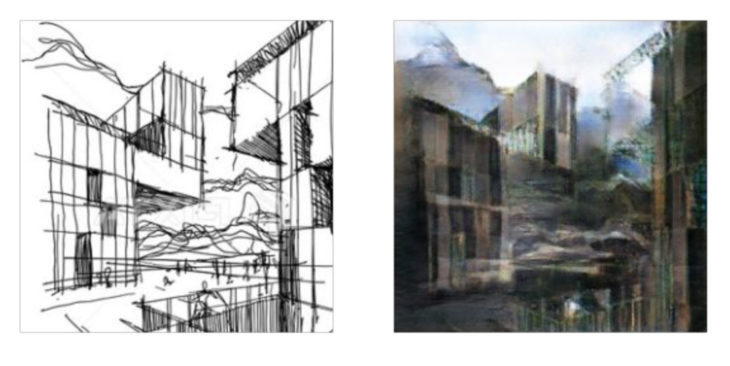

The intersection of architecture and AI-driven design is challenging our notions of creativity and agency. With this project we decided to speculate on the topic of sketching in architecture, to see whether there is a place for a machine to help architects express their ideas. What if a simple, fast sketch can be more than that?

Can AI models inspire?

Method

To explore that question we used pix2pix, a conditional generative adversarial network (cGAN) consisting of two networks that are trained together:

Generator: in a standard GAN, this network receives a vector of random values as input and generates data with the same structure as the training data. In pix2pix the generator is conditioned on an input image (in our case the sketch-like image) rather than on random values alone.

Discriminator: given batches containing observations from both the training data and the generator's output, this network attempts to classify each observation as “real” or “generated”.

We relied on the parameters proposed in the pix2pix paper, since its authors had already applied the model to translation from edges to images. The proposed parameters change the results substantially. Besides the U-Net generator architecture, the loss function plays a major role. Pixel-wise losses used on their own tend to produce blurry images, so the proposed loss combines the GAN objective with the mean absolute error (L1). The largest image size the model has been tested on is 286×286 pixels.
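The combination of the GAN objective with the mean absolute error can be sketched in a few lines. This is a minimal NumPy illustration, not our training code: the function names are hypothetical, the discriminator outputs are assumed to be raw logits, and the weight of 100 on the L1 term is the value proposed in the pix2pix paper.

```python
import numpy as np

LAMBDA_L1 = 100.0  # weight of the L1 term (value proposed in the pix2pix paper)


def bce_with_logits(logits, labels):
    """Numerically stable binary cross-entropy on raw (pre-sigmoid) logits."""
    return float(np.mean(
        np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))
    ))


def generator_loss(disc_logits_on_fake, generated, target, lambda_l1=LAMBDA_L1):
    """GAN term (fool the discriminator) plus weighted mean absolute error."""
    adversarial = bce_with_logits(disc_logits_on_fake, np.ones_like(disc_logits_on_fake))
    l1 = float(np.mean(np.abs(target - generated)))
    return adversarial + lambda_l1 * l1, adversarial, l1


def discriminator_loss(disc_logits_on_real, disc_logits_on_fake):
    """Real pairs should be classified as 1, generated pairs as 0."""
    real = bce_with_logits(disc_logits_on_real, np.ones_like(disc_logits_on_real))
    fake = bce_with_logits(disc_logits_on_fake, np.zeros_like(disc_logits_on_fake))
    return real + fake


# Toy usage with random arrays standing in for images and discriminator outputs.
rng = np.random.default_rng(0)
target = rng.random((1, 64, 64, 3))
generated = rng.random((1, 64, 64, 3))
fake_logits = rng.normal(size=(1, 6, 6, 1))
total, adversarial, l1 = generator_loss(fake_logits, generated, target)
print(f"total={total:.3f} adversarial={adversarial:.3f} l1={l1:.3f}")
```

With a large weight on the L1 term, the generator is pushed to stay close to the target image overall, while the adversarial term rewards sharp, plausible detail.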

Dataset preparation

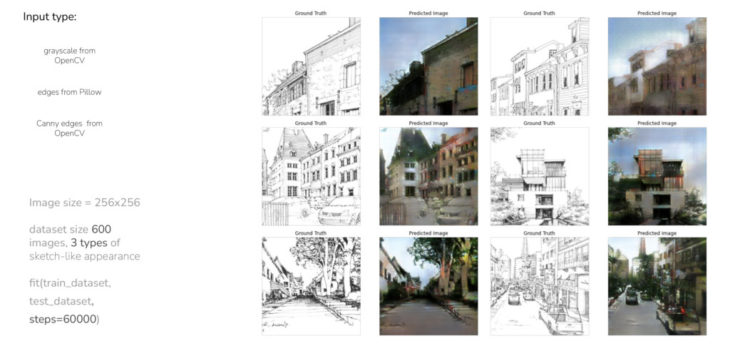

Having chosen the model, we prepared the dataset. We decided to work with street images from a single city, since this offers an interesting case of encoding the atmosphere of a place in an architectural context, and Mapillary proved to be a good source of images. Because we did not have time to draw our street views by hand, we tried several automated translations of the street images into sketch-like ones. We settled on three scenarios: grayscale and Canny edges from OpenCV, and edges from the Pillow library.

Trials

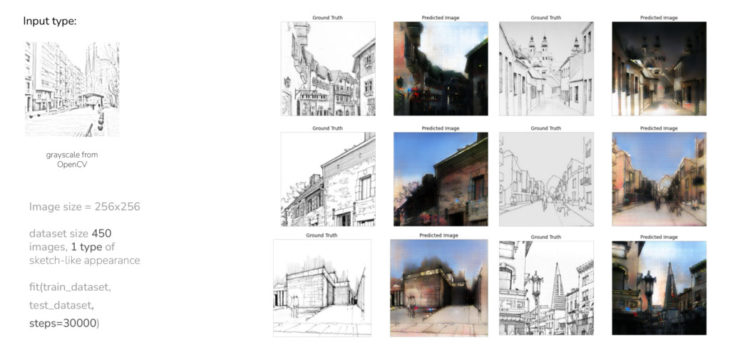

We first tested the grayscale images from OpenCV. This dataset contained around 450 images, and we trained the model for 30,000 epochs. In the predicted images we can see edges that are far from a realistic visualization.

grayscale from OpenCV dataset

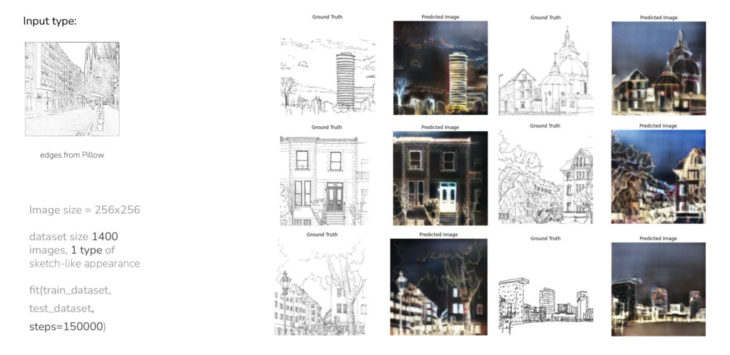

The next trial used edges from the Pillow library. We used a larger dataset and trained for 150,000 epochs, and the result we got was quite unexpected: it looks like a negative of the image. This generated dataset therefore did not reflect hand drawings very well.

edges from Pillow

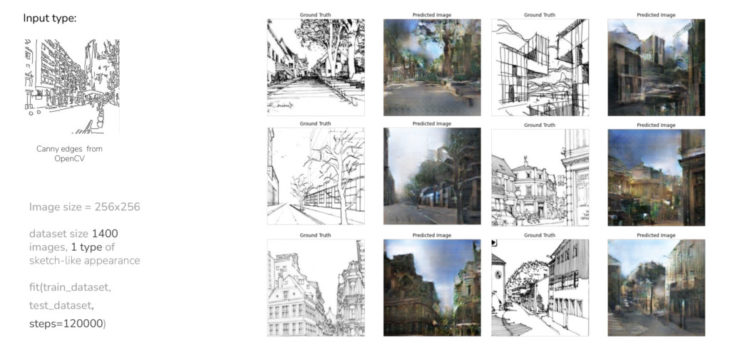

The next generated dataset did not look promising at first but, surprisingly, gave us a better result. Here we lose the edgy quality of our visualizations and get closer to 3D drawings.

canny edges from OpenCV

We also tried a combination of the three datasets shown above, which gave us some interesting outcomes as well. Working with different datasets, we started to develop a feeling for which kind of visual input suits a certain type of hand drawing. With this knowledge we would like to experiment further with the algorithm and see where it takes us.

mixed dataset

Conclusion

Overall, we concluded that machine learning algorithms can already be very useful real-life tools in architectural practice. We think that the creative side of sketching can benefit greatly from the data flow we just presented.

Generated Creativity is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at MaCAD (Masters in Advanced Computation for Architecture & Design) in 2022 by students Erida Bendo and Olga Poletkina, with faculty Oana Taut and assistant faculty Aleksander Mastalski.