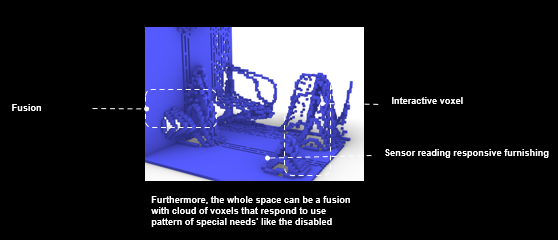

iWGANs Interior Design | Wall-2-Space Voxelization Fusion

Exploring space for the Disabled

Concept

This project addresses interior design at a time when design variations and styles are expanding rapidly and many designers cultivate a distinctive design signature. Interior design, however, is time-consuming: it is a multistep, multi-layered, iterative process of searching for alternatives.

Interior design is a rich context for testing generative adversarial networks (GANs), especially when dealing with 3D space representation. Moreover, it can accommodate creative, augmented wall-to-space design that responds to the needs and uses of disabled people based on readings from movement sensors.

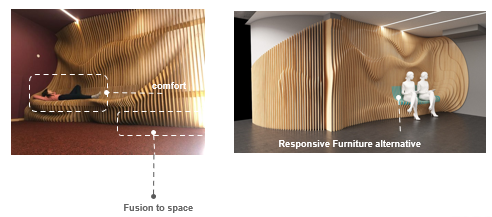

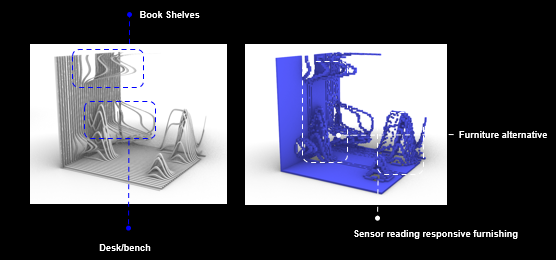

- Augmenting wall and floor design with embedded surfaces that can accommodate the uses normally served by furniture.

- Creating a hybrid-use space that trains the human brain and imitates the natural landscape, which was the first human habitat.

- Adding sensors and interactivity throughout this voxel space to meet the needs of disabled people in interior spaces, matching their uses and guiding their way.

Wall-2-Space: Augmenting multiple spaces

Parametric interior design has mostly been applied to benches and bookshelves, but it could be much more than that. Wall-2-Space explores fusing a furniture-free space and space articulation with a responsive furniture alternative that serves users’ needs, particularly those of people with special needs, by learning from space-use patterns and sensor readings.

The whole space thus evolves into a fusion with a cloud of voxels that responds to the use patterns of people with special needs, such as disabled people. The space voxelization is intended to react to sensor readings as well. This approach can generate more ISD that accommodates usability for disabled people.

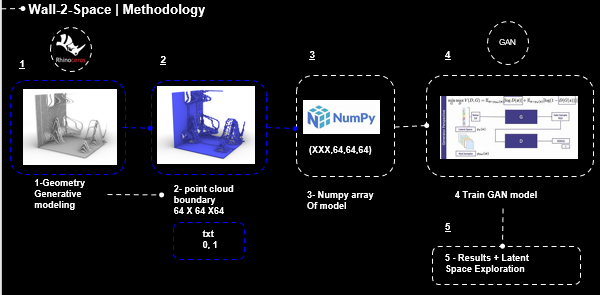

Methodology

The methodology follows five main steps. First, the geometry is generated through Grasshopper (GH) scripting. Second, each generated model is bounded in a 64 × 64 × 64 grid and exported as a txt file of 0s and 1s. Third, these files are converted into a NumPy array. Fourth, the array is used to train the iWGAN model. Finally, the results are examined and the latent space is explored.

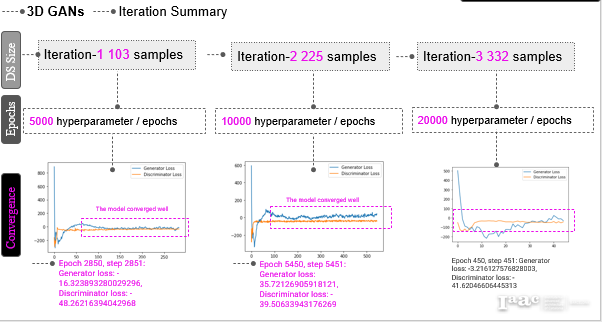

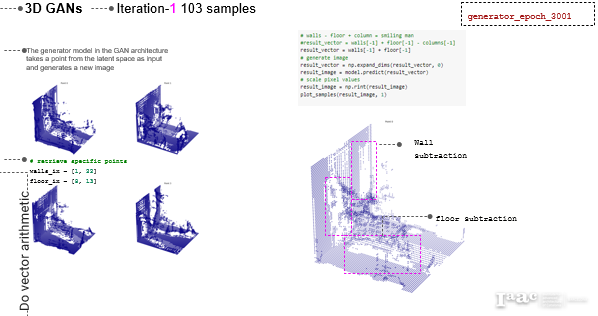

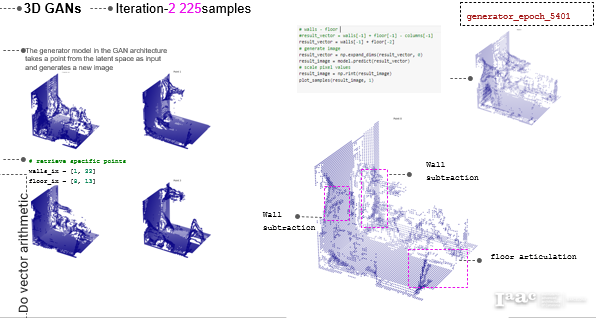

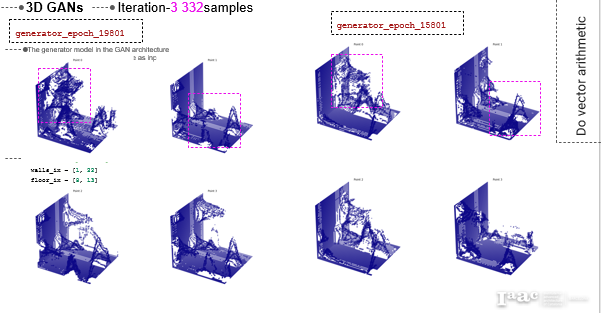

iWGANs Iterations

The project hypothesis is tested over three iterations. The first uses 103 samples with the number of training epochs set to 5,000. The second doubles the dataset and trains for 10,000 epochs. The third triples the original dataset and trains for 20,000 epochs.

Dataset Samples

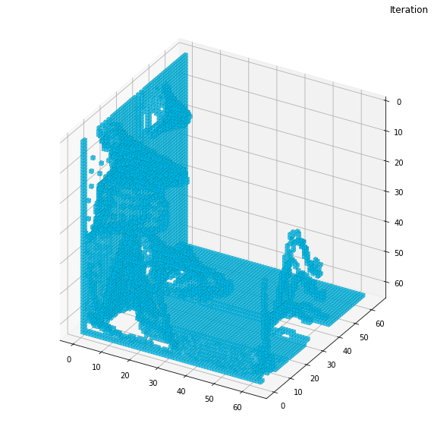

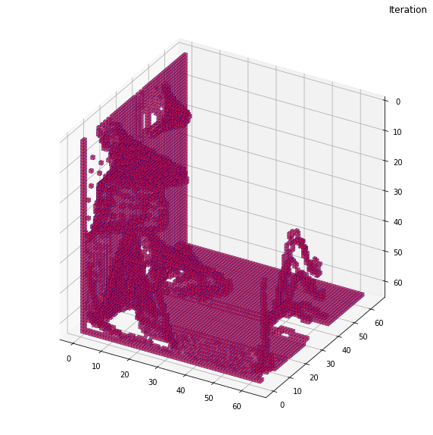

This figure contrasts meshes (in red) with voxels (in blue). The cubic voxel articulation simplifies the flexible organic forms.
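In this project the mesh-to-voxel step happens inside the GH script, which is not shown here. Purely as an illustration, the sketch below marks occupied cells of a cubic grid from a set of sample points. The function name `voxelize_points` and the choice of uniform scaling into the grid are assumptions, not the project's implementation.

```python
import numpy as np


def voxelize_points(points, grid=64):
    """Return a (grid, grid, grid) array of 0/1 occupancy from 3D sample points.

    Hypothetical helper: points are scaled uniformly so that the longest side
    of their bounding box spans the whole grid.
    """
    points = np.asarray(points, dtype=float)
    lower = points.min(axis=0)
    extent = (points.max(axis=0) - lower).max()
    if extent == 0:
        extent = 1.0  # all points coincide; avoid division by zero
    # Map each point to an integer cell index and keep it inside the grid.
    idx = np.floor((points - lower) / extent * grid).astype(int)
    idx = np.clip(idx, 0, grid - 1)
    voxels = np.zeros((grid, grid, grid), dtype=np.uint8)
    voxels[idx[:, 0], idx[:, 1], idx[:, 2]] = 1
    return voxels


# Two opposite corners of a unit cube fall into opposite corner cells.
demo = voxelize_points([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]], grid=4)
```

A denser point sampling of the mesh surface fills more cells; a coarse sampling leaves gaps between occupied voxels.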

Numpy Array Samples

A NumPy array is essential for training the iWGAN model. The txt files exported from the GH script are used to build 64 × 64 × 64 NumPy arrays, which train the model in all three iterations.
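The conversion from txt files to a training array can be sketched as follows. The exact file layout is not given in the text, so this sketch assumes each file holds 64³ values of 0 or 1 separated by whitespace or commas, stored in row-major order; `parse_voxel_text` and `stack_samples` are hypothetical names.

```python
import numpy as np

GRID = 64  # grid resolution stated in the text


def parse_voxel_text(text, grid=GRID):
    """Parse a string of 0/1 tokens into a (grid, grid, grid) uint8 array."""
    tokens = text.replace(",", " ").split()
    values = np.array([int(tok) for tok in tokens], dtype=np.uint8)
    if values.size != grid ** 3:
        raise ValueError(f"expected {grid ** 3} values, got {values.size}")
    if not np.isin(values, (0, 1)).all():
        raise ValueError("voxel files must contain only 0 and 1")
    # Assumed row-major (C order) layout; adjust if the export differs.
    return values.reshape(grid, grid, grid)


def stack_samples(texts, grid=GRID):
    """Stack parsed samples into one (n_samples, grid, grid, grid) float array."""
    return np.stack([parse_voxel_text(t, grid) for t in texts]).astype(np.float32)


# Tiny 2 x 2 x 2 example so the shapes are easy to follow.
sample = parse_voxel_text("0 1 0 1 1 1 0 0", grid=2)
batch = stack_samples(["0 " * 8, "1 " * 8], grid=2)
```

With the real data, each text would come from reading one exported txt file, and the stacked array would have one entry per dataset sample.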

iWGAN Architecture

An Inference Wasserstein Generative Adversarial Network (iWGAN) is adopted for generative ISD, extending the boundaries from space furnishing to space articulation so that both behave as one. Three iterations with different sample sets and hyperparameters are used to improve the results. Because iWGAN learns an encoder and a decoder simultaneously, it is well suited to training on 3D datasets and learning in three-dimensional space. By epoch 19,801, the results resemble the training data with almost 82% accuracy.

GANs are built from a generator and a discriminator. During training, the generator samples random noise and produces an output from it. The output is then passed to the discriminator, which classifies it as either “Real” or “Fake”, depending on how well it can tell one from the other.
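In the Wasserstein family of GANs, the discriminator acts as a critic that outputs an unbounded score instead of a Real/Fake probability, so both losses can be negative. The sketch below shows the standard Wasserstein losses in NumPy. It omits any gradient penalty and the encoder-related terms an iWGAN would add, and the function names are illustrative only, not taken from the project's code.

```python
import numpy as np


def critic_loss(real_scores, fake_scores):
    """Standard Wasserstein critic loss: push real scores up and fake scores down."""
    return float(np.mean(fake_scores) - np.mean(real_scores))


def generator_loss(fake_scores):
    """Standard Wasserstein generator loss: push the critic's fake scores up."""
    return float(-np.mean(fake_scores))


# Illustrative critic scores for a batch of two real and two generated samples.
real = np.array([2.0, 4.0])
fake = np.array([1.0, 3.0])
d_loss = critic_loss(real, fake)
g_loss = generator_loss(fake)
```

Because the scores are unbounded, the loss values are best read relative to each other and over time, not as absolute quality measures.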

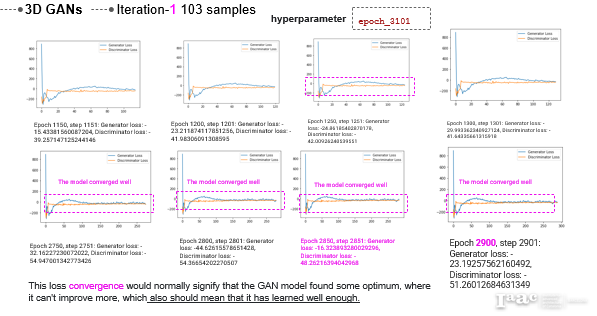

GANs Training Graphs

Iteration 1

This iteration runs successfully to epoch 3101. Epoch 2900 shows the best convergence, with a generator loss of -23 and a discriminator loss of -51, which indicates the need for more training.

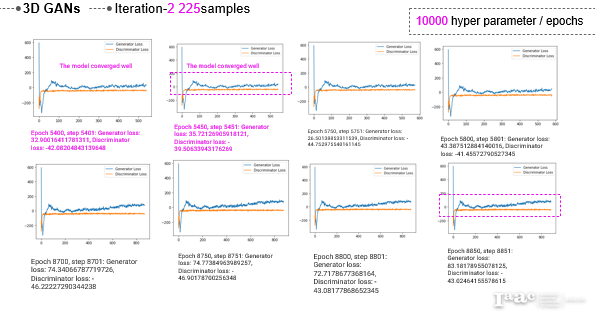

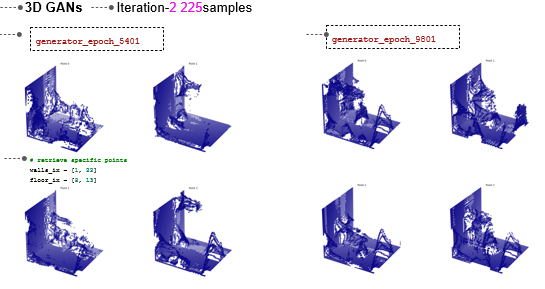

Iteration 2

Iteration 2 runs successfully to epoch 10,000. The best convergence appears at epochs 5400 and 5450: epoch 5400 has a generator loss of 32 and a discriminator loss of -42, while epoch 5450 has a generator loss of 35 and a discriminator loss of -39.

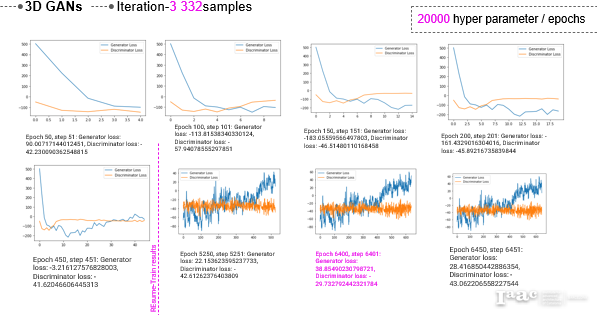

Iteration 3

Finally, Iteration 3 runs successfully to epoch 20,000. As the model trains, it approaches better convergence at several epochs, as explained in the results.

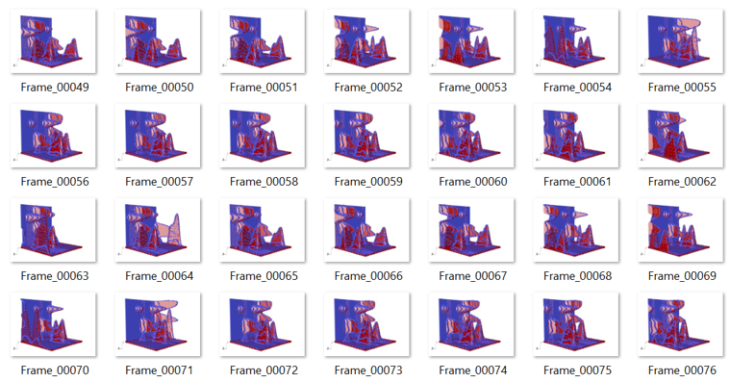

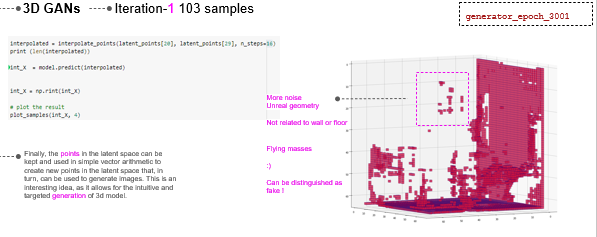

Latent Space Exploration

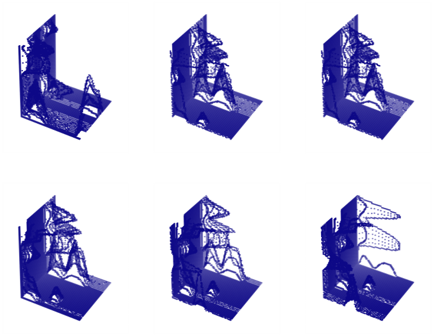

Iteration 1

Results from iteration 1 support the hypothesis that a bigger dataset and more training epochs are essential to improve results. The interpolation between points 8 and 22, shown on the right-hand side, is far from the training samples.
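Latent-space interpolation of this kind can be sketched as a straight line between two latent vectors, where each intermediate vector would then be decoded by the trained generator. The latent dimension of 128 and the names used here are assumptions; the project's own code is not shown in the text.

```python
import numpy as np


def interpolate_latent(z_start, z_end, steps=8):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    z_start = np.asarray(z_start, dtype=float)
    z_end = np.asarray(z_end, dtype=float)
    t = np.linspace(0.0, 1.0, steps)[:, None]  # column of blend weights in [0, 1]
    return (1.0 - t) * z_start + t * z_end


# Two hypothetical latent points, standing in for two numbered sample points.
rng = np.random.default_rng(0)
z_a = rng.standard_normal(128)
z_b = rng.standard_normal(128)
path = interpolate_latent(z_a, z_b, steps=8)
# Each row of `path` would be passed to the trained generator to obtain a voxel grid.
```

The first and last rows reproduce the two endpoints, so the decoded sequence starts and ends at the two chosen samples.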

As shown in the image on the right-hand side, the visualization of epoch 3001 contains floating objects that cannot accommodate any use or support the special needs of the space's users.

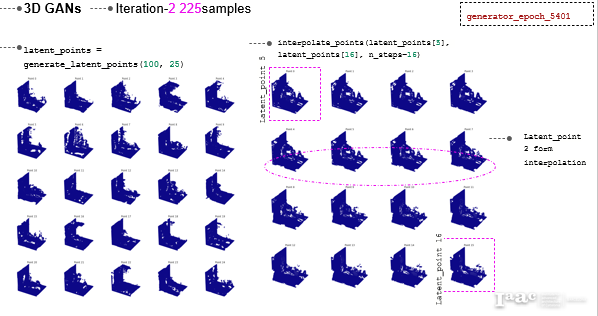

Iteration 2

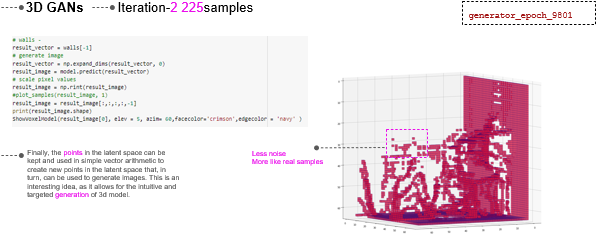

Contrary to what the discriminator and generator losses suggest, epoch 5401 does not give the best result. Many dense masses appear, which block uses and disrupt the space articulation.

Although the visualization of epoch 9801 has no floating masses, the space is still insufficient to accommodate uses and provide functionality. This supports the hypothesis and motivates training the third iteration.

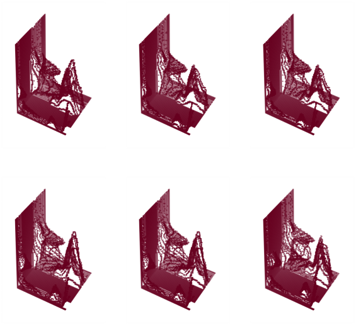

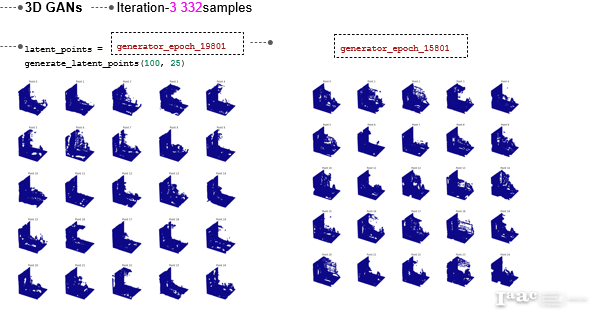

Iteration 3

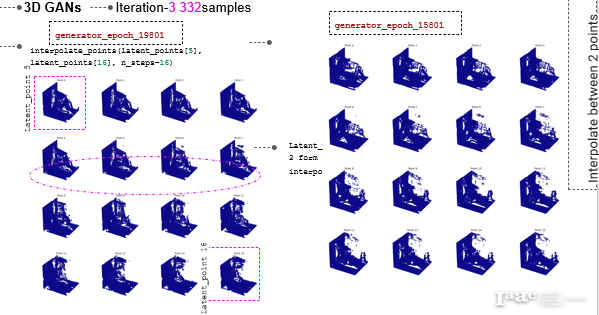

A comparative analysis of two selected epochs, 15801 and 19801, shows better results than the previous two iterations.

Comparing epochs 15801 and 19801 through the interpolation between points 5 and 16 shows a denser space at the last epoch and a clearer one at the middle epoch.

Finally, the visualization of epoch 19801, shown on the right-hand side, gives promising results: the wall articulation resembles that of the training samples, and on the floor, volumetric definitions form, supporting the project's hypothesis.
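The text does not say how resemblance to the training samples is measured. As one possible way to quantify it, the sketch below compares a generated voxel grid with a reference grid using voxel-wise agreement and intersection over union (IoU). Both metrics and the function names are assumptions chosen for illustration, not the project's evaluation method.

```python
import numpy as np


def voxel_agreement(generated, reference):
    """Fraction of cells where two 0/1 grids hold the same value."""
    generated = np.asarray(generated, dtype=bool)
    reference = np.asarray(reference, dtype=bool)
    return float(np.mean(generated == reference))


def voxel_iou(generated, reference):
    """Intersection over union of the occupied cells of two 0/1 grids."""
    generated = np.asarray(generated, dtype=bool)
    reference = np.asarray(reference, dtype=bool)
    union = np.logical_or(generated, reference).sum()
    if union == 0:
        return 1.0  # both grids are empty, so they match trivially
    return float(np.logical_and(generated, reference).sum() / union)


# Tiny flat example: one of the two occupied reference cells is reproduced.
gen = np.array([1, 0, 0, 0])
ref = np.array([1, 1, 0, 0])
agreement = voxel_agreement(gen, ref)
iou = voxel_iou(gen, ref)
```

Agreement is dominated by empty cells in sparse grids, so IoU is usually the stricter of the two measures.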

Vector Arithmetic

Iteration 1

The vector arithmetic in iteration 1 shows many subtractive patches and a lack of homogeneous voxels, as seen in the images below.

Iteration 2

The vector arithmetic gives noticeably better results in the second iteration, although missing voxels still form void patches.

A comparative analysis of vector arithmetic between epochs 5401 and 9801 shows better articulation and more homogeneous results at the later epoch.

Iteration 3

As a second confirmation of the hypothesis, iteration 3 shows the best results so far. In the second image from the left on the first row, highly detailed voxels appear compared with the corresponding ones on the right side.

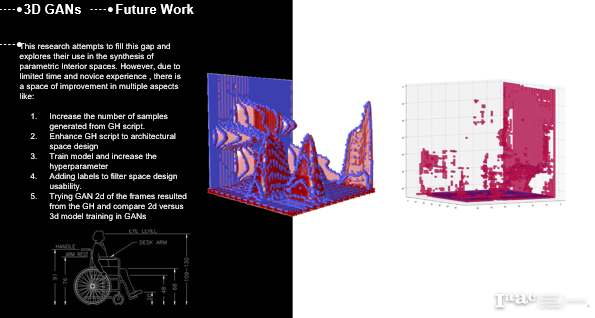
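The vector arithmetic discussed in this section can be sketched as adding and subtracting latent vectors before decoding the result. The sketch assumes the generator returns continuous values that are thresholded into voxels; the threshold of 0.5 and the helper names are illustrative assumptions.

```python
import numpy as np


def latent_arithmetic(z_base, z_remove, z_add):
    """Combine latent vectors as z_base - z_remove + z_add."""
    z_base = np.asarray(z_base, dtype=float)
    z_remove = np.asarray(z_remove, dtype=float)
    z_add = np.asarray(z_add, dtype=float)
    return z_base - z_remove + z_add


def binarize(volume, threshold=0.5):
    """Turn continuous generator output into a 0/1 voxel grid (assumed threshold)."""
    return (np.asarray(volume) >= threshold).astype(np.uint8)


# Small hand-made vectors so the arithmetic is easy to check.
z_new = latent_arithmetic([1.0, 2.0], [1.0, 0.0], [0.0, 1.0])
voxels = binarize([0.2, 0.5, 0.9])
```

Void patches like those described above would show up after thresholding as runs of zeros inside otherwise occupied regions.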

Future Work

This project explores iWGAN with 3D datasets, following a planned methodology. The results support the hypothesis and raise further questions about hyperparameters and dataset labeling. Future work will therefore expand the dataset and increase the number of training epochs, while adding labels to meet special-needs usability and integrate functionality for disabled people. It will also investigate 2D versus 3D GANs through a comparative analysis of their results.

Raised Questions:

Is 3D GAN a reliable approach for architectural design?

Is iWGAN a promising approach to implementing 3D space voxelization?

Can computational methods accommodate the needs and use patterns of disabled people?

Can interactive space be rewarded through 3D GAN spaces?

How far can designers deploy generative design in contexts beyond luxury spaces?

Wall-2-Space is a project of IaaC, Institute for Advanced Architecture of Catalonia, developed in the Artificial Intelligence in Architecture (AIA) module of the Master in Advanced Ecological Buildings in 2022 by:

Students: Sammar Z. Allam.

Faculty: Oana Taut.

Faculty Assistant: Aleksander Mastalsk.

Faculty director: David Leon.