The Objective

The objective of our seminar exercise was to explore basic Image Classification of an Architecture Style Dataset using Machine Learning and convolutional neural networks (CNNs).

Even though the original paper classifies the dataset with a Multinomial Latent Logistic Regression method, we wanted to test how a basic CNN would perform on it.

Multinomial logistic regression is used when the dependent variable is categorical with more than two unordered levels (i.e. more than two discrete outcomes). It generalizes binary logistic regression, which chooses between two outcomes, to any number of classes by replacing the sigmoid with the softmax function.
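As a minimal sketch of the idea (plain Python, with made-up weights for two features and three hypothetical classes; this is the plain multinomial model, not the paper's latent variant), multinomial logistic regression computes one linear score per class and turns the scores into probabilities with softmax:

```python
import math

def softmax(scores):
    """Turn a list of raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(x, weights, biases):
    """Multinomial logistic regression: one linear score per class, then softmax.

    x: feature vector, weights: one weight vector per class, biases: one per class.
    """
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
              for w, b in zip(weights, biases)]
    return softmax(scores)

# Toy example: 2 features, 3 hypothetical style classes, invented weights.
x = [1.0, 2.0]
weights = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
biases = [0.0, 0.1, 0.0]
probs = predict_proba(x, weights, biases)
predicted_class = probs.index(max(probs))  # class with the highest probability
```

With only two classes, softmax over the scores `[0, z]` gives class 1 the probability `1 / (1 + exp(-z))`, exactly the sigmoid of binary logistic regression.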

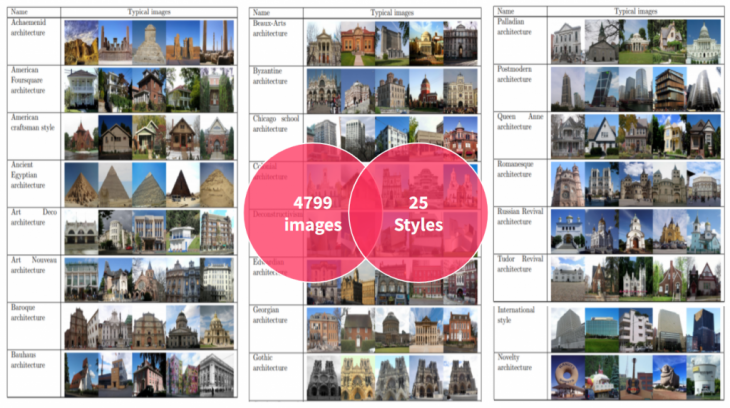

The Data Set

Originally assembled by researchers in 2014 (Xu, Z. (2014) Architectural Style Classification using Multinomial Latent Logistic Regression, ECCV), the dataset has 25 folders with 4799 images, one folder per architectural style from around the world.

The dataset can be found online on Kaggle at:

https://www.kaggle.com/dumitrux/architectural-styles-dataset
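Because each style lives in its own folder, the folder name can serve as the label. A minimal sketch, assuming the archive is unzipped so that images sit directly inside one sub-folder per style (the local path in the usage comment is hypothetical):

```python
from pathlib import Path, PurePath

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}

def label_images(paths):
    """Pair each image path with an integer label taken from its parent folder.

    Non-image files are skipped; class indices follow the sorted folder names.
    """
    images = [PurePath(p) for p in paths
              if PurePath(p).suffix.lower() in IMAGE_SUFFIXES]
    class_names = sorted({p.parent.name for p in images})
    index = {name: i for i, name in enumerate(class_names)}
    samples = [(p, index[p.parent.name]) for p in sorted(images)]
    return class_names, samples

# Usage (hypothetical local folder after unzipping the Kaggle archive):
# class_names, samples = label_images(Path("architectural-styles-dataset").glob("*/*"))
```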

Project Methodology

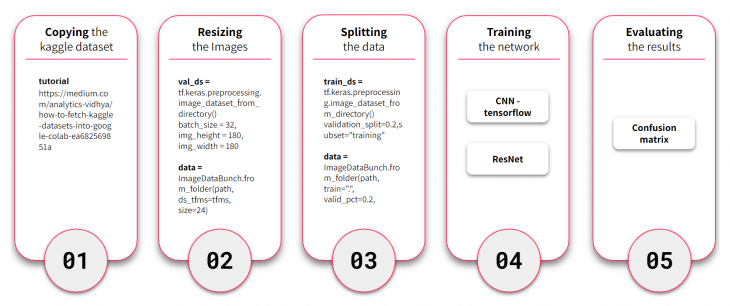

Copying the dataset from Kaggle requires a few basic steps. To run the notebook shared here on your own machine, follow the workflow described in this post:

https://medium.com/analytics-vidhya/how-to-fetch-kaggle-datasets-into-google-colab-ea682569851a

For the next tasks we took two approaches: a TensorFlow CNN and a ResNet network. We resized the images, split them into training and validation sets, structured the model, trained it, and captured the results.
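In TensorFlow, resizing and splitting are commonly done together with `tf.keras.utils.image_dataset_from_directory` and its `image_size`, `validation_split`, `subset` and `seed` arguments. The plain-Python sketch below only shows the idea behind a reproducible split; the 20% fraction and the seed are illustrative assumptions, not the notebook's values, and the split is not stratified by class.

```python
import random

def train_val_split(samples, val_fraction=0.2, seed=42):
    """Shuffle (path, label) pairs reproducibly and split them into train/validation."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(samples)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # same seed -> same split every run
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

# Hypothetical samples: 100 images spread over 25 style labels.
samples = [(f"img_{i}.jpg", i % 25) for i in range(100)]
train, val = train_val_split(samples)
```

Fixing the seed matters because the validation set must never leak into training between runs.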

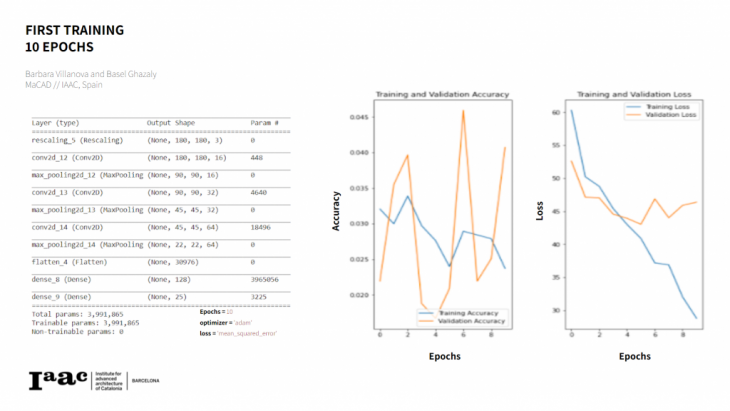

First CNN training

Our first approach used a basic model consisting of three convolution blocks, each with a max pooling layer and ReLU activations. (The pooling layer reduces the spatial dimensions of the input volume for the next layers.) Two factors made this model ineffective: the use of the wrong loss function, and the complexity of classifying architecture into 25 different styles, which differs from standard classification tasks because of the rich inter-class relationships between styles.
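To make one "convolution block" concrete, here is a single-channel, plain-Python sketch of convolution, ReLU and 2x2 max pooling. Real Keras `Conv2D` and `MaxPooling2D` layers work on many channels with learned kernels; the hand-picked edge kernel here is only an illustration.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, as in CNN layers), one channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Zero out negative activations."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Keep the largest value of each non-overlapping 2x2 window (halves height and width)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

def conv_block(image, kernel):
    """One block: convolution -> ReLU -> 2x2 max pooling."""
    return max_pool2x2(relu(conv2d(image, kernel)))

# A 6x6 horizontal ramp and two edge detectors pointing in opposite directions.
ramp = [[float(j) for j in range(6)] for _ in range(6)]
right_edge = [[-1, 0, 1]] * 3
left_edge = [[1, 0, -1]] * 3
```

A 6x6 input shrinks to 4x4 after the 3x3 convolution and to 2x2 after pooling, which is the spatial reduction mentioned above; the left-edge response is negative everywhere, so ReLU zeroes it.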

The confusion matrix shows the poor predictive capability of the network.
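A confusion matrix counts, for each true class (row), how often each class was predicted (column); a good classifier concentrates its counts on the diagonal. A small plain-Python sketch with invented labels for three classes:

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows = true class, columns = predicted class, cells = counts."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def per_class_recall(matrix):
    """Fraction of each true class predicted correctly (diagonal / row sum)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]

# Invented example: a model that predicts class 2 most of the time.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 2, 2, 2, 2, 2]
cm = confusion_matrix(y_true, y_pred, 3)
```

Here class 2 looks perfect only because the model piles most predictions into that column, which the per-class recall of the other classes exposes.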

Improving the Data set and NN Architecture

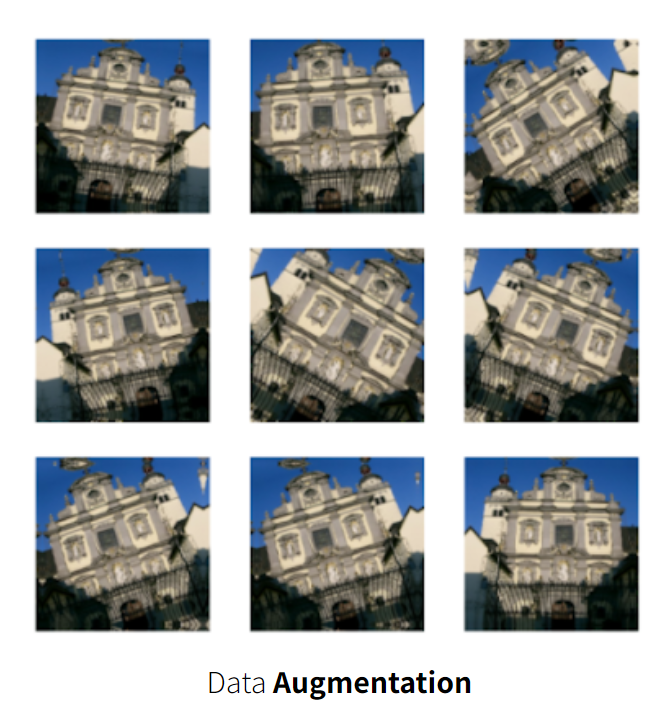

The first improvement was Data Augmentation. With a few lines of code we can mirror, rotate and crop the original samples to create “fake” new data while still maintaining the same quality.
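Recent TensorFlow versions provide augmentation layers such as `tf.keras.layers.RandomFlip` and `tf.keras.layers.RandomRotation`. The plain-Python sketch below shows what mirroring, rotating and cropping do to a tiny image stored as nested lists; the random flip-then-crop recipe in `augment` is an assumption for illustration, not the notebook's pipeline.

```python
import random

def hflip(img):
    """Mirror left-right; a mirrored facade keeps its architectural style."""
    return [row[::-1] for row in img]

def rot90(img):
    """Rotate 90 degrees clockwise (exact on a pixel grid; small angles are more realistic)."""
    return [list(row) for row in zip(*img[::-1])]

def random_crop(img, size, rng):
    """Cut a random size x size window out of the image."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]

def augment(img, crop_size, rng):
    """Return a randomly mirrored and cropped copy; the class label stays the same."""
    out = hflip(img) if rng.random() < 0.5 else img
    return random_crop(out, crop_size, rng)

# A 4x4 "image" whose pixel values are 0..15.
img = [[r * 4 + c for c in range(4)] for r in range(4)]
sample = augment(img, 2, random.Random(0))
```

Each call yields a different variant of the same labelled photo, which is why augmentation helps against overfitting on a small dataset.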

Another technique to reduce overfitting is to introduce Dropout to the network, a form of regularization.

When you apply Dropout to a layer, it randomly drops out (by setting the activation to zero) a number of the layer's output units during training. Dropout takes a fraction as its rate, such as 0.1, 0.2 or 0.4, meaning that 10%, 20% or 40% of the layer's output units are dropped at random. At inference time no units are dropped.
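Keras' `Dropout` layer uses "inverted" dropout: the surviving units are scaled by `1 / (1 - rate)` so the expected activation stays the same, and nothing happens outside training. A plain-Python sketch of that behaviour:

```python
import random

def dropout(activations, rate, training, rng):
    """Inverted dropout on a list of activations.

    Training: each unit is zeroed with probability `rate`, survivors are scaled
    by 1 / (1 - rate). Inference: the input passes through unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * scale for a in activations]

rng = random.Random(0)
units = [1.0] * 10_000
dropped = dropout(units, rate=0.2, training=True, rng=rng)
```

About 20% of the units come out as zero and the rest as 1.25, so the mean stays close to 1.0.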

Let’s create a new neural network using layers.Dropout, then train it using the augmented images.

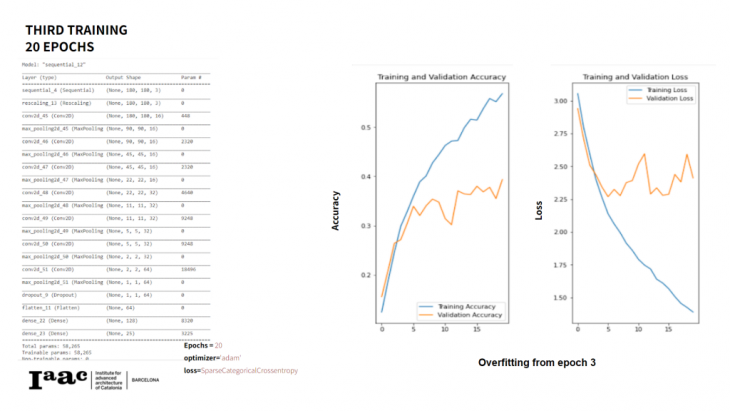

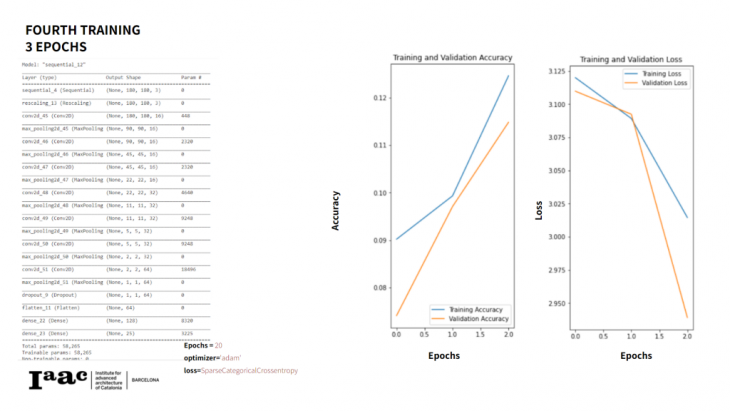

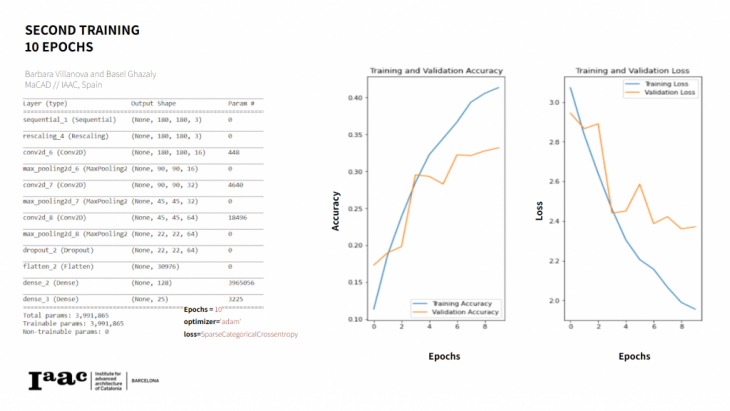

Second CNN training

Our second attempt added a Dropout layer to the layers used before, which helps prevent overfitting, and corrected the loss function from mean squared error to sparse categorical cross-entropy. Even so, the model still overfits from epoch 3 onward. The accuracy curve looks better, but the accuracy stays under 0.35. Other attempts that deepened the CNN with more blocks of layers, on the reasoning that each layer can learn a different feature, gave worse results, with validation accuracies around 0.10.
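In Keras this loss is `tf.keras.losses.SparseCategoricalCrossentropy` (with `from_logits=True` if the last layer outputs raw scores). The plain-Python sketch below, with invented probabilities for three classes, compares it with mean squared error on a one-hot target for a confident wrong prediction:

```python
import math

def sparse_categorical_crossentropy(label, probs):
    """-log of the probability given to the true class; `label` is an integer index."""
    eps = 1e-12  # avoid log(0)
    return -math.log(max(probs[label], eps))

def mean_squared_error(label, probs):
    """MSE between the predicted probabilities and a one-hot encoding of `label`."""
    one_hot = [1.0 if i == label else 0.0 for i in range(len(probs))]
    return sum((p - t) ** 2 for p, t in zip(probs, one_hot)) / len(probs)

probs = [0.98, 0.01, 0.01]                        # network is almost sure of class 0
ce_wrong = sparse_categorical_crossentropy(2, probs)  # true class is 2: ~4.6
mse_wrong = mean_squared_error(2, probs)              # same mistake: ~0.65
ce_right = sparse_categorical_crossentropy(0, probs)  # true class is 0: ~0.02
```

Cross-entropy grows without bound as the true class's probability approaches zero, while MSE on probabilities stays bounded, so confident mistakes are penalized much more strongly by cross-entropy.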

We tried other tests deepening the network, but it only accentuated the overfitting.

Even if we stop the training a little before overfitting, around epoch 3 as in this example, the results improve somewhat but are still not good enough to predict reliably.
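Keras automates this with `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)`. The plain-Python sketch below shows the rule such a callback applies; the loss values and `patience=2` are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch whose weights an early-stopping rule would keep.

    Training stops after `patience` consecutive epochs without the validation
    loss improving by more than `min_delta`; the best epoch seen is returned.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Invented validation losses that start rising after epoch 3.
losses = [2.9, 2.5, 2.3, 2.4, 2.6, 2.8]
best = early_stopping_epoch(losses)
```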

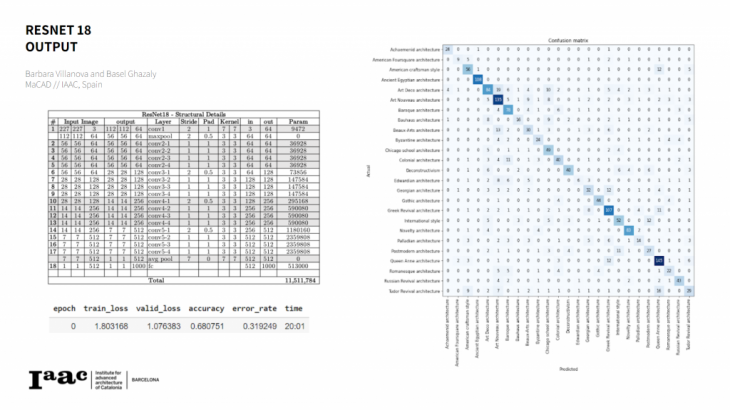

ResNet Layer

To improve the classification results we needed another network architecture, since deeper CNN architectures were hard or impossible to train because of how the backpropagation algorithm behaves in them (vanishing/exploding gradients). Among the architectures we considered, we chose the Residual Network architecture because we had access to existing code that uses it.
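A toy scalar illustration of why depth hurts: by the chain rule, the gradient reaching the first layer is a product of one local derivative per layer. The sketch assumes a chain of sigmoid units with the same weight and input everywhere, a simplification chosen because the numbers are easy to follow (the CNN above used ReLU).

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum is 0.25, reached at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

def chained_gradient(depth, pre_activation=0.0, weight=1.0):
    """Gradient at the input of `depth` stacked sigmoid units (product of local derivatives)."""
    grad = 1.0
    for _ in range(depth):
        grad *= weight * sigmoid_grad(pre_activation)
    return grad

vanishing = chained_gradient(10)            # 0.25 ** 10, below one millionth
exploding = chained_gradient(3, weight=8.0) # (8 * 0.25) ** 3 = 8.0 and growing
```

Factors below 1 shrink the gradient exponentially with depth, and factors above 1 blow it up.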

Residual Network (ResNet) is a way to handle the vanishing gradient problem in very deep CNNs. It adds shortcut (skip) connections that let the signal bypass some layers, based on the observation that a very deep network should not have a higher training error than its shallower counterpart, since the extra layers could in principle learn the identity mapping.

ResNet's residual blocks use these shortcut connections to avoid the vanishing gradients that usually appear when adding layers to a regular CNN. This is very important when the network must learn many complex features, as with Architecture Styles.
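A minimal sketch of a residual block, assuming dense layers on a short vector instead of the convolutions and batch normalization a real ResNet uses: the block computes `relu(F(x) + x)`, so if `F` learns nothing the input still passes through, and the shortcut contributes a `1 +` term to each block's derivative.

```python
def dense(x, weights):
    """Plain linear layer; `weights` is a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v):
    return [max(0.0, a) for a in v]

def residual_block(x, w1, w2):
    """y = relu(F(x) + x) with F = dense -> relu -> dense; the shortcut adds x back."""
    fx = dense(relu(dense(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])

def gradient_through_stack(depth, local_grad, residual):
    """Scalar chain-rule product over `depth` blocks whose branch derivative is local_grad.

    With a shortcut each block contributes d(x + F(x))/dx = 1 + F'(x) instead of F'(x).
    """
    per_block = (1.0 + local_grad) if residual else local_grad
    return per_block ** depth

identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
passthrough = residual_block([1.0, 2.0], identity, zeros)  # F(x) = 0, so output = x
plain = gradient_through_stack(20, 0.1, residual=False)    # 0.1 ** 20, vanishes
skip = gradient_through_stack(20, 0.1, residual=True)      # 1.1 ** 20, about 6.7
```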

Using the ResNet CNN, the results after a single epoch were significantly better: the validation accuracy reached 0.68 without any tuning.

Next steps would be training the model with a deeper ResNet (one with more layers), and possibly exploring other networks such as Inception and VGG.

A comparative study of CNN architectures for Image Classification of an Architecture Style Dataset is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed at the Masters in Advanced Computation for Architecture and Design in 2021 by students Barbara Villanova and Basel Ghazaly, and faculty Stanislas Chaillou and Oana Taut.