“Given the necessity and difficulty of detecting rust in complex heavy-industry environments, our program proposes a solution for automated rust detection and mapping.”

This project demonstrates how drones and machine learning can be used to detect rust inside factories.

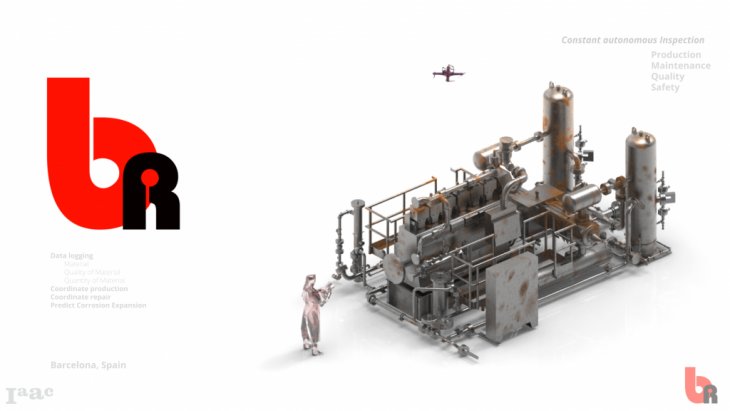

Here we have the overall concept of our project. First, there is the field in which we offer our service: in heavy industry it is common to find complex steel structures, and visual inspection of their structural elements becomes tedious. This environment creates a common problem: rust in steel. This leads to the service we offer: autonomous drones for inspection.

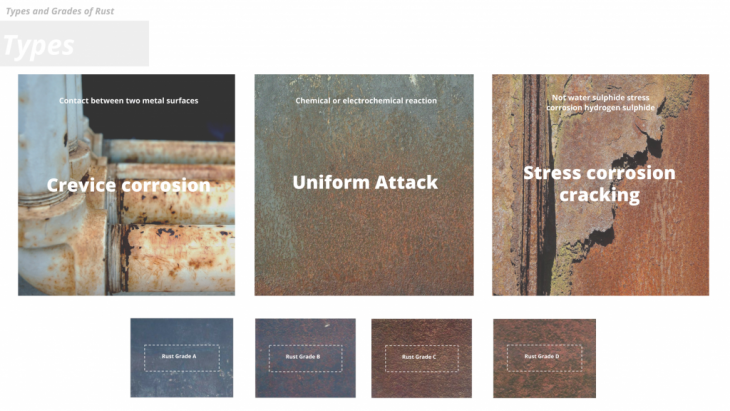

Rust is categorized into three main groups: crevice corrosion, uniform corrosion and stress corrosion cracking.

Each of these is further graded from A to D depending on its deformation and depth of attack.

Here we see the effects of corrosion in industry. On the left is an example: the Chevron oil refinery had an oil spill in 2012 due to corrosion in its pipes. This ended in a fire, putting human lives at risk and causing major damage to the facilities. On the right is a diagram of the overall economic cost of corrosion, which is considerable worldwide.

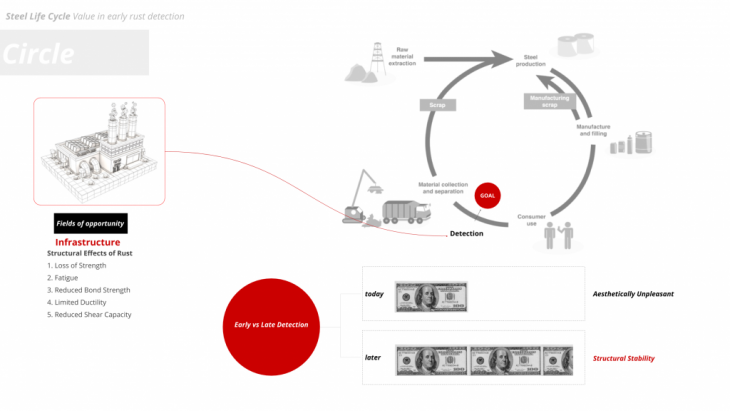

In this diagram we can see the life cycle of steel, from raw material through production, use and recycling. Our project focuses on corroded steel structures currently in use, before the material fully needs recycling or repair. It is economically much more beneficial to repair facilities before corrosion affects their structural stability.

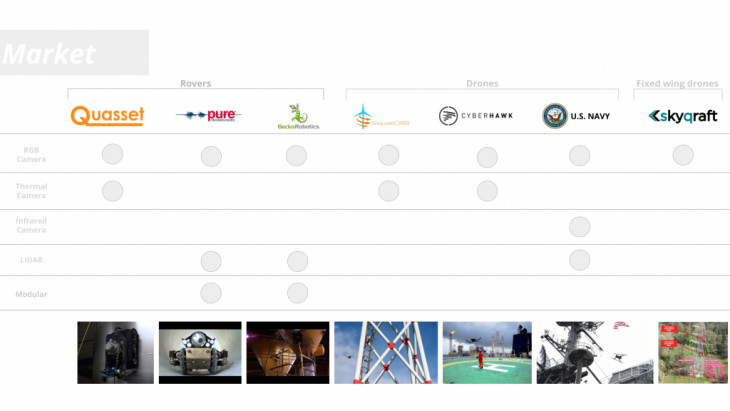

We studied and compared the current market in the field: the different types of vehicles companies use and the sensors they attach to them.

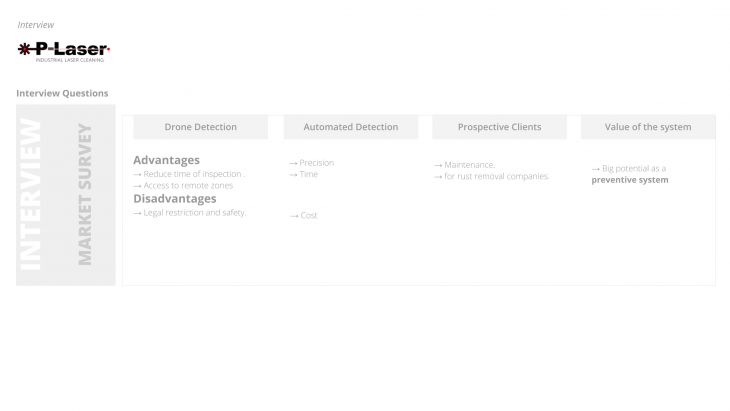

They all provide a 3D model interface as feedback to the customer, but only Skycraft supports autonomous flight; the rest rely on manual flight by pilots.

To get a clearer idea of the current market, we contacted a company in the field of rust removal. The most valuable points from our conversation were:

- Drones can ease access to hard-to-reach steel structures.

- We could offer to build a preventive maintenance system for facilities.

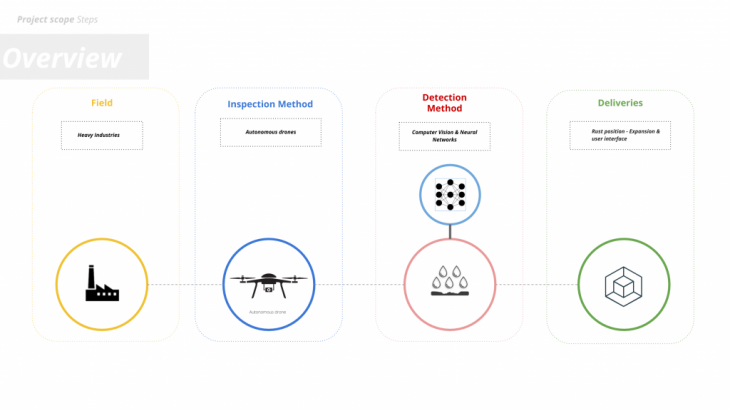

Our field of work is heavy industry; our inspection method relies on drones; for detection we use different computer vision methods; and we deliver a 3D model and a rust expansion interface to the user.

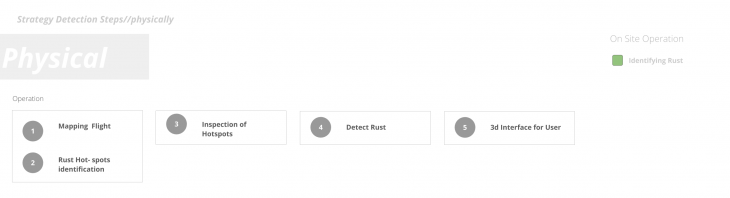

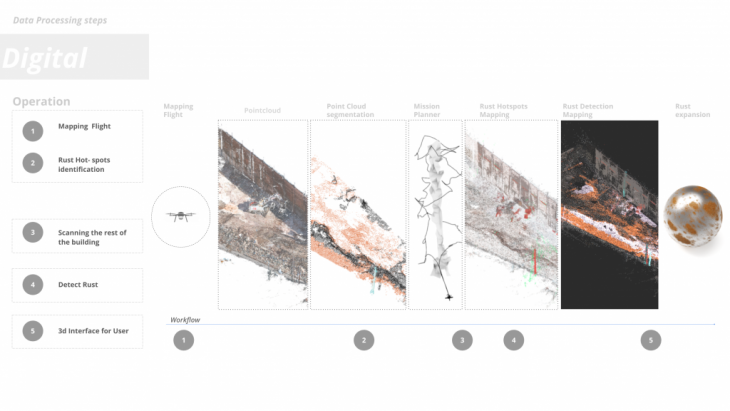

This figure shows how the tasks are arranged in the physical environment. The first step is a manual mapping flight that generates a point cloud of the facility. Next, the hotspot areas where rust is most likely to be found are marked in the point cloud. An autonomous inspection flight then takes place at these hotspots to collect detailed information on the location of rust. After all the needed information has been collected, the data are processed and published in a 3D interface.

Furthermore, here we see the data processing diagram in relation to the physical workflow. The analysis starts by generating a point cloud and isolating the key areas likely to contain rust. Then we find the optimal way of scanning those areas, next we precisely map the location of rust in 3D, and finally we offer an estimate of rust expansion.

PointCloud Generation & Segmentation

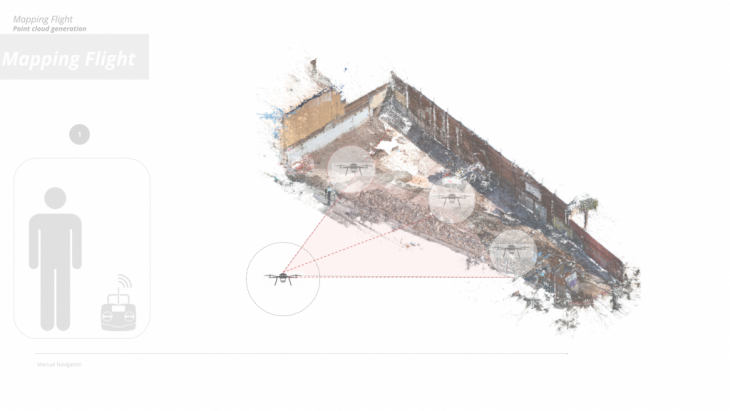

As mentioned before, the first step of our process is the manual mapping flight, during which the initial point cloud is generated.

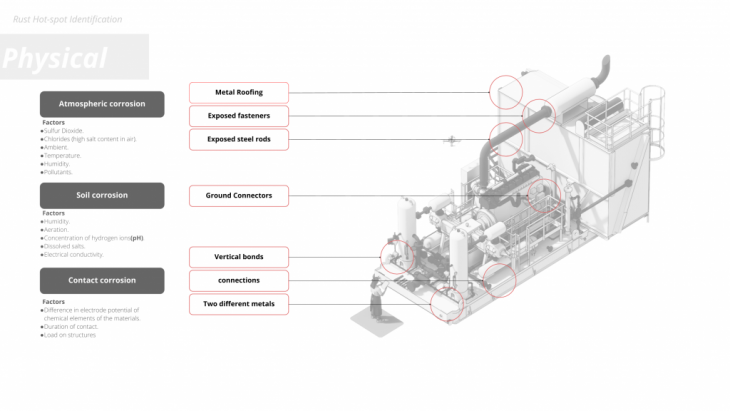

In the second step, we identify the key rust hotspot areas according to environmental conditions and physical position in space. These range from large steel surfaces to connections between elements, which are exposed to different types of corrosion.

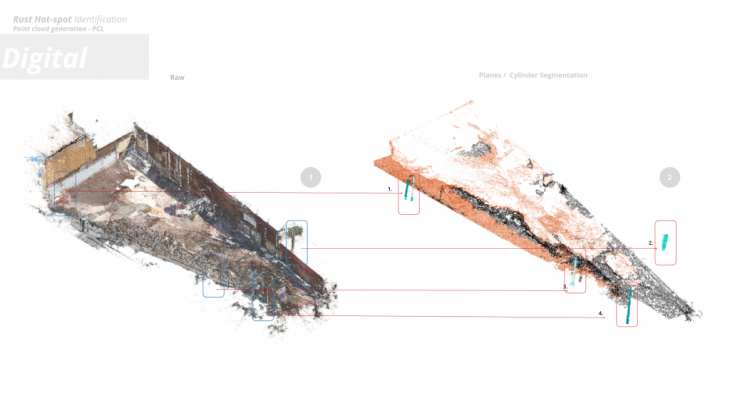

After identifying those positions in space, we segment the initial point cloud to isolate these geometries.
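The segmentation algorithm itself is not specified here. As a hedged illustration of the idea, the sketch below uses RANSAC, a common technique for pulling planar elements out of a point cloud, implemented with NumPy. The function names, distance threshold and iteration count are assumptions. Cylinders would need their own model (an axis and a radius) and are not covered.

```python
import numpy as np

def ransac_plane(points, distance_threshold=0.02, iterations=500, seed=0):
    """Fit one plane to an (N, 3) float array with RANSAC.

    Returns (normal, d, inlier_mask) for the plane n.x + d = 0 that has the
    most points closer than distance_threshold."""
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    best_normal, best_d = None, None
    for _ in range(iterations):
        # Sample three distinct points and build the plane through them.
        a, b, c = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(b - a, c - a)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal /= norm
        d = -normal @ a
        # Inliers: points whose perpendicular distance to the plane is small.
        mask = np.abs(points @ normal + d) < distance_threshold
        if mask.sum() > best_mask.sum():
            best_mask, best_normal, best_d = mask, normal, d
    return best_normal, best_d, best_mask

def extract_planes(points, max_planes=2, min_inliers=100, **kwargs):
    """Greedily peel off up to max_planes planes.

    Returns (list of inlier point arrays, remaining points)."""
    remaining = points
    planes = []
    for _ in range(max_planes):
        if len(remaining) < 3:
            break
        _, _, mask = ransac_plane(remaining, **kwargs)
        if mask.sum() < min_inliers:
            break
        planes.append(remaining[mask])
        remaining = remaining[~mask]
    return planes, remaining
```

Each call peels the largest remaining plane off the cloud, so repeated calls separate elements such as a floor and a wall. The leftover points would then be passed on to other shape models.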

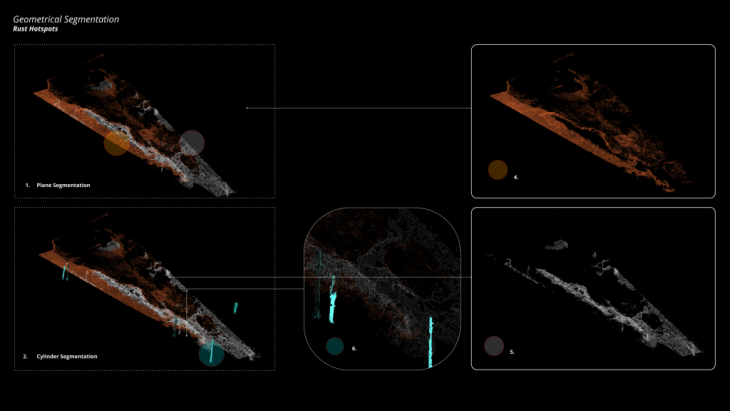

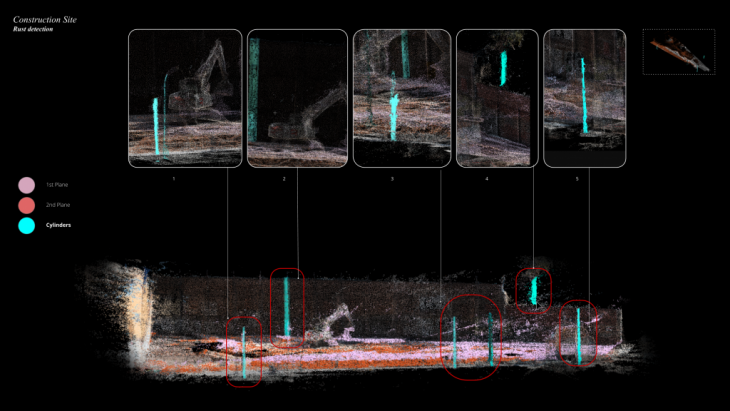

To test our process, we generated a point cloud of an easily accessible construction site and ran our segmentation algorithm on it. As a result, we obtained 2 planes and 5 cylinders.

In this figure, the 2 planes are shown in orange and white, and the 4 cylinders we identified are shown in blue.

Some more close-ups of the test, with an elevation at the bottom of the page.

INSPECTION

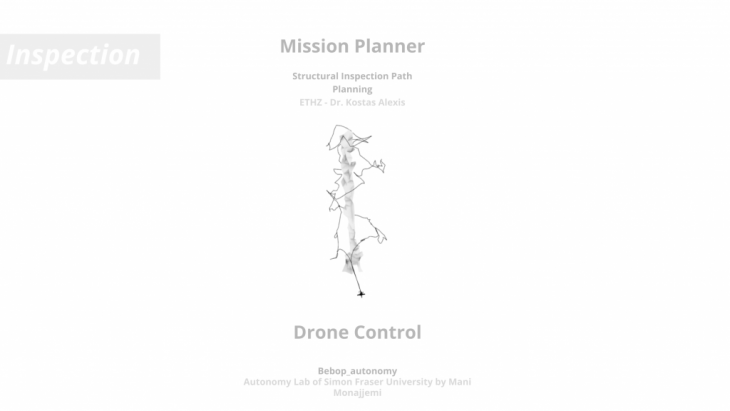

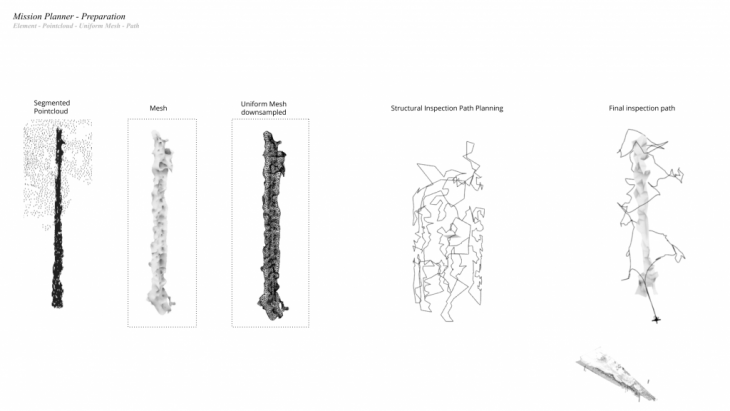

For the autonomous inspection of the “hotspots”, a mission planner is needed to generate a path that allows the drone to photograph every surface of the object in question.

A controller is also needed to send continuous commands to the drone so that it follows this path.

The mission planner we implemented is the “Structural Inspection Path Planner” developed by Dr. Kostas Alexis and his team at ETHZ. The controller was developed by the Autonomy Lab of Simon Fraser University, with additional scripts by Soroush Garivani of IaaC and our own research.

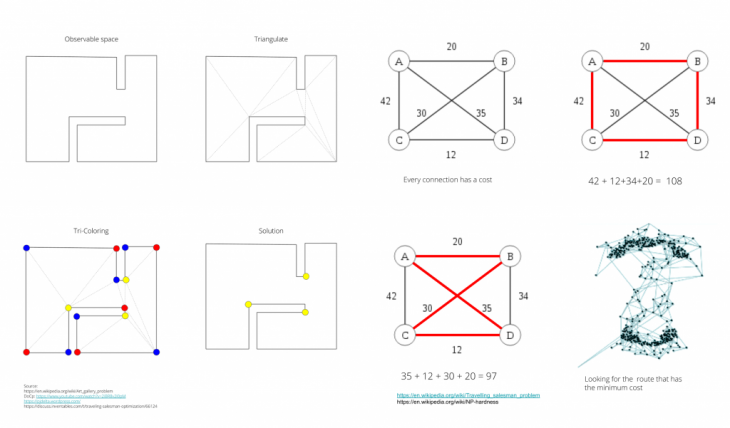

First, an introduction to the mission planner. The mission planner needs the environment formatted as an OctoMap, and the object to inspect needs to be a down-sampled uniform mesh. The algorithm is split into two steps. The first looks for the smallest set of viewpoints around the mesh from which the drone can see every face of the mesh. The second generates the shortest path through these viewpoints. These two steps are based on two well-known mathematical problems: the Art Gallery Problem and the Traveling Salesman Problem.
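The planner's own viewpoint sampling is not reproduced here. As a simplified stand-in for the coverage step, the sketch below treats viewpoint selection as a set-cover problem and greedily picks candidate viewpoints until every mesh face is seen. The visibility rule (a face is seen if it is within range and faces the viewpoint, with occlusion ignored), the 3 m range and all names are assumptions.

```python
import numpy as np

def visible_faces(viewpoint, centroids, normals, max_range=3.0):
    """Hypothetical visibility test: a face is seen if its centroid lies within
    max_range of the viewpoint and its normal points toward the viewpoint.
    Occlusion by other geometry is ignored in this sketch."""
    to_view = viewpoint - centroids
    dist = np.linalg.norm(to_view, axis=1)
    facing = np.einsum("ij,ij->i", to_view, normals) > 0
    return set(np.flatnonzero(facing & (dist <= max_range)).tolist())

def greedy_viewpoints(candidates, centroids, normals, max_range=3.0):
    """Greedy set cover: repeatedly take the candidate that sees the most
    still-uncovered faces. Returns (chosen indices, faces left uncovered)."""
    coverage = [visible_faces(c, centroids, normals, max_range) for c in candidates]
    uncovered = set(range(len(centroids)))
    chosen = []
    while uncovered:
        best = max(range(len(candidates)), key=lambda i: len(coverage[i] & uncovered))
        gained = coverage[best] & uncovered
        if not gained:
            break  # remaining faces are not visible from any candidate
        chosen.append(best)
        uncovered -= gained
    return chosen, uncovered
```

Greedy set cover does not guarantee the smallest possible set of viewpoints, but it is a simple, standard approximation; the chosen viewpoints would then be ordered by the path step.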

The simplest way to solve the Traveling Salesman Problem is to assign a cost to each connection between each pair of points, then iterate through all possible paths connecting all the points, looking for the path with the lowest cost.
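The brute-force approach described above can be sketched in a few lines of Python. This is an illustration, not the planner's own solver. It searches for the shortest open path from a fixed starting viewpoint, using straight-line distance as the cost; adding the return leg to the start would turn it into a closed tour. The function names are hypothetical.

```python
import itertools
import math

def path_length(order, points):
    """Total Euclidean length of visiting points in the given order (open path)."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:]))

def brute_force_path(points, start=0):
    """Try every ordering of the remaining points and keep the cheapest.
    This takes O(n!) time, so it is only practical for a handful of viewpoints."""
    others = [i for i in range(len(points)) if i != start]
    best_order, best_cost = None, math.inf
    for perm in itertools.permutations(others):
        order = (start, *perm)
        cost = path_length(order, points)
        if cost < best_cost:
            best_order, best_cost = order, cost
    return list(best_order), best_cost
```

For example, `brute_force_path([(0, 0), (3, 0), (1, 0), (2, 0)])` returns the order `[0, 2, 3, 1]` with cost `3.0`, visiting the collinear points from left to right.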

This process becomes increasingly difficult as the number of points grows, because the number of possible paths grows factorially. An interesting further study in our project would be to assign a cost to each transition between path segments. A drone has specific flight properties: sharp turns cost more time and energy, whereas smooth curves are more efficient.
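To illustrate the proposed extension, the sketch below adds a turning penalty to the plain path length. The cost model (path length plus a weight times the sum of turn angles) and the default weight are assumptions, not a measured model of drone time or energy use.

```python
import math

def turn_angle(p, q, r):
    """Angle in radians between segment p->q and segment q->r (0 = straight on)."""
    v1 = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
    v2 = (r[0] - q[0], r[1] - q[1], r[2] - q[2])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # repeated point: no defined direction, treat as no turn
    cos = sum(a * b for a, b in zip(v1, v2)) / (n1 * n2)
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error

def path_cost_with_turns(order, points, turn_weight=1.0):
    """Hypothetical cost: path length plus turn_weight * sum of turn angles."""
    pts = [points[i] for i in order]
    length = sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))
    turns = sum(turn_angle(a, b, c) for a, b, c in zip(pts, pts[1:], pts[2:]))
    return length + turn_weight * turns
```

With `turn_weight=0` this reduces to plain path length. A larger weight makes a search over orderings prefer routes with gentler turns.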

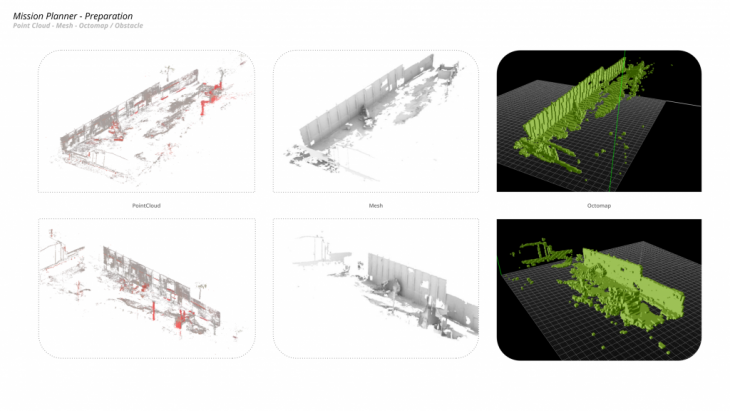

To take into account the existing environment in which the inspection will take place, the point cloud from chapter (X.x) needs to be processed into an OctoMap. OctoMap is a 3D mapping framework based on octrees.

An octree is a data structure for representing 3D space in which each node divides its cube into eight smaller cubes. By converting the point cloud first into a mesh and then into an OctoMap, we get a voxelized representation of the environment, where each voxel is either occupied or empty.

If a voxel is occupied, there is some kind of structure inside that space; if it is empty, the drone is free to fly through it.
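OctoMap itself is a C++ library that stores occupancy probabilities. To show only the underlying idea, the sketch below is a minimal Python octree that recursively splits a cube into eight octants down to a fixed voxel size and marks leaf voxels as occupied. The class names and parameters are illustrative. There is no probabilistic update, and unexplored space is simply reported as free.

```python
import numpy as np

class OctreeNode:
    __slots__ = ("children", "occupied")

    def __init__(self):
        self.children = None  # becomes a list of 8 OctreeNode once split
        self.occupied = False

class Octree:
    """Minimal occupancy octree over the cube [origin, origin + size)^3.
    Leaf voxels have edge length size / 2**max_depth."""

    def __init__(self, origin, size, max_depth):
        self.origin = np.asarray(origin, dtype=float)
        self.size = float(size)
        self.max_depth = max_depth
        self.root = OctreeNode()

    def _contains(self, p):
        return bool(np.all(p >= self.origin) and np.all(p < self.origin + self.size))

    @staticmethod
    def _octant(p, center):
        # One bit per axis (bit 0 = x, bit 1 = y, bit 2 = z); set = upper half.
        return (int(p[0] >= center[0])
                | (int(p[1] >= center[1]) << 1)
                | (int(p[2] >= center[2]) << 2))

    def _descend(self, p, create):
        """Walk from the root to the leaf containing p. Without create, return
        None as soon as the path reaches an unsplit node (unexplored = free)."""
        node, lower, size = self.root, self.origin.copy(), self.size
        for _ in range(self.max_depth):
            if node.children is None:
                if not create:
                    return None
                node.children = [OctreeNode() for _ in range(8)]
            half = size / 2.0
            idx = self._octant(p, lower + half)
            # Move the lower corner into the chosen octant.
            lower = lower + half * np.array([idx & 1, (idx >> 1) & 1, (idx >> 2) & 1])
            size = half
            node = node.children[idx]
        return node

    def insert(self, point):
        """Mark the leaf voxel containing point as occupied."""
        p = np.asarray(point, dtype=float)
        if not self._contains(p):
            return False
        self._descend(p, create=True).occupied = True
        return True

    def is_occupied(self, point):
        p = np.asarray(point, dtype=float)
        if not self._contains(p):
            return False
        leaf = self._descend(p, create=False)
        return leaf is not None and leaf.occupied
```

Only the branches that contain points are ever split, which is what keeps an octree compact compared with a dense voxel grid of the same resolution.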

For this process we used Agisoft and Binvox.

To speed up the inspection, we had already segmented the environment into geometries that are more likely to have rust (see chapter (x.x)). We call these geometries “hotspots”: for example beams, columns and connections.

From the segmentation we get a point cloud of each hotspot, which we then process into a mesh. For the algorithm to work efficiently, the mesh has to meet some requirements: its triangular faces need sides of similar length, and each face must be entirely inspectable from multiple viewpoints. This gives the algorithm more options to iterate through.

To achieve this, we tried different processes, such as ACVD and Kangaroo in Grasshopper. Both gave good results, but for us Grasshopper was easier to use.
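The requirement that triangles have sides of similar length can be checked numerically. The sketch below computes, for each face, the ratio of its longest to its shortest edge (1.0 for an equilateral triangle) and flags faces above a threshold. The threshold of 2.0 and the function names are assumptions, not values from the project.

```python
import numpy as np

def edge_length_ratios(vertices, faces):
    """For each triangle, return longest edge / shortest edge.
    Assumes no degenerate (zero-length) edges."""
    v = np.asarray(vertices, dtype=float)
    f = np.asarray(faces, dtype=int)
    a, b, c = v[f[:, 0]], v[f[:, 1]], v[f[:, 2]]
    edges = np.stack([np.linalg.norm(b - a, axis=1),
                      np.linalg.norm(c - b, axis=1),
                      np.linalg.norm(a - c, axis=1)], axis=1)
    return edges.max(axis=1) / edges.min(axis=1)

def flag_irregular_faces(vertices, faces, max_ratio=2.0):
    """Indices of faces whose edge-length ratio exceeds a hypothetical max_ratio."""
    return np.flatnonzero(edge_length_ratios(vertices, faces) > max_ratio)
```

Running this on a remeshed hotspot before planning gives a quick check of whether the remeshing step produced the uniform triangles the planner expects.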

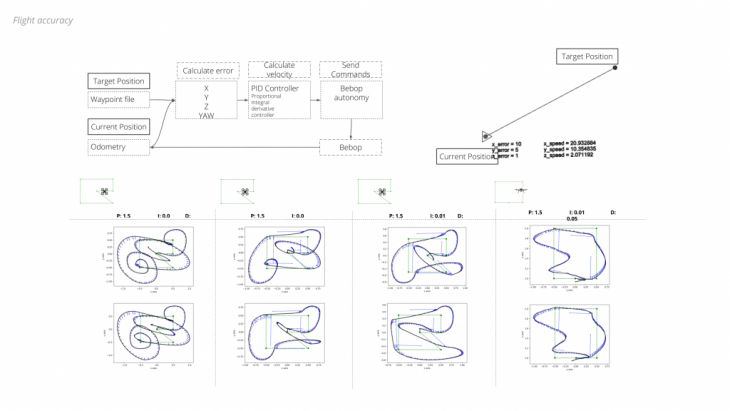

bebop_autonomy is a ROS driver for Parrot Bebop drones, developed by the Autonomy Lab of Simon Fraser University. It lets the user connect directly to the drone to send and receive data.

In addition to the driver, Soroush Garivani added a position control script that sends commands to change the drone's position. In our research we added functionality to this position control.

The script mainly receives two inputs. One is a waypoint file containing the path we generated with the mission planner.

The other is the position of the drone, which we read from the drone's odometry.
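The actual format of the waypoint file and the logic of the position control script are not described here. As a hypothetical sketch, the class below hands out the current target waypoint and moves on to the next one once the odometry position is within a tolerance. The plain-text "x y z per line" format, the 0.2 m tolerance and all names are assumptions.

```python
import math

def parse_waypoints(text):
    """Parse a hypothetical plain-text format: one 'x y z' waypoint per line."""
    return [tuple(float(v) for v in line.split()) for line in text.splitlines() if line.strip()]

class WaypointFollower:
    """Hypothetical helper: returns the waypoint to fly to and advances once
    the drone's position is within `tolerance` metres of it."""

    def __init__(self, waypoints, tolerance=0.2):
        self.waypoints = [tuple(map(float, w)) for w in waypoints]
        self.tolerance = tolerance
        self.index = 0

    @property
    def done(self):
        return self.index >= len(self.waypoints)

    def target(self, position):
        """Given the current (x, y, z) from odometry, return the next target,
        or None once every waypoint has been reached."""
        while not self.done and math.dist(position, self.waypoints[self.index]) <= self.tolerance:
            self.index += 1
        return None if self.done else self.waypoints[self.index]
```

The returned target would be fed to the position controller together with the current odometry position on every control cycle.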

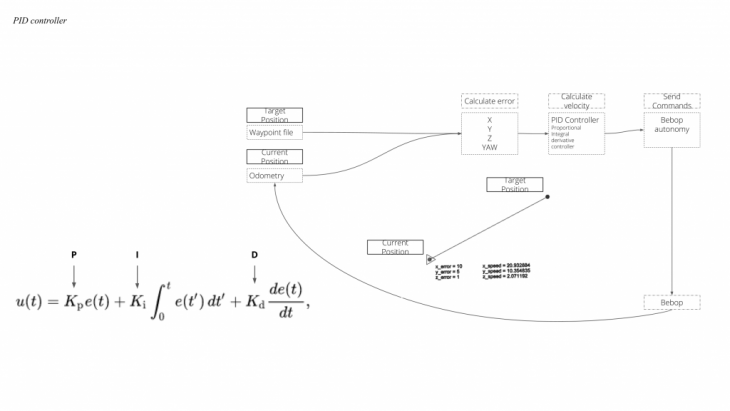

At the heart of the control is the position controller, which compares the drone's current position to the target position. From this error, velocity commands are calculated using a PID controller and sent to the drone.

A PID (proportional-integral-derivative) controller is a control loop that is commonly used in many industrial applications.

As explained before, it calculates the difference between the current position and the target position. This error is translated into velocity commands using a proportional, an integral and a derivative gain. These gains need tuning, for which it is best to have an external reference measurement of the drone's position; for the drone, a 3D tracking system such as HTC Vive is helpful.
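A minimal single-axis PID loop of the kind described above can be sketched as follows. This is not the code of the Bebop position control script. The gains, the output clamp and the names are placeholders, and as the text notes, real gains need tuning against an external position reference.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class PID:
    """Single-axis PID that turns a position error into a velocity command."""
    kp: float
    ki: float
    kd: float
    output_limit: float = 1.0  # hypothetical clamp on the velocity command
    _integral: float = field(default=0.0, repr=False)
    _prev_error: Optional[float] = field(default=None, repr=False)

    def update(self, error: float, dt: float) -> float:
        self._integral += error * dt  # accumulated error (I term)
        # Rate of change of the error (D term); zero on the first call.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        command = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, command))

def velocity_command(controllers, current, target, dt):
    """Apply one PID per axis to the (target - current) position error."""
    return tuple(pid.update(t - c, dt) for pid, c, t in zip(controllers, current, target))
```

For example, with three proportional-only controllers `PID(1, 0, 0)`, a position `(0, 0, 0)` and a target `(0.5, -0.2, 2.0)` give the command `(0.5, -0.2, 1.0)`: the z error is clamped to the output limit.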

Detection

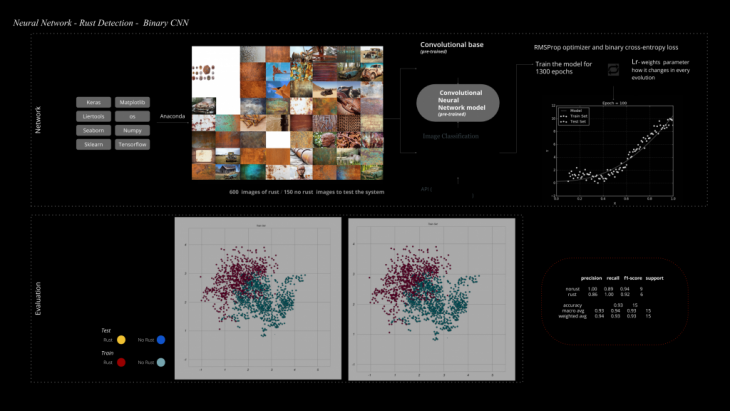

To be more accurate in the detection stage, a more complex feature-based model was needed. For that purpose, we first built a binary classifier, a neural network that identifies whether or not an image contains rust. We used a dataset of 600 rust images for training and 150 images for testing, trained the network for 1,300 epochs, and it reached an accuracy of 94%.

Detecting corrosion and rust manually can be extremely time- and effort-intensive, and in some cases even dangerous. There are also problems with the consistency of estimates: the defects identified vary with the skill of the inspector. In infrastructure, there are some common scenarios where corrosion detection is of critical importance. Manual inspection is partly eliminated by having robotic arms or drones photograph components from various angles and then having an inspector go through these images to identify rusted components that need repair. Even this process can be quite tedious and costly, as engineers have to go through many such images for hours to determine the condition of each component. While computer vision techniques have been used with limited success in the past to detect corrosion in images, the advent of deep learning has opened up new possibilities that could lead to accurate corrosion detection with little or no manual intervention.

To be more accurate, a more complex feature-based model is needed, one that also considers texture features. Applying this to rust detection is challenging, because rust does not have a well-defined shape or color. To succeed with classical computer vision techniques, one needs to bring in complex segmentation, classification and feature measures. This is where deep learning comes in: it learns complex features on its own, without anyone specifying them explicitly. This means that a deep learning model can extract the features of rust automatically as we train it with images of rusted components.
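The network architecture is not given in the text. Purely as an illustration of what a binary (rust / no rust) classifier does, the sketch below trains a tiny one-hidden-layer network in NumPy on generic feature vectors. An image classifier like the one described would take pixel data and would more likely be a convolutional network built with a deep-learning framework. The layer size, learning rate, epoch count and names are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_binary_mlp(X, y, hidden=8, lr=0.5, epochs=500, seed=0):
    """Train a one-hidden-layer network with a sigmoid output by full-batch
    gradient descent on the mean binary cross-entropy loss.
    One epoch = one pass over all training samples."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, dtype=float).reshape(-1, 1)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.5, (d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, 1))
    b2 = np.zeros(1)
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)            # hidden activations
        p = sigmoid(h @ W2 + b2)            # predicted probability of "rust"
        dz2 = (p - y) / n                   # gradient w.r.t. the output logit
        dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
        dh = (dz2 @ W2.T) * (1.0 - h ** 2)  # back-propagate through tanh
        dW1, db1 = X.T @ dh, dh.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict(params, X, threshold=0.5):
    """Return 1 (rust) where the predicted probability reaches the threshold."""
    W1, b1, W2, b2 = params
    p = sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2).ravel()
    return (p >= threshold).astype(int)

def accuracy(y_true, y_pred):
    """Fraction of correct predictions, as in the accuracy figure quoted above."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
```

A reported accuracy such as 94% would correspond to calling `accuracy` on a held-out test set that was not used for training.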

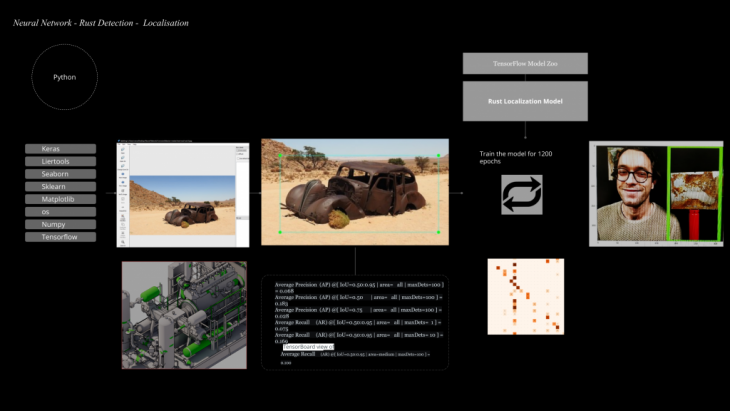

Apart from a yes-or-no answer for each image, we wanted a more precise identification of where rust occurs. We therefore built another system for localisation: essentially a system that recognizes rust in an image and places a bounding box around it. The model was again trained on the same dataset as before, this time for 1,200 epochs; on the right you can see some initial tests.

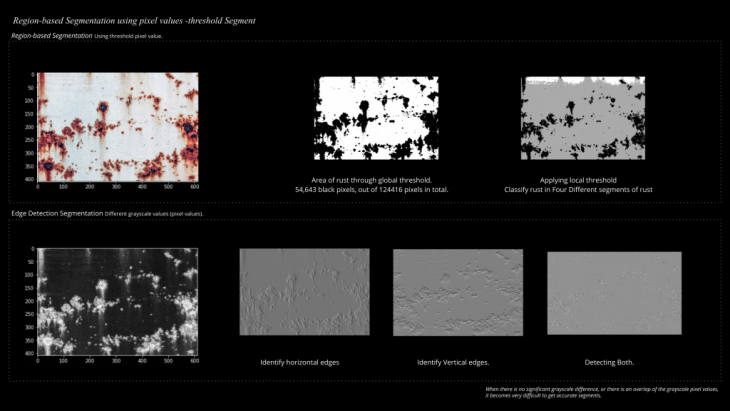

Image segmentation is the process of partitioning a digital image into multiple segments (sets of pixels, also known as image objects). The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries (lines, curves, etc.) in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.

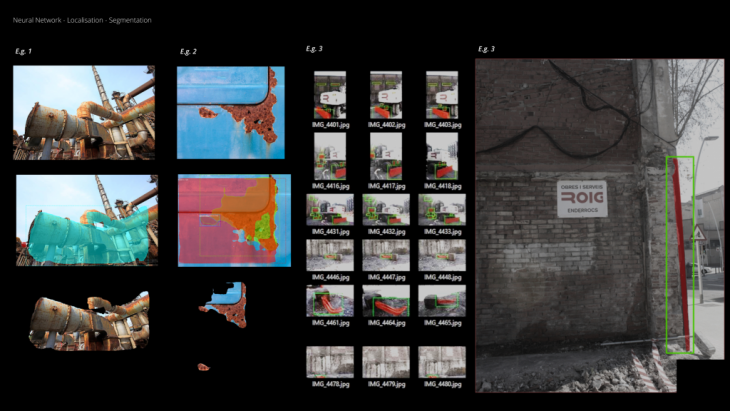

In our case, semantic segmentation was used to accurately identify the position of rust in space. We used Mask R-CNN and, with a large dataset of rust images and corrosion textures, trained a model able to accurately identify and isolate rust in images.

But localisation was not enough: we wanted a way to precisely segment the exact rust pixels in each image. For that purpose, we started with two segmentation methods, region-based segmentation and edge-detection segmentation. With these we were able to measure the exact rust area in pixels in each image and, from that, the overall extent of rust in space.
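The parameters of the region-based method are not given. As an assumed, simplified version, the sketch below grows a region from a seed pixel inside a rust patch, adding neighbouring pixels of similar colour, and then reports the rust area in pixels and as a fraction of the image. The seed, the colour tolerance and the names are hypothetical.

```python
from collections import deque

import numpy as np

def region_grow(image, seed, tolerance=30.0):
    """Seeded region growing on an (H, W, 3) RGB image.

    Starting at seed (row, col), add 4-connected neighbours whose colour is
    within `tolerance` (Euclidean RGB distance) of the seed colour.
    Returns a boolean mask of the grown region."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape[:2]
    seed_color = img[seed]
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc]:
                if np.linalg.norm(img[nr, nc] - seed_color) <= tolerance:
                    mask[nr, nc] = True
                    queue.append((nr, nc))
    return mask

def rust_area(mask):
    """Rust area as a pixel count and as a fraction of the whole image."""
    return int(mask.sum()), float(mask.mean())
```

Comparing against the seed colour keeps the region from drifting gradually into neighbouring surfaces. The flip side is that rust with strongly varying colour needs several seeds or a looser tolerance.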

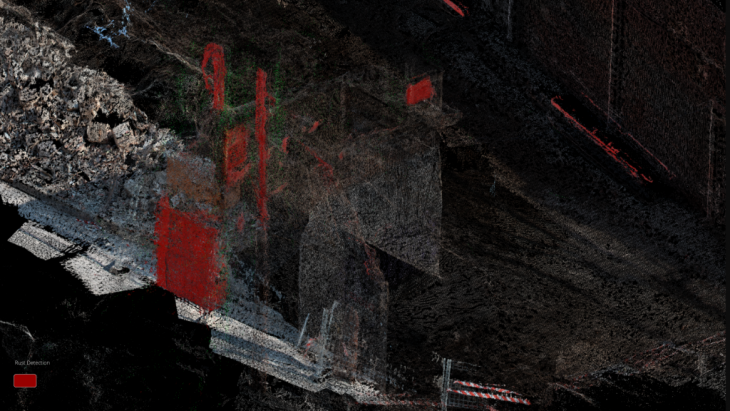

The precision of those two techniques was not satisfactory, so we looked into other ways of segmentation and ended up using semantic segmentation. With it we were able to accurately identify all the rust locations on the construction site we scanned. On the left you can see some initial tests and, on the right, the detection applied to the site we scanned.

Slicing is another technique we used to increase the resolution of the detection: each image is divided into a number of columns and rows, and the system is applied to each tile separately.
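The slicing step can be sketched as a wrapper around any per-tile detector. Here `detect` is a hypothetical stand-in for the trained model, assumed to return a boolean rust mask the same size as its input tile. The grid size is a free parameter.

```python
import numpy as np

def sliced_detection(image, detect, rows, cols):
    """Split `image` into a rows x cols grid, run `detect` on each tile and
    stitch the per-tile masks back into one full-size boolean mask.

    `detect` maps an (h, w, ...) tile to an (h, w) boolean mask."""
    h, w = image.shape[:2]
    # Integer tile boundaries; the last tile absorbs any remainder.
    row_edges = np.linspace(0, h, rows + 1).astype(int)
    col_edges = np.linspace(0, w, cols + 1).astype(int)
    mask = np.zeros((h, w), dtype=bool)
    for r0, r1 in zip(row_edges[:-1], row_edges[1:]):
        for c0, c1 in zip(col_edges[:-1], col_edges[1:]):
            mask[r0:r1, c0:c1] = detect(image[r0:r1, c0:c1])
    return mask
```

A plausible reason slicing helps is that a model with a fixed input resolution then sees each tile at full size instead of a downscaled whole image, so small rust spots cover more of its input.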

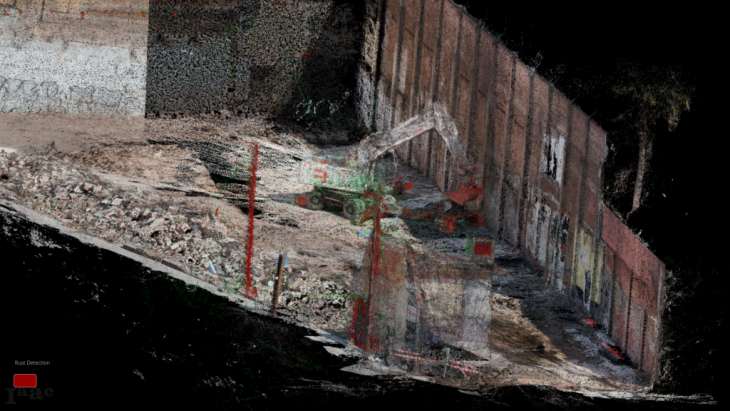

After passing all the images we took of the construction site through the various systems mentioned above, we rebuilt the point cloud, this time identifying the positions of rust in three-dimensional space. On the left you can see an axonometric view of the site, close-ups, and an elevation showing where most of the rust occurs on the site.

Some close-ups of the system; red marks exactly where rust has set in.
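The text does not say how the 2D detections were transferred to the point cloud. One common approach, assumed here, is to project each 3D point into an image using that image's camera pose and intrinsics (for example as recovered during photogrammetry) and to label the point as rust if it lands on a rust pixel. The function and argument names are hypothetical, and occlusion is ignored.

```python
import numpy as np

def label_rust_points(points, mask, K, R, t):
    """Mark 3D points that project onto rust pixels in one image.

    points: (N, 3) world coordinates; mask: (H, W) boolean rust mask;
    K: 3x3 camera intrinsics; R, t: world-to-camera rotation and translation.
    Returns a boolean array, True where the point lands on a rust pixel.
    Occlusion is ignored in this sketch."""
    cam = points @ R.T + t                  # world -> camera frame
    in_front = cam[:, 2] > 0                # only points in front of the camera
    uvw = cam[in_front] @ K.T               # pinhole projection (homogeneous)
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)  # pixel column
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)  # pixel row
    h, w = mask.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    labels = np.zeros(len(points), dtype=bool)
    idx = np.flatnonzero(in_front)[inside]
    labels[idx] = mask[v[inside], u[inside]]
    return labels
```

Running this for every image and combining the results (for example, a point counts as rust if any or most of its views say so) would give the rust-labelled point cloud.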

RUST EXPANSION

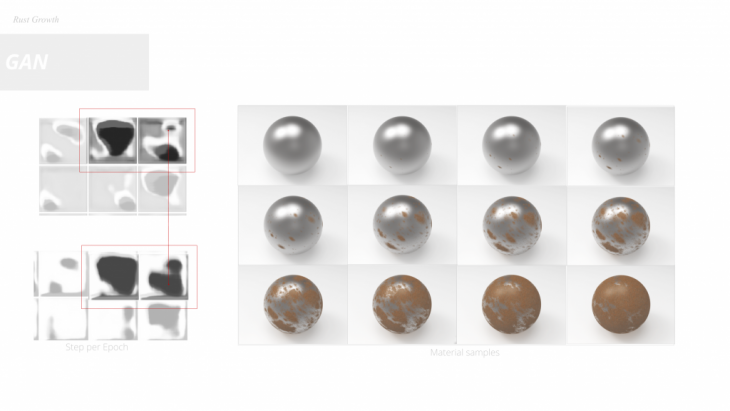

Generative adversarial networks (GANs) are a type of deep-learning-based generative model.

They are remarkably effective at generating high-quality, large synthetic images in a range of problem domains; in our case, the domain is the prediction of rust expansion. Instead of being trained directly, the generator model is trained through a second model, called the discriminator, which learns to distinguish real images from fake (generated) ones. The model we used was trained for 1,000 epochs, generating one image of rust expansion per epoch. By counting the difference in pixels between these images we estimated the rust growth, and by generating an equal number of textures we simulated the growth of corrosion on a generic surface.

For that purpose we built a different model, again trained on the same dataset as before, which is able to synthesize an image of rust as it would appear after a long period of time. Using this model we measured the per-step evolution of the rust, which we then mapped onto 500 new materials to simulate its growth.
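The pixel-counting step can be sketched as follows, assuming each generated image has already been converted into a boolean rust mask (the text does not say how). The function name is hypothetical.

```python
import numpy as np

def rust_growth(masks):
    """Given a time-ordered sequence of (H, W) boolean rust masks, return the
    rusted-pixel count per frame and the change between consecutive frames."""
    areas = np.array([np.count_nonzero(m) for m in masks])
    return areas, np.diff(areas)
```

The per-step differences are the quantity that would then be applied to new materials to simulate growth.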

USER INTERFACE

The user interface has some basic features: it highlights the different hotspot categories where rust is likely to occur and lets the user select the elements affected by rust.

This concludes our project and the services we are able to provide. These range from logging the different steel components of a heavy-industry structure and measuring structural quality in terms of rust attack and future rust expansion, to bundling all this information into a 3D user interface. This allows potential clients to manage the maintenance of their facilities while assuring quality control and safety checks on their infrastructure.

Blossomrust is a project of IaaC, Institute for Advanced Architecture of Catalonia, developed at the Master in Robotics and Advanced Construction (MRAC) in 2019-2020 by students Abdelrahman Koura, Alexandros Varvantakis, Cedric Droogmans and Luis Jayme Buerba. Faculty: Aldo Sollazzo (IAAC, NOUMENA) and Daniel Serrano (EURECAT Technology Centre of Catalonia).