Autonomous-navigation

This project explores the potential of ROS and the role it can play in converting an ordinary drone into a mapping drone; the same approach applies to UGVs and other robots. ORB_SLAM is used to generate point clouds in real time from a monocular camera. It supports loop closure and map saving, and a saved map can be reused to improve the quality of the point cloud/model. The final goal of the project is autonomous photogrammetry with ROS.

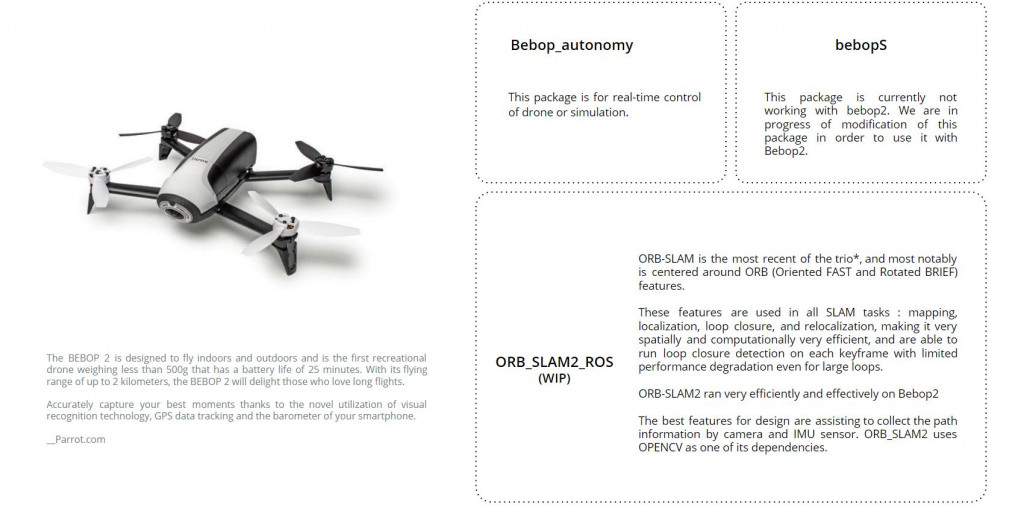

Hardware and software used: PARROT AR DRONE

A Parrot Bebop 2 was used for this project, along with ROS, Bebop_Autonomy and ORB_SLAM2.

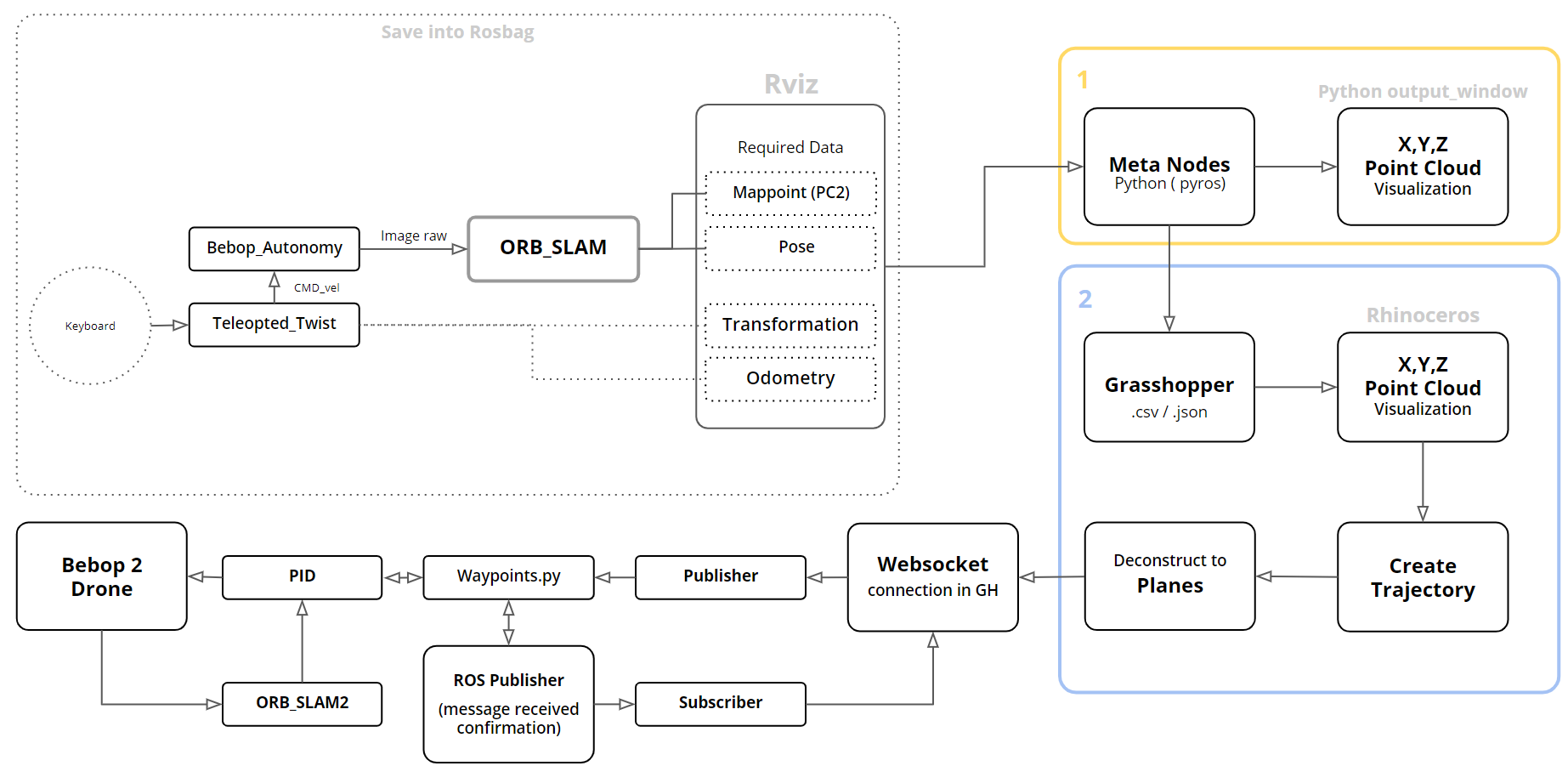

Software diagram and algorithm

The process uses ORB_SLAM2 with ROS to create point clouds in real time. We created a Python script that reads the point clouds in real time. ROS is connected to Grasshopper through the Python and WebSocket components of the "Bengesht" plugin, which can subscribe to the topics published by the Python scripts and by ROS/ORB_SLAM2. As a result, the point cloud can be visualized in Grasshopper in real time. A small part of the target area is scanned manually, and a trajectory is generated from it in Grasshopper; this serves as the starting point for autonomous navigation. Once the drone takes off along that trajectory, the process runs on its own in real time and the trajectory keeps being generated automatically, with ROS, ORB_SLAM2 and Grasshopper communicating continuously.
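The Bengesht WebSocket components talk to ROS through rosbridge, which exchanges JSON messages over a WebSocket. The minimal sketch below shows the kind of messages such a client would send, assuming the rosbridge v2 protocol's `advertise`, `subscribe` and `publish` operations. The topic names `/orb_slam2_mono/map_points` and `/bebop/waypoint`, and the choice of `geometry_msgs/Point` for waypoints, are hypothetical placeholders rather than the names used in the project.

```python
import json

# Hypothetical topic names; substitute the ones used in your ROS setup.
POINT_CLOUD_TOPIC = "/orb_slam2_mono/map_points"
WAYPOINT_TOPIC = "/bebop/waypoint"


def subscribe_msg(topic, msg_type):
    """rosbridge 'subscribe' operation: ask the server to stream a topic."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def advertise_msg(topic, msg_type):
    """rosbridge 'advertise' operation: declare a topic before publishing to it."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_waypoint_msg(topic, x, y, z):
    """rosbridge 'publish' operation carrying a geometry_msgs/Point-shaped message."""
    return json.dumps({
        "op": "publish",
        "topic": topic,
        "msg": {"x": float(x), "y": float(y), "z": float(z)},
    })


if __name__ == "__main__":
    print(subscribe_msg(POINT_CLOUD_TOPIC, "sensor_msgs/PointCloud2"))
    print(advertise_msg(WAYPOINT_TOPIC, "geometry_msgs/Point"))
    print(publish_waypoint_msg(WAYPOINT_TOPIC, 1.0, 0.5, 1.2))
```

In practice each string would be sent over a WebSocket connected to rosbridge_server, which listens on port 9090 by default.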

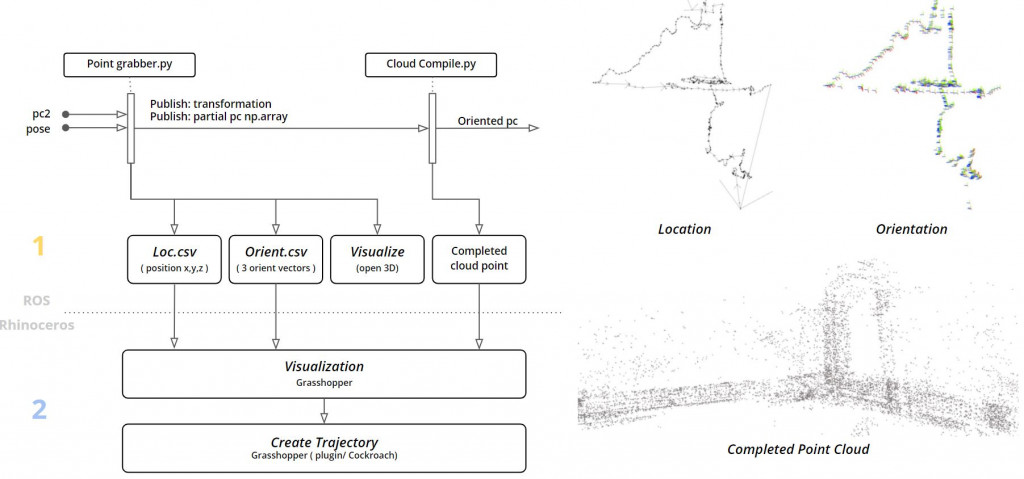

How does the point cloud grabber work?

There are two Python scripts. The first reads the point clouds in real time and saves them to CSV files: one CSV file stores orientation information, and another stores the position of the point cloud (PC). The second script reads the point cloud data saved by the first script in order to visualize it in 3D.
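The two roles described above can be illustrated with a minimal sketch: one function appends a frame's points to a position CSV and its orientation to a separate CSV, and the other reads the positions back for 3D plotting. The file names, the column layout (x, y, z per point and a quaternion qx, qy, qz, qw per frame) and the function names are assumptions for illustration; the project's scripts may store the data differently.

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical file names; the project's scripts may use different ones.
POSITION_FILE = "pc_positions.csv"        # one row per point: x, y, z
ORIENTATION_FILE = "pc_orientations.csv"  # one row per frame: qx, qy, qz, qw


def save_frame(points, orientation, out_dir="."):
    """Append a frame's points to the position CSV and its quaternion to the orientation CSV."""
    out = Path(out_dir)
    with open(out / POSITION_FILE, "a", newline="") as f:
        writer = csv.writer(f)
        for x, y, z in points:
            writer.writerow([x, y, z])
    with open(out / ORIENTATION_FILE, "a", newline="") as f:
        csv.writer(f).writerow(list(orientation))


def load_positions(out_dir="."):
    """Read all saved points back as (x, y, z) float tuples, e.g. for 3D plotting."""
    with open(Path(out_dir) / POSITION_FILE, newline="") as f:
        return [tuple(float(v) for v in row) for row in csv.reader(f) if row]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        save_frame([(0.0, 1.0, 2.0), (3.0, 4.0, 5.0)], (0.0, 0.0, 0.0, 1.0), tmp)
        print(load_positions(tmp))
```

Opening the files in append mode lets the grabber keep adding frames while the reader reloads the whole file whenever it refreshes the view.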

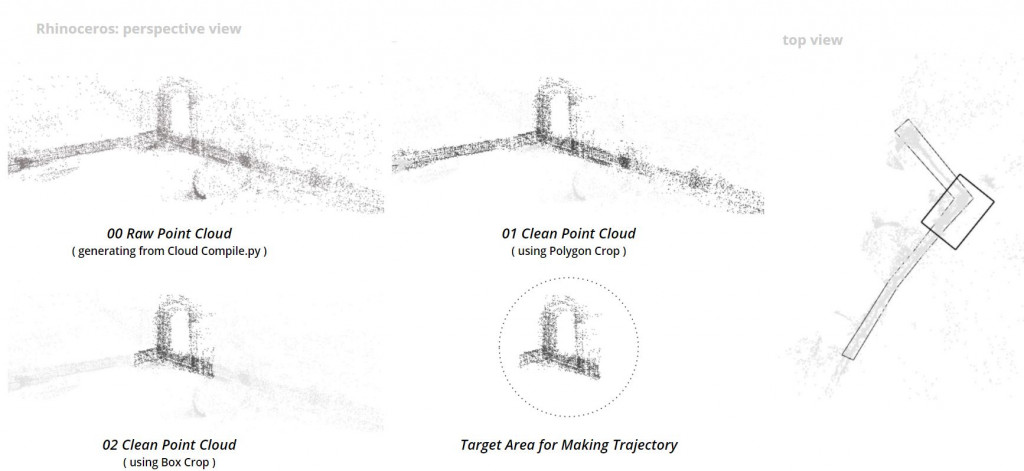

First manual point cloud extraction process

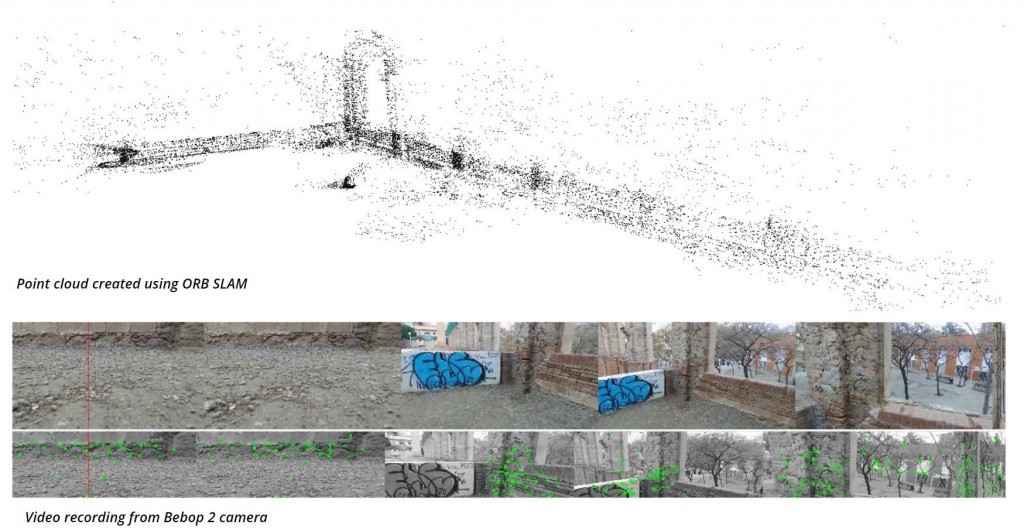

A rosbag is recorded from ROS to the computer, and the point clouds (PC) are extracted from it using the process described above (the point grabber scripts).

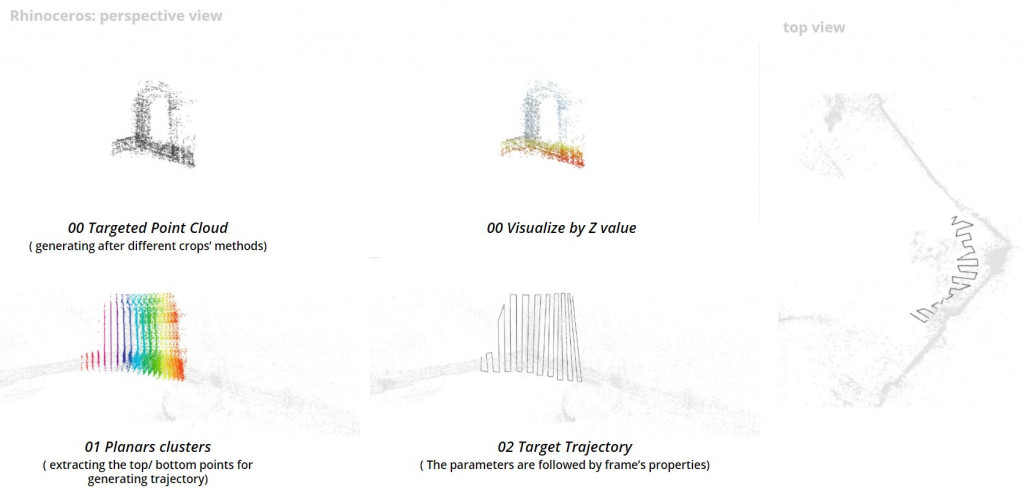

After the PC is extracted into Rhino/Grasshopper using a Python script, it is cleaned with respect to the target area where the trajectory is to be initiated. The trajectory can then be generated from the point cloud (see the reference image). We extracted points at regular intervals to maintain the photogrammetry overlap (minimum 70%) and used those points for the trajectory, keeping a distance of approximately 1 m from the point cloud (i.e., the target).
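The spacing between waypoints follows from the required overlap: if the camera footprint at distance d has width W = 2 d tan(FOV/2), consecutive photos overlap by the fraction o when the spacing is s = (1 − o) W. With a purely illustrative 90° field of view at 1 m, W ≈ 2 m and s ≈ 0.6 m for o = 0.7. The minimal sketch below computes that spacing, offsets cleaned surface points by 1 m along their normals, and resamples a path at a fixed arc-length interval. The field of view, the availability of per-point normals (for example estimated in Grasshopper) and all function names are assumptions, not values or code taken from the project.

```python
import math


def waypoint_spacing(distance_m, fov_deg, overlap):
    """Spacing between shots that gives the requested overlap.

    Footprint width at the given distance: W = 2 * d * tan(FOV / 2).
    Consecutive images share `overlap` of W when spacing s = (1 - overlap) * W.
    """
    footprint = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return (1.0 - overlap) * footprint


def offset_waypoints(surface_points, normals, distance_m):
    """Move each surface point `distance_m` along its (normalised) normal."""
    waypoints = []
    for (px, py, pz), (nx, ny, nz) in zip(surface_points, normals):
        length = math.sqrt(nx * nx + ny * ny + nz * nz)
        waypoints.append((px + distance_m * nx / length,
                          py + distance_m * ny / length,
                          pz + distance_m * nz / length))
    return waypoints


def resample_polyline(path, spacing):
    """Return points every `spacing` metres of arc length along a 3D polyline."""
    samples = [path[0]]
    carry = 0.0  # arc length travelled since the last sample
    for a, b in zip(path, path[1:]):
        seg = math.dist(a, b)
        t = spacing - carry  # position of the next sample within this segment
        while t <= seg:
            f = t / seg
            samples.append(tuple(a[i] + f * (b[i] - a[i]) for i in range(3)))
            t += spacing
        carry = seg - (t - spacing)
    return samples
```

Resampling by arc length, rather than keeping every raw point, keeps consecutive waypoints no farther apart than the spacing, so the overlap target holds regardless of how densely ORB_SLAM2 populated each region.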

Once the trajectory is complete in Grasshopper, the drone can be connected through ROS and Grasshopper, and the process is ready to start.

Project files can be accessed at:

https://github.com/MRAC-IAAC/Autonomous-navigation

Follow these steps:

Note: you need two PCs, or a virtual machine, to run Ubuntu (ROS) alongside Grasshopper on Windows.

- Connect the Bebop to the laptop over Wi-Fi.

- Start Bebop_driver to connect the Bebop to ROS.

- Run ORB_SLAM2_ROS.

- Launch pointgrabber.py, visualizeptcloud.py and pointcloudcompiler.py in separate terminals.

- Launch bebop_position_controller.py. This file contains multiple publishers and subscribers that send and receive information such as the Bebop's position and the point clouds to and from ROS. It receives the trajectory from Grasshopper (GH) as waypoints/coordinates and passes them to the Bebop as commands to fly to those points. This happens in real time, with the waypoints passed one after another. When the drone reaches a waypoint, the script sends an “Arrived” message, which acts as a request for the next waypoint.

- Run the ROS bridge on the Ubuntu PC.

- Start Grasshopper and connect it to the ROS PC using the WebSocket component from the Bengesht plugin; follow the rosbridge and Bengesht guides from the link provided.

- Once the setup is ready, select the terminal running bebop_position_controller.py and take off with “T”. After take-off, switch the drone to navigation mode with “N”, which starts the trajectory. The drone flies the trajectory and then returns to its start position.

- To interrupt the process at any time, press “L” to land.
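The keyboard commands (“T”, “N”, “L”) and the “Arrived” handshake described in the steps above can be modelled as a small state machine. The sketch below is plain Python with no ROS dependency; the class name, the state names and the explicit `home` position used for the return leg are hypothetical, and the actual bebop_position_controller.py may be organised differently.

```python
from collections import deque


class WaypointDispatcher:
    """Hands out one waypoint at a time; each "Arrived" report releases the next.

    Hypothetical sketch: in the real controller the waypoints come from
    Grasshopper over rosbridge and the commands go to the Bebop through ROS.
    """

    def __init__(self, waypoints, home):
        # Append the start position so the drone returns there at the end.
        self.queue = deque(list(waypoints) + [home])
        self.state = "landed"
        self.current = None

    def on_key(self, key):
        """T = take off, N = start navigation, L = land (always allowed)."""
        key = key.upper()
        if key == "T" and self.state == "landed":
            self.state = "hovering"
        elif key == "N" and self.state == "hovering":
            self.state = "navigating"
            return self._next()
        elif key == "L":
            self.state = "landed"
            self.current = None
        return None

    def on_arrived(self):
        """Handle an "Arrived" message; return the next target, or None when done."""
        if self.state != "navigating":
            return None
        return self._next()

    def _next(self):
        self.current = self.queue.popleft() if self.queue else None
        if self.current is None:
            self.state = "hovering"  # trajectory finished, waiting for "L"
        return self.current
```

Because a new target is released only after an “Arrived” report, the controller never queues more than one command on the drone, and “L” overrides any state.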

Autonomous Navigation // S.2 is a project of IAAC, Institute for Advanced Architecture of Catalonia

developed at the Master in Robotics and Advanced Construction, Software II Seminar, in 2020/2021 by:

Students: Shahar Abelson, Charng Shin Chen, Arpan Mathe, Orestis Pavlidis

Faculty: Carlos Rizzo

Faculty Assistant: Soroush Garivani