+ ABSTRACT

To accommodate rapid urbanization while making cities more sustainable, livable, and equitable, designers need quantitative and qualitative tools that deliver real-time results, so they can make informed decisions at the design stage. However, the current urban design process is often a long, time-consuming workflow. Typically, a team of architects and urban planners conceives a handful of schemes from zoning requirements with the help of CAD systems. Given the scale and complexity of the problem, designers have very limited time and capacity to design and test many iterations. The result is often only a few options, which do not address the problem in its full range.

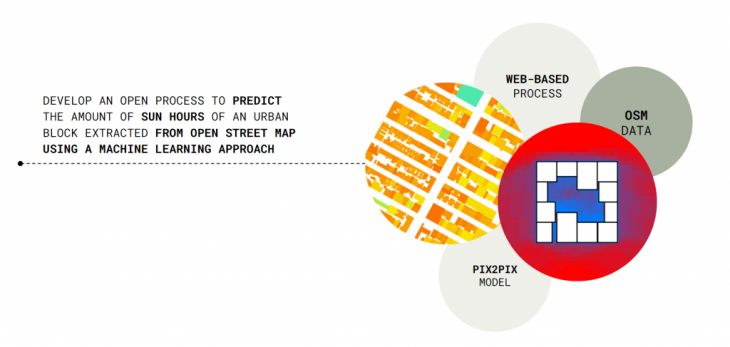

Given this challenge, we set out to research and develop a new machine learning methodology that can predict urban solar radiation in real time, without the need for time-consuming simulations.

+ CONCEPT

Building on this, we set out to develop a process that takes existing urban blocks from a city, in this case New York City, and uses a real-time machine learning workflow to predict the number of sun hours the ground surface will receive.

+ METHODOLOGY

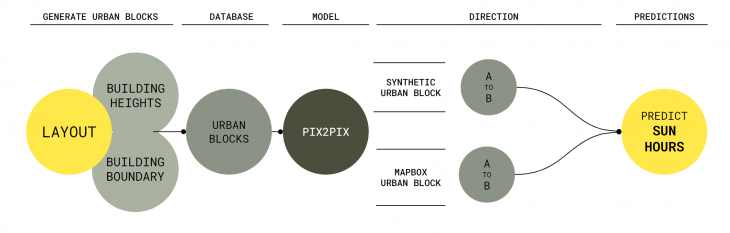

Once the challenge and the concept were defined, we developed the pseudo-code for building and testing our machine learning approach. The process starts with the creation of our own dataset, using an urban block generator built in Grasshopper. We then use the dataset to train a pix2pix model, with 800 images for training and 200 images for validation. Once the model reached the accuracy we wanted, we split the workflow into two scenarios. The first predicts sun hours on new urban blocks created with the generator, so it can be used as a design workflow. The second uses the trained model as a surrogate model to predict sun hours on existing blocks extracted from OpenStreetMap.
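The 800/200 split described above can be sketched as follows. This is a minimal illustration. It assumes the 1,000 generated samples are identified by names shared between each input image (color-coded heights) and its target image (sun hours). The `split_pairs` helper and the `block_XXXX` naming are hypothetical and are not taken from the project repository.

```python
import random


def split_pairs(names, n_train=800, n_val=200, seed=0):
    """Shuffle sample names reproducibly and split them into train/val lists.

    Each name is a hypothetical identifier shared by an input image and its
    target image, so splitting names keeps every input/target pair together.
    """
    if len(names) < n_train + n_val:
        raise ValueError("not enough samples for the requested split")
    shuffled = sorted(names)              # sort first so the split is reproducible
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    return train, val


# 1,000 hypothetical sample names: block_0000 ... block_0999
names = [f"block_{i:04d}" for i in range(1000)]
train, val = split_pairs(names)
print(len(train), len(val))
```

Splitting by shared names rather than by individual files keeps each input image in the same split as its target image.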

+ DATA GENERATION

We developed a custom Grasshopper script that generates diverse urban blocks imitating the grain and composition of New York City. The script iterates through multiple parameters, such as block width and length, number of plots, plot depth, building heights, and green spaces. We also wanted to encode the height of each building. To do this, we used a color-coded approach in which each building's color represents its height, ranging from 5 to 80 meters. The final datasets have been uploaded to a GitHub repository that can be accessed via this link.
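A linear color ramp is one way to implement this height encoding. The sketch below maps heights between 5 and 80 m to RGB. It decodes them back by projecting a color onto the ramp. The blue-to-red endpoints and the helper names are hypothetical, because the exact palette used in the Grasshopper script is not given here.

```python
MIN_H, MAX_H = 5.0, 80.0      # height range stated for the dataset (meters)
LOW_RGB = (0, 0, 255)         # hypothetical color for 5 m
HIGH_RGB = (255, 0, 0)        # hypothetical color for 80 m


def height_to_rgb(height_m):
    """Map a building height to an RGB color on a linear ramp (clamped to range)."""
    h = min(max(height_m, MIN_H), MAX_H)
    t = (h - MIN_H) / (MAX_H - MIN_H)        # 0.0 at 5 m, 1.0 at 80 m
    return tuple(round(lo + t * (hi - lo)) for lo, hi in zip(LOW_RGB, HIGH_RGB))


def rgb_to_height(rgb):
    """Recover a height by projecting the color onto the ramp direction."""
    d = [hi - lo for lo, hi in zip(LOW_RGB, HIGH_RGB)]
    v = [c - lo for c, lo in zip(rgb, LOW_RGB)]
    t = sum(a * b for a, b in zip(v, d)) / sum(a * a for a in d)
    t = min(max(t, 0.0), 1.0)                # clamp colors that fall off the ramp
    return MIN_H + t * (MAX_H - MIN_H)


print(height_to_rgb(5), height_to_rgb(80))   # (0, 0, 255) (255, 0, 0)
```

With 8-bit channels, a round trip through the ramp loses only a fraction of a meter to rounding. The same palette then has to be reused wherever real buildings are colored.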

+ TRAIN MODEL

For our machine learning process, we chose the Pix2Pix model developed in 2017 by Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei Efros at the University of California, Berkeley (link). Pix2Pix is an image-to-image translation method based on conditional adversarial networks. These networks learn the mapping from input to output images, and they also learn a loss function to train that mapping. We chose it for its capacity and flexibility to learn from custom datasets without requiring a complex architecture. After testing the model with multiple hyperparameter settings, we chose the configuration below as the optimal set:

- MODEL HYPERPARAMETERS

- TRAINING EPOCHS: 10

- LEARNING RATE: 0.001

- BATCH SIZE: 40
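For reference, the pix2pix paper trains the generator G against a conditional discriminator D using the objective below. The objective combines the adversarial term with an L1 term that keeps outputs close to the ground truth, and the paper's experiments use λ = 100. In pix2pix, the noise z is supplied only through dropout. Reading x as the color-coded block image and y as the sun-hour map is our interpretation for this project, and the source does not state which λ was used here.

```latex
\begin{aligned}
\mathcal{L}_{cGAN}(G, D) &= \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_1\right] \\
G^{*} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G)
\end{aligned}
```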

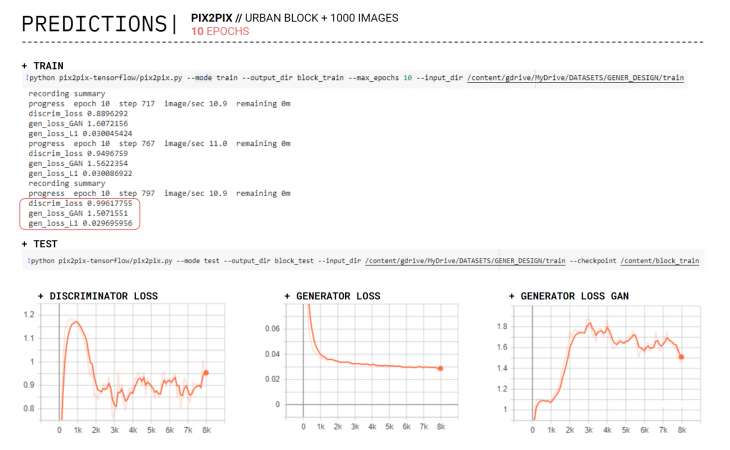

+ Trained model using 10 Epochs

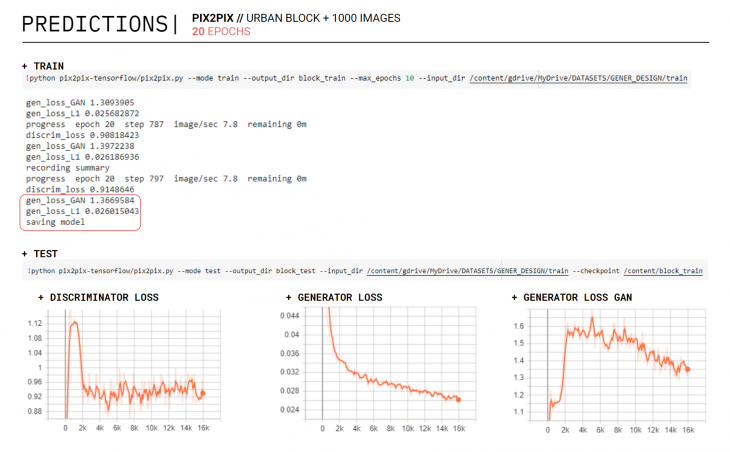

+ Trained model using 20 Epochs

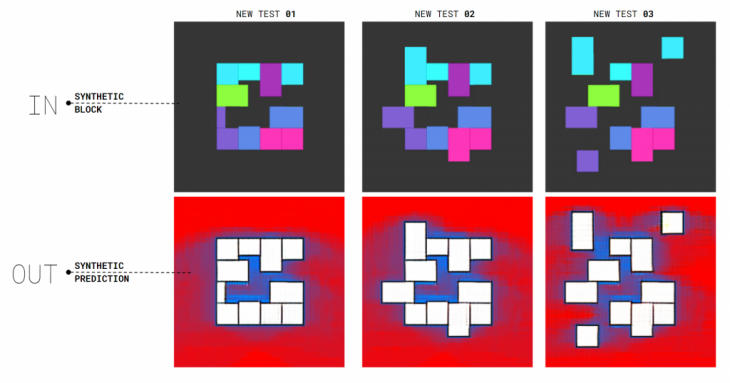
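As a quick sanity check on the hyperparameters listed above, the sketch below computes how many optimizer steps the 10-epoch and 20-epoch runs take. It assumes that BATCH NUMBER refers to the batch size (images per batch) and that training uses the 800-image training split. The `TrainConfig` class is a hypothetical illustration, not the project's training code.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    epochs: int = 10
    learning_rate: float = 0.001
    batch_size: int = 40      # assumption: "BATCH NUMBER" means images per batch
    n_train: int = 800        # training images from the 800/200 split

    def steps_per_epoch(self) -> int:
        # a final partial batch, if any, still counts as one optimizer step
        return math.ceil(self.n_train / self.batch_size)

    def total_steps(self) -> int:
        return self.epochs * self.steps_per_epoch()


cfg10 = TrainConfig(epochs=10)
cfg20 = TrainConfig(epochs=20)
print(cfg10.steps_per_epoch(), cfg10.total_steps(), cfg20.total_steps())  # 20 200 400
```

Under these assumptions, going from 10 to 20 epochs doubles the number of generator updates from 200 to 400.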

+ TEST MODEL

Once the model had been trained with multiple configurations and reached a good generator loss, we tested it on our test dataset. This dataset is a series of tiles the model had not seen during training, which we used to evaluate and compare how well the model predicts. We tested on three sets of tiles. The first set has low variance in the parameter space, with nearly square, typical urban block layouts. The second set has more diverse urban blocks with irregular dimensions that resemble the rectangular blocks of New York City. In the third set, we broke up the urban block almost completely to test the limits of the prediction model.
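One simple way to score each test set is the per-pixel mean absolute error between the predicted and the simulated sun-hour maps. The sketch assumes both images have already been decoded from colors back to sun hours per pixel. The optional mask, which restricts the score to ground-surface pixels, is also our assumption, and the project does not state which metric it used.

```python
import numpy as np


def tile_mae(pred, target, mask=None):
    """Mean absolute error between predicted and target sun-hour maps.

    pred, target: 2-D arrays of sun hours per pixel (assumed already decoded
    from the color-coded images).
    mask: optional boolean array, True where a pixel is ground surface.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    err = np.abs(pred - target)
    if mask is not None:
        err = err[np.asarray(mask, dtype=bool)]
    return float(err.mean())


def set_mae(pairs):
    """Average tile MAE over one test set given as (pred, target) pairs."""
    return float(np.mean([tile_mae(p, t) for p, t in pairs]))


target = np.array([[4.0, 6.0], [2.0, 8.0]])
pred = np.array([[5.0, 6.0], [2.0, 6.0]])
print(tile_mae(pred, target))  # 0.75
```

Computing `set_mae` separately for the square, rectangular, and fragmented sets would show numerically how accuracy degrades as blocks move away from the training distribution.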

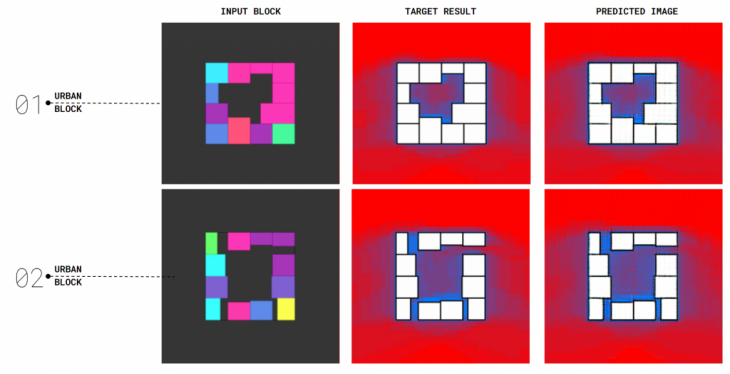

+ First test set using a conventional square urban block

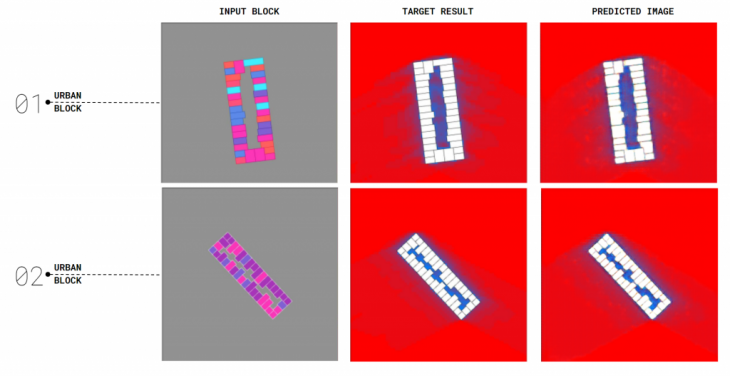

+ Second test set using a more diverse rectangular block inspired by New York’s urban fabric

+ Third test set using a completely fragmented urban block

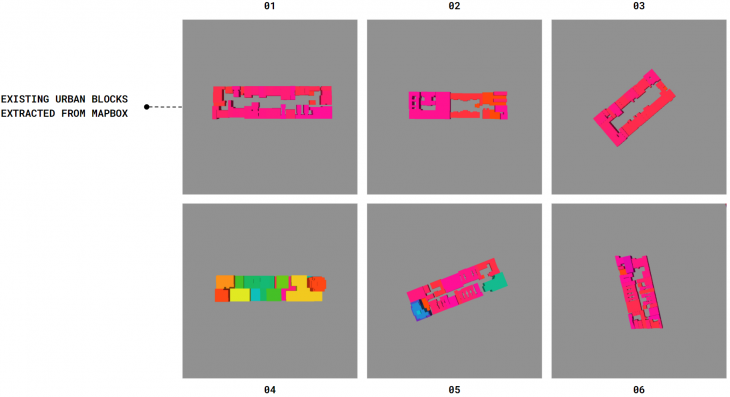

+ SURROGATE MODEL // EXISTING BLOCKS

As a final step of our research, we investigated whether our trained pix2pix model could serve as a surrogate machine learning model to predict sun hours on existing urban blocks extracted from OpenStreetMap. As a starting point, we created a custom web-based map of New York using Mapbox and an OpenStreetMap 3D building dataset. We then styled and colored all existing buildings by height to match the color range of our synthetic block generator in Grasshopper. This web-based map lets us view and extract any existing urban block in New York City and feed it into our pretrained model.
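A Mapbox GL style layer that colors buildings by height can be written as a plain JSON object. The sketch below builds one in Python so it can be kept in sync with the generator's color ramp. It assumes the Mapbox Streets vector tiles (source `composite`, source layer `building`, with a `height` attribute). The blue-to-red ramp and the layer id are hypothetical placeholders that would need to match the colors actually used in the Grasshopper generator.

```python
import json

MIN_H, MAX_H = 5, 80                          # same height range as the synthetic blocks
LOW_RGB, HIGH_RGB = (0, 0, 255), (255, 0, 0)  # hypothetical ramp endpoints


def height_color_layer(layer_id="buildings-by-height"):
    """Return a Mapbox GL style layer that colors extruded buildings by height."""
    color_expr = [
        "interpolate", ["linear"], ["get", "height"],
        MIN_H, "rgb({}, {}, {})".format(*LOW_RGB),
        MAX_H, "rgb({}, {}, {})".format(*HIGH_RGB),
    ]
    return {
        "id": layer_id,
        "type": "fill-extrusion",
        "source": "composite",        # assumption: Mapbox Streets tiles
        "source-layer": "building",
        "paint": {
            "fill-extrusion-color": color_expr,
            "fill-extrusion-height": ["get", "height"],
        },
    }


print(json.dumps(height_color_layer(), indent=2))
```

Outside the 5 to 80 m range, `interpolate` returns the color of the nearest stop. Very low and very tall buildings therefore stay within the same palette as the synthetic data.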

+ Custom New York web app used to extract existing urban blocks for sun-hour prediction

+ A series of blocks extracted from the web-app to use as test sets

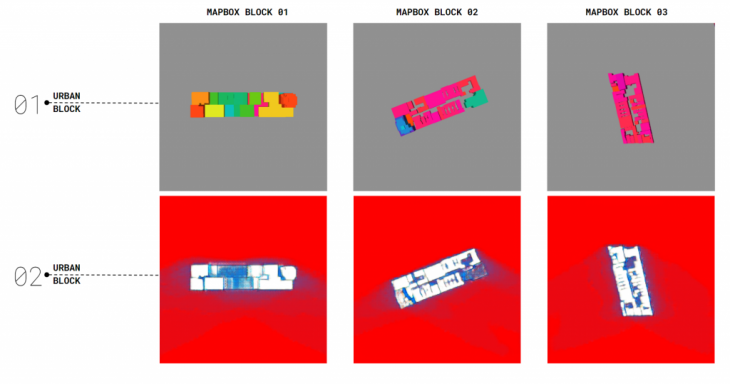

+ SURROGATE MODEL // PREDICT SUN HOURS

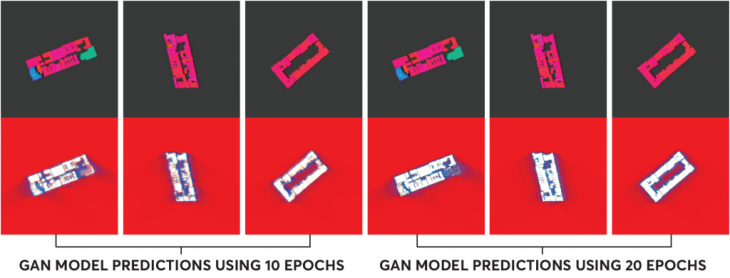

Once we had extracted existing urban blocks from New York City in the same graphical style as our synthetic dataset, we used them as test inputs for our pretrained machine learning model. We ran two tests with the same model, trained for 10 and for 20 epochs, to see whether the longer training brought any major improvement in the quality of the final output.
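To feed extracted blocks into the pretrained model and compare the 10-epoch and 20-epoch outputs, each tile has to be converted to the value range pix2pix works in. The reference implementation normalizes images to [-1, 1], and its generator ends in a tanh. The helpers below are a minimal NumPy sketch under that assumption. They are hypothetical and assume tiles are already cropped to the model's input size (256 x 256 by default in pix2pix). `mean_abs_change` is just one simple way to quantify how much two predictions differ, not a metric reported by the project.

```python
import numpy as np


def to_model_input(tile_rgb):
    """uint8 HxWxC tile in [0, 255] -> float32 in [-1, 1], the range pix2pix uses."""
    tile = np.asarray(tile_rgb, dtype=np.float32)
    return tile / 127.5 - 1.0


def from_model_output(pred):
    """Generator output in [-1, 1] (tanh) -> uint8 image in [0, 255]."""
    pred = np.clip(np.asarray(pred, dtype=np.float32), -1.0, 1.0)
    return np.round((pred + 1.0) * 127.5).astype(np.uint8)


def mean_abs_change(img_a, img_b):
    """Mean per-pixel difference (0-255 scale) between two predicted images,
    e.g. the 10-epoch and 20-epoch outputs for the same extracted block."""
    a = np.asarray(img_a, dtype=np.float32)
    b = np.asarray(img_b, dtype=np.float32)
    return float(np.abs(a - b).mean())
```

A small `mean_abs_change` between the two outputs would suggest that the extra 10 epochs changed little. For existing blocks this comparison does not measure accuracy, because no simulated ground truth is involved.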

+ First prediction test using 10 Epochs

+ Second prediction test using 10 and 20 epochs

+ CONCLUSION

In conclusion, our research found that machine learning models in complex design and architectural workflows can shorten computation times. They can also open the door to new and broader opportunities that could change the way we practice architecture. We also found that generating a synthetic dataset with generative models inside Grasshopper might not be the best solution. Training on geographic data exported from OSM, from which we can extract the boundaries and metadata of real urban blocks, could achieve better results.

+ GITHUB REPOSITORY: LINK

+ PROJECT PRESENTATION: LINK

+ PROJECT VIDEO: LINK

+ CREDITS

Urban Lux is a project of IAAC, the Institute for Advanced Architecture of Catalonia, developed in the Master in Advanced Computation for Architecture & Design in 2020/21 by Hesham Shawqy and German Otto Bodenbender. Faculty: Stanislas Chaillou and Oana Taut.