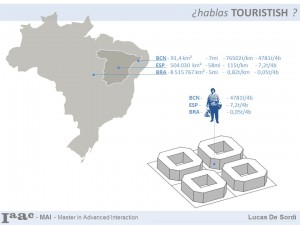

THE VISIBLE CITIES

This project is a system that uses cameras to collect data, understand the metabolic dynamics of people’s movement, and enhance geo-positioning tools. It aims to be implemented on the Smart Citizen platform as an urban tool to identify where people are and how many of them there are, but also to be useful as an installation for those who experience the city.

WHY?

Tracking cars, buses, bikes, metros and so on is easy in the age of the Internet of Things, but tracking the most important player in the city, the human, is a much harder task. It usually involves people’s collaboration, technological limitations and privacy implications. Tourists are a good example: they are not part of local communities, but they play a very important role in big cities, especially in Barcelona, a city that receives around 5 times its own population in tourists. That’s more visitors per year than the whole of Brazil!

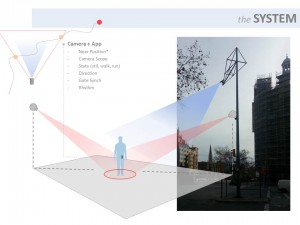

DO WE HAVE THE TOOLS FOR IT?

Cameras have enormous potential: thousands of them are already spread all over cities, running 24 hours a day, and their capacity to collect data is amazing. Building the tool is difficult, but once it is ready it can be deployed everywhere.

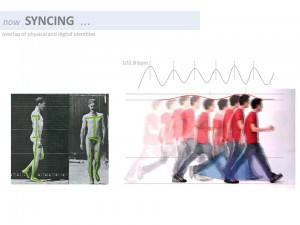

Using cameras and a Computer Vision application, it’s possible to establish the position of individuals with very high precision, much better than a mobile’s GPS. On the other hand, mobiles carry much more information, such as the user’s ID, preferences and social contacts, along with many other sensors.

XL. CITY SCALE

The goal here is to combine both in a platform that can identify the person for the camera and provide a precise position for the mobile app. This platform is also needed to protect the user’s privacy, returning to the Smart Citizen map and the city council only the information that the user decides to provide, such as sex, age, and the communities they participate in. On the map it will be possible to see hotspots in real time, pedestrian space usage over time, the number of visits to tourist sites, and so on. The data can get much more specific and complex than in today’s systems, for example the time spent by men aged about 20-30 in front of a billboard.
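
The privacy filter described above could look roughly like the following Python sketch. It is only an illustration: the `Profile` class, its field names and the `public_record` function are hypothetical, since the platform's real data model is not specified here. The idea is that the device ID stays on the platform and only the fields the user opted to share travel with the position.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical user profile; field names are assumptions for illustration."""
    device_id: str
    sex: str
    age: int
    communities: list[str]
    # Names of the fields the user has agreed to share with the map and the council.
    shared_fields: set[str] = field(default_factory=set)


def public_record(profile: Profile, position: tuple[float, float]) -> dict:
    """Return the position plus only the fields the user agreed to share."""
    record = {"position": position}
    for name in ("sex", "age", "communities"):
        if name in profile.shared_fields:
            record[name] = getattr(profile, name)
    # device_id is never copied, so the published record stays anonymous.
    return record
```

A user who shares only their age would be published as a position and an age, with sex, communities and device ID left out.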

THE APP – MUYBRIDGE’S LOGBOOK!

Syncing this data between the camera and an app is a bit tricky. The method I’m trying to develop involves reporting the device’s approximate position, direction, speed, and walking frequency and timing (its gait) to the platform, so that it can be sure that ‘person one’ on camera corresponds to the right device. For that I developed an Android app in Processing that collects data from the device’s sensors, such as GPS, compass and gyroscope (used to identify the bouncing rhythm of walking, for example), and sends the data to the server in real time. The server does the match using a probability equation based on the number of people and on the reliability and precision of the sensors. The app is meant to run under the hood of the app interface I previously proposed for Smart Citizen.

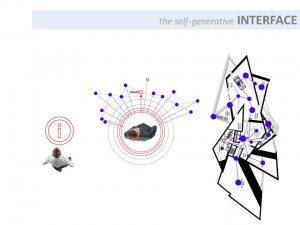

M. A HUMAN-CENTRIC INTERFACE

Taking advantage of this technology, the final part of this work is an installation that uses projectors to display a dynamic interface that guides and informs a target individual. It could be set up inside museum halls, or simply point out directions with a laser projector in outdoor tourist spots.