The MatterSite project automates the acquisition and analysis of material data to promote the reuse of materials from demolition waste streams. It works at two main scales: a site scale, which determines the location, volume, and accessibility of reusable materials before demolition, and an element scale, which extracts exact dimensional, qualitative, and aesthetic data from individual pieces after demolition.

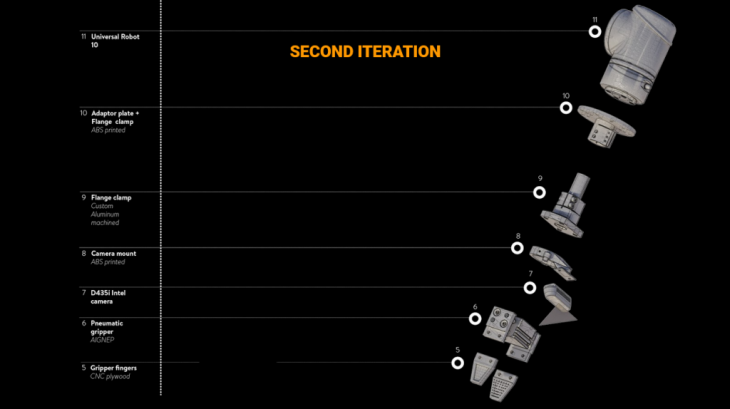

This Automated Element Analysis Module implements an automated system for handling a single relevant material from a single source, in this case the IaaC Digital Urban Orchard Pavilion, disassembled in 2021. The recovered material is redwood timber framing with a 45 mm cross section, in lengths from about 50 cm to over 2 m. With this stock, analysis and sorting are carried out with a Universal Robots UR10 robot, a standard pneumatic gripper, and an Intel RealSense D435i camera.

Scanning

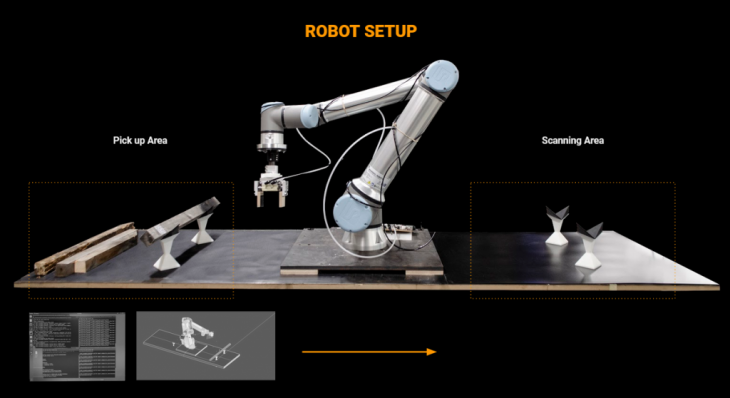

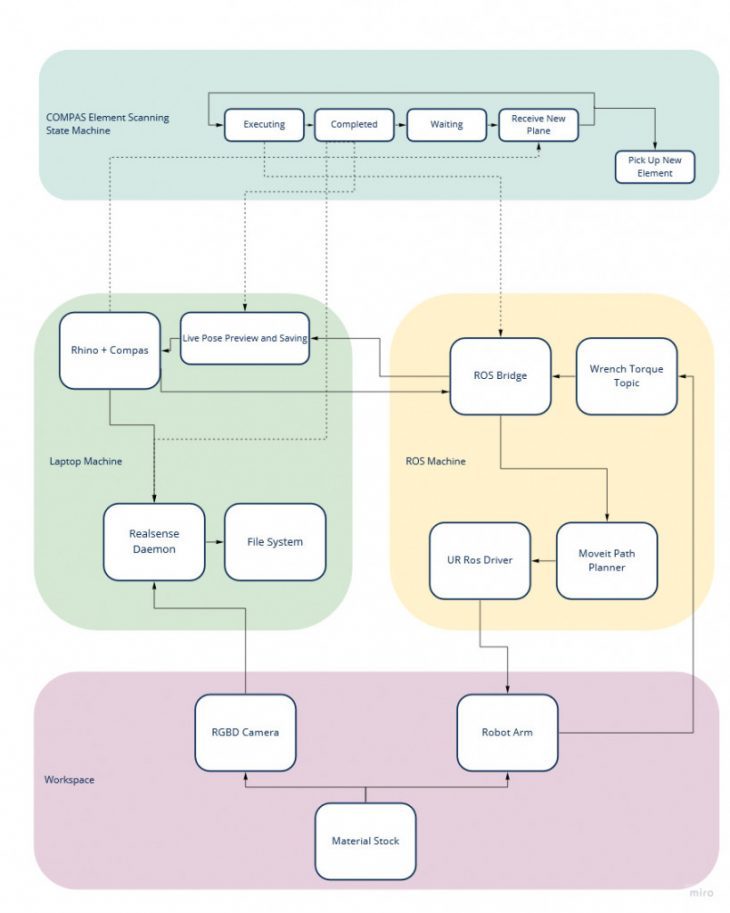

The workcell is divided into two areas: a dropoff/pickup area where new pieces are added, and a scanning area where each piece is held for analysis. Each piece is placed in the dropoff area in an arbitrary position, which the system detects before moving the piece to the scanning area and scanning it. This workflow is implemented with a live-control system connecting ROS and Rhino/Grasshopper through the COMPAS FAB framework. Because Grasshopper is built on a single-shot dataflow model, ad hoc mechanisms had to be written to handle persistent state and live feedback. The system builds on the initial live-control demos developed by Gramazio Kohler Research for COMPAS FAB.

Ultimately, each Grasshopper solve becomes one tick of a finite state machine, whose states cycle through grabbing a new goal position, executing the move, executing external tool commands at that position, and waiting for new commands. To store this state and to run the state machine both efficiently and controllably, the system relies heavily on GHPython’s persistent scriptcontext features, together with more explicit control than usual over downstream expiry, so that components execute in the correct order.
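As a rough illustration of the tick-per-solve idea, the sketch below advances a small state machine by one step per call. It is not the project's code: the state names, the `tick` function, and its arguments are hypothetical, and a plain dict stands in for the persistent dictionary that GHPython exposes as `scriptcontext.sticky`.

```python
# Hypothetical stand-in for scriptcontext.sticky, the dictionary that
# persists between Grasshopper solves. Outside Rhino a plain dict behaves the same.
sticky = {}


def tick(sticky, goals, robot_idle, tool_done):
    """Advance the state machine by one step (one Grasshopper solve).

    goals      -- list of queued goal positions (any objects)
    robot_idle -- True once the robot reports the current move is finished
    tool_done  -- True once the external tool command has finished
    """
    state = sticky.get("state", "WAITING")
    index = sticky.get("index", 0)

    if state == "WAITING":
        # Grab the next goal if one is queued, otherwise keep waiting.
        if index < len(goals):
            state = "MOVING"
    elif state == "MOVING":
        # Stay here until the robot reports that it reached the goal.
        if robot_idle:
            state = "TOOL"
    elif state == "TOOL":
        # Run the tool command (grip, release, capture) at this position.
        if tool_done:
            index += 1
            state = "WAITING"

    # Write the state back so the next solve picks up where this one ended.
    sticky["state"] = state
    sticky["index"] = index
    return state
```

Because the only memory is the dictionary, each solve can stay stateless in Grasshopper's eyes while the sequence as a whole still progresses. In a real definition, something would also have to trigger the next solve, which is where the explicit expiry control comes in.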

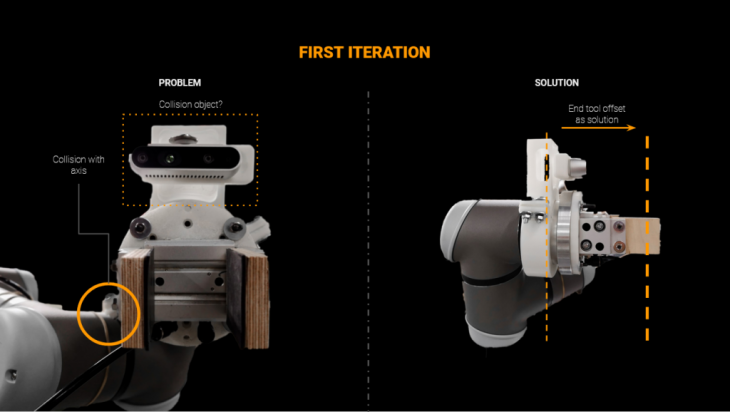

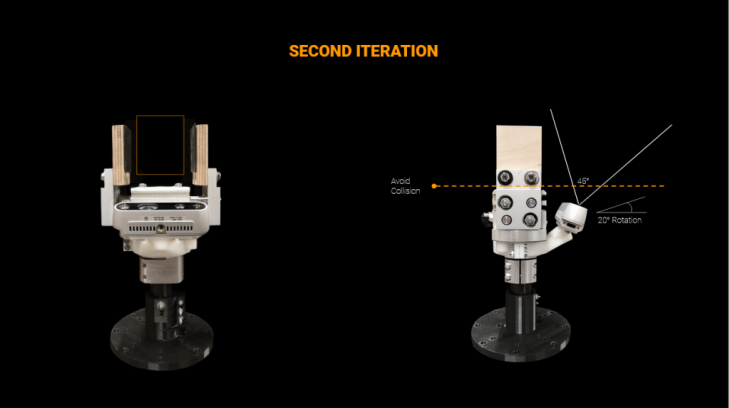

Physically, both tools (the gripper and the depth camera) are mounted on the robot flange. They are laid out to avoid collisions between picked elements and the camera, and to keep the gripper fingers out of the camera’s field of view. This also allows basic camera calibration to be read directly from the CAD model. The table setup uses 3D-printed holders for the timber pieces, printed with embedded magnets so that they can be re-oriented yet remain stable on the work surface.

During the scanning phase, each element must be scanned twice, as only its top half is visible at a time in the holders. Between the two scans, the robot performs two 90-degree rotations on the element.

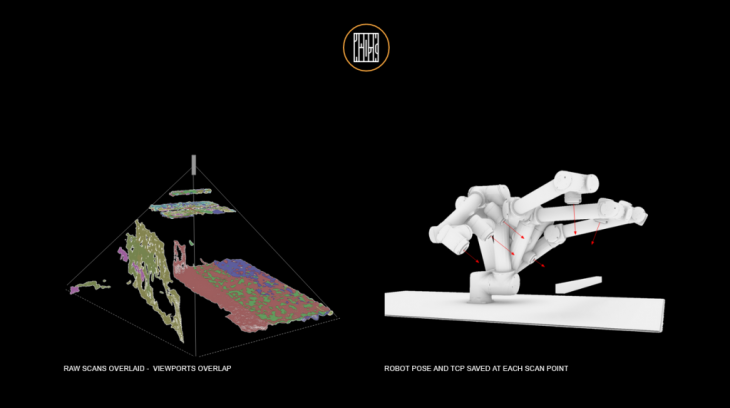

The scan of each side consists of an arbitrary number of captures of the RealSense RGBD data. Generally these are taken along a line above the piece, although any set of positions can be used. Whenever a new scan position is reached, the system also stores the current plane (position and orientation) of the tool.

When all captures are finished, each cloud is re-oriented from the world origin onto the tool plane stored for that capture, which automatically registers the points into real space.
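The registration step amounts to a change of basis for each capture. A minimal sketch follows, assuming each stored plane is given as an origin plus three orthonormal axis vectors and already describes the camera pose, with the camera-to-flange offset applied. The function names and the tuple layout are illustrative, not taken from the project.

```python
def register_points(points, plane):
    """Map points from a capture's local frame into the workcell frame.

    points -- iterable of (x, y, z) tuples in the capture's local frame
    plane  -- (origin, xaxis, yaxis, zaxis), each a 3-tuple; the axes are
              assumed to be orthonormal
    """
    origin, xaxis, yaxis, zaxis = plane
    registered = []
    for px, py, pz in points:
        # A local point is a weighted sum of the plane axes, offset by the origin.
        registered.append(tuple(
            origin[i] + px * xaxis[i] + py * yaxis[i] + pz * zaxis[i]
            for i in range(3)
        ))
    return registered


def merge_captures(captures):
    """Register every (points, plane) pair and concatenate the results."""
    merged = []
    for points, plane in captures:
        merged.extend(register_points(points, plane))
    return merged
```

Since the robot reports where the tool was for each capture, no feature matching between clouds is needed. The quality of the merged cloud then depends on the robot's positional accuracy and on the camera calibration.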

Although the system was developed with the RealSense camera, initial results showed that its accuracy and resolution were less than ideal, so additional RGBD camera models will be tested for practicality in this system. Additionally, photogrammetry has been tested as an alternative method of obtaining point clouds, though it has not yet been fully integrated with the real-time setup.

Analysis

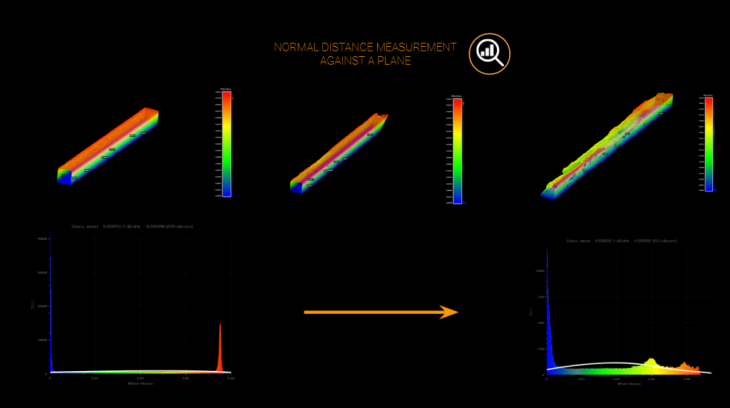

Once a piece is scanned, its captured data is put through several analysis steps. First, we perform a geometric surface quality analysis on the point cloud. With the cloud lying on its ‘side’, the distance from each point to a flat ground plane is measured, and the distances are collected in a histogram. For an ideal piece, the histogram should show strong spikes at either end, representing the top and bottom faces of the piece, with a constant level in between, representing the slices through the side faces. If the piece has surface damage, however, the histogram shows higher counts in the middle, and this excess can be measured.
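One way to turn that histogram into a number is sketched below, assuming the heights above the ground plane have already been computed. The bin count, the clamping of out-of-range values, and the `middle_fraction` score are illustrative choices, not the project's actual metric.

```python
def height_histogram(heights, bins, thickness):
    """Count points per height bin between 0 and the section thickness."""
    counts = [0] * bins
    for h in heights:
        i = int(h / thickness * bins)
        # Clamp so that noise just outside the section lands in an end bin.
        i = max(0, min(bins - 1, i))
        counts[i] += 1
    return counts


def middle_fraction(counts, end_bins=1):
    """Share of all points that fall outside the top and bottom end bins."""
    total = sum(counts)
    if total == 0:
        return 0.0
    middle = sum(counts[end_bins:len(counts) - end_bins])
    return middle / total
```

An undamaged piece still has a nonzero middle fraction, because the side faces contribute points at every height. The useful signal is how far a piece's value rises above that baseline.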

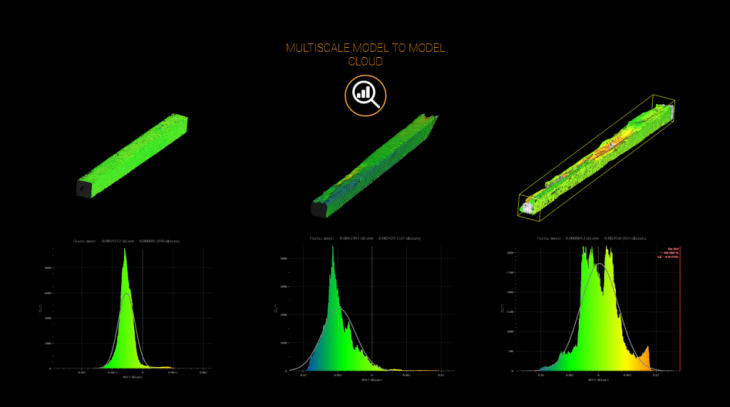

To account for the element’s natural texturing and to even out results across a site’s materials, each piece is also compared to a manually selected ‘best quality’ piece from the scans. Here, point-to-point distances are measured from the cloud to the best-piece cloud, generating another, relative histogram of those distances.
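The comparison against the reference piece can be sketched as a nearest-neighbour distance query followed by binning. This version assumes the two clouds are already aligned in the same frame and uses a brute-force search for clarity. A spatial index such as a k-d tree would be the practical choice for full-size scans. The function names and bin parameters are hypothetical.

```python
import math


def nearest_distances(cloud, reference):
    """For each point in cloud, distance to the closest point in reference."""
    return [min(math.dist(p, q) for q in reference) for p in cloud]


def relative_histogram(distances, bin_width, bins):
    """Bin the distances; anything past the last bin edge goes in the last bin."""
    counts = [0] * bins
    for d in distances:
        counts[min(bins - 1, int(d / bin_width))] += 1
    return counts
```

A piece that closely matches the reference concentrates its counts in the first bins, while missing or damaged material pushes counts toward the larger distances.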

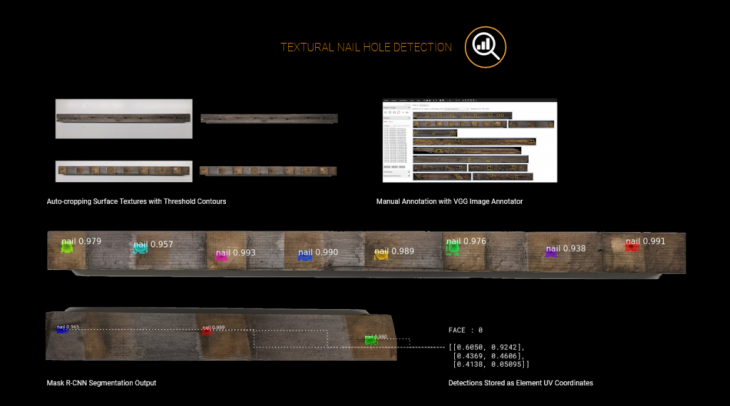

Additionally, we perform visual analysis on the image textures extracted from each face. The first analysis finds knots, nail holes, and other defects left by connective hardware. We use a Mask R-CNN model to localize each defect and extract its UV position, which is stored alongside the other analysis data.
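To make "UV position" concrete, the sketch below converts pixel-space bounding boxes into normalized texture coordinates. The detection format (a dict with `label`, `score`, and `box`), the score threshold, and the top-left image origin are all assumptions for illustration. The project's actual detector output may be structured differently.

```python
def box_to_uv(box, image_width, image_height):
    """Return the box centre as (u, v) in the range 0..1.

    box is (x_min, y_min, x_max, y_max) in pixels, with the origin assumed
    to be the top-left corner of the face texture.
    """
    x_min, y_min, x_max, y_max = box
    u = (x_min + x_max) / 2.0 / image_width
    v = (y_min + y_max) / 2.0 / image_height
    return (u, v)


def defects_to_uv(detections, image_width, image_height, min_score=0.5):
    """Keep confident detections and attach a UV position to each."""
    results = []
    for det in detections:
        if det["score"] < min_score:
            continue  # Drop low-confidence detections.
        results.append({
            "label": det["label"],
            "uv": box_to_uv(det["box"], image_width, image_height),
        })
    return results
```

Storing positions in UV rather than pixels keeps the record independent of the texture resolution, so it can be mapped back onto the face at any scale.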

Finally, as each piece is picked up and moved into position, we can also measure its mass using the UR10’s torque feedback. This measurement is combined with the measured volume and the average density for the species to estimate decay in the piece.
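The arithmetic behind this estimate is a density comparison. A minimal sketch, assuming a rectangular piece and a reference density supplied by the caller. The function name is hypothetical, and no particular reference value for the species is implied here.

```python
def density_ratio(mass_kg, length_m, width_m, height_m, reference_density):
    """Measured density divided by the reference density for the species.

    reference_density is in kg/m^3 and must be supplied by the caller.
    A ratio well below 1.0 suggests lost material or decay.
    """
    volume = length_m * width_m * height_m
    if volume <= 0:
        raise ValueError("dimensions must be positive")
    return (mass_kg / volume) / reference_density
```

For example, a 1 m piece with a 45 mm square section has a volume of 0.002025 m^3, so a measured mass of 0.81 kg gives 400 kg/m^3. Against a reference of 500 kg/m^3, the ratio is 0.8. Moisture content also shifts density, so the ratio is best read as an indicator rather than a direct measure of decay.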

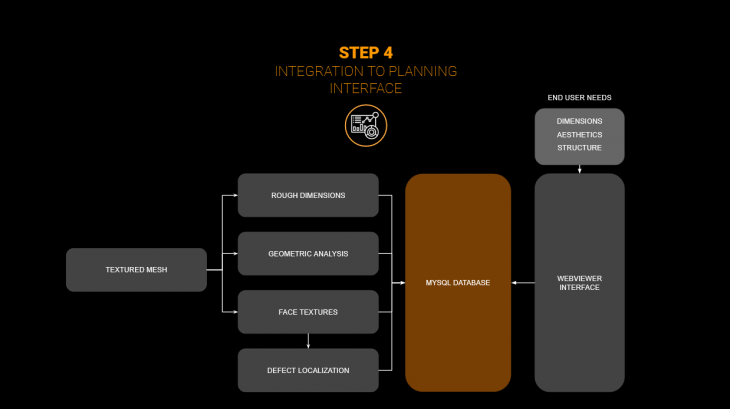

Data

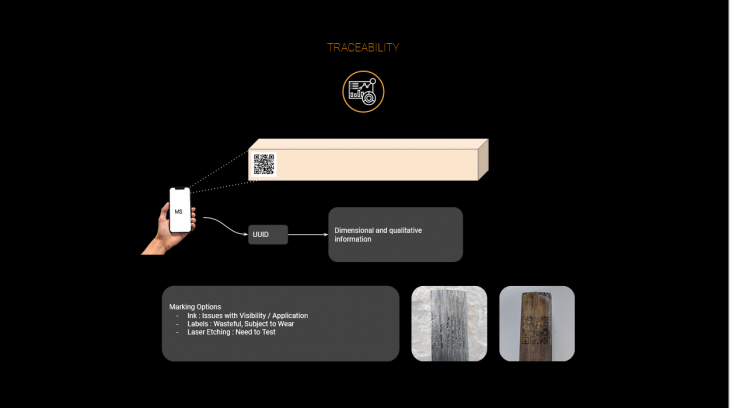

To compile this information for the end user, all of the extracted values are stored in a MySQL database, with each analyzed piece assigned a UUID. QR codes were chosen as a simple way to carry this ID on each piece. For the moment, printed tags have proven the most reliable method of application, as direct ink printing and laser etching were too easily disrupted by the natural grain of the wood.
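The storage pattern can be sketched with Python's standard library. Note the substitutions: an in-memory SQLite database stands in for the MySQL server so the example runs anywhere, and the table name and columns are hypothetical, not the project's schema.

```python
import sqlite3
import uuid


def create_store():
    """Open an in-memory database with one row per analyzed piece."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE pieces ("
        "id TEXT PRIMARY KEY, length_mm REAL, mass_kg REAL, defect_count INTEGER)"
    )
    return conn


def add_piece(conn, length_mm, mass_kg, defect_count):
    """Insert a piece and return the UUID string to encode in its QR tag."""
    piece_id = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO pieces VALUES (?, ?, ?, ?)",
        (piece_id, length_mm, mass_kg, defect_count),
    )
    conn.commit()
    return piece_id


def get_piece(conn, piece_id):
    """Look a piece up by the ID read back from its tag; None if unknown."""
    return conn.execute(
        "SELECT length_mm, mass_kg, defect_count FROM pieces WHERE id = ?",
        (piece_id,),
    ).fetchone()


def find_pieces(conn, min_length_mm, max_defects):
    """Return the IDs of pieces that meet simple length and defect limits."""
    rows = conn.execute(
        "SELECT id FROM pieces WHERE length_mm >= ? AND defect_count <= ? "
        "ORDER BY length_mm",
        (min_length_mm, max_defects),
    ).fetchall()
    return [row[0] for row in rows]
```

The tag only needs to carry the 36-character ID. Everything else about the piece stays in the database and can be updated without reprinting the tag.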

A database viewer website was also written as a mockup of how an end user might interact with this data. The viewer allows searching and filtering by all the major data points stored per piece, and displays each piece’s surface texture and defect locations.

MatterSite is a project of IAAC, Institute for Advanced Architecture of Catalonia, developed in the Master in Robotics and Advanced Construction 2019/21 by:

Students: Matt Gordon and Roberto Vargas Calvo

Faculty: Raimund Krenmueller, Angel Muñoz, Soroush Garivani