“We seek to escape the dark cave of a despondent mind by either dulling oneself mentally or through imaginative acts. One form of escapism is daydreaming.”

- Kilroy J. Oldster, Dead Toad Scrolls

ABSTRACT

Of all the definitions of reality that Westerhoff (2011) considers, one stands out: “reality is what appears to the senses”. However, if we hold to this idea, the hallucinations in a mirage would count as real, because they exist for our senses, even though we know that they are not real at all. So, what is reality?

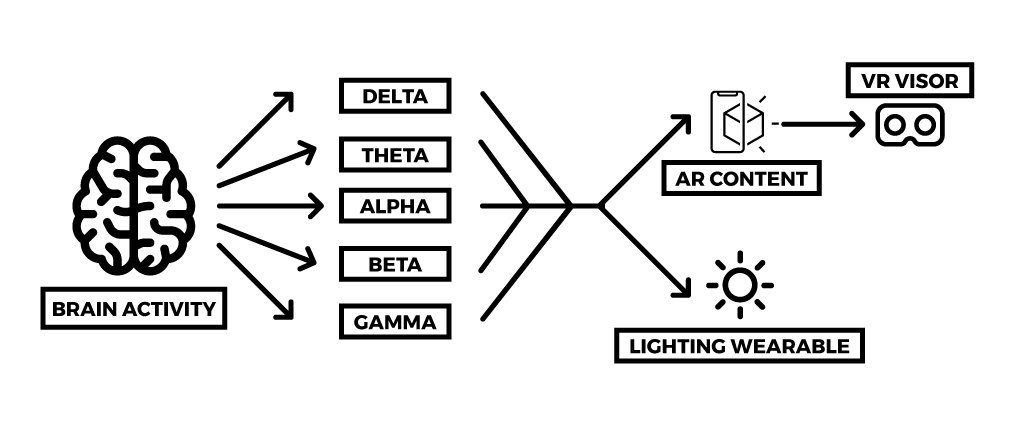

This project attempts to build a new perception of reality, proposing that the world around us be constructed as a direct response to our brain waves, which can be read as the emotions we are experiencing. Using an EEG headset as the sensor, the user sees custom digital content based on the data recorded by this sensor. This content is placed in the real world using Augmented Reality as the visualization platform.

Daydreamers will be a new platform to interact with the world through your emotions.

Westerhoff, J. (2011). Reality: A Very Short Introduction. Oxford University Press.

PERSONA AND CONCEPT

In this project, Bio-Media emerges, and with it the opportunity to turn people into artists and prosumers of their own bio-signals: in this case, brain waves that are used to modify and create digital content in the real world using Mixed Reality techniques and SLAM technologies.

The persona is a fictional race called “Sensatorians”, who lost the ability to express and understand emotions. With the Daydreamers headset, they can regain this capacity by seeing their own thoughts in the real world and interacting with them.

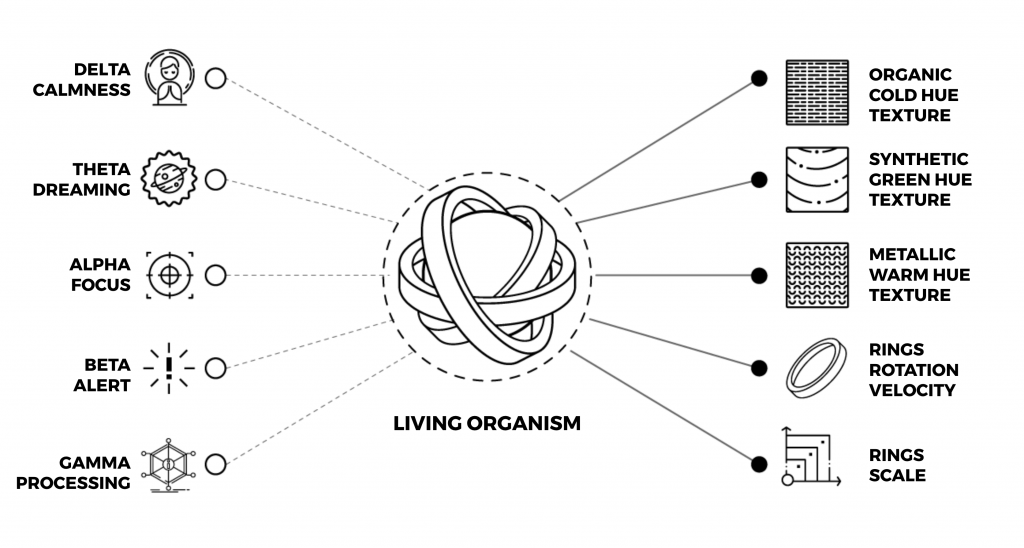

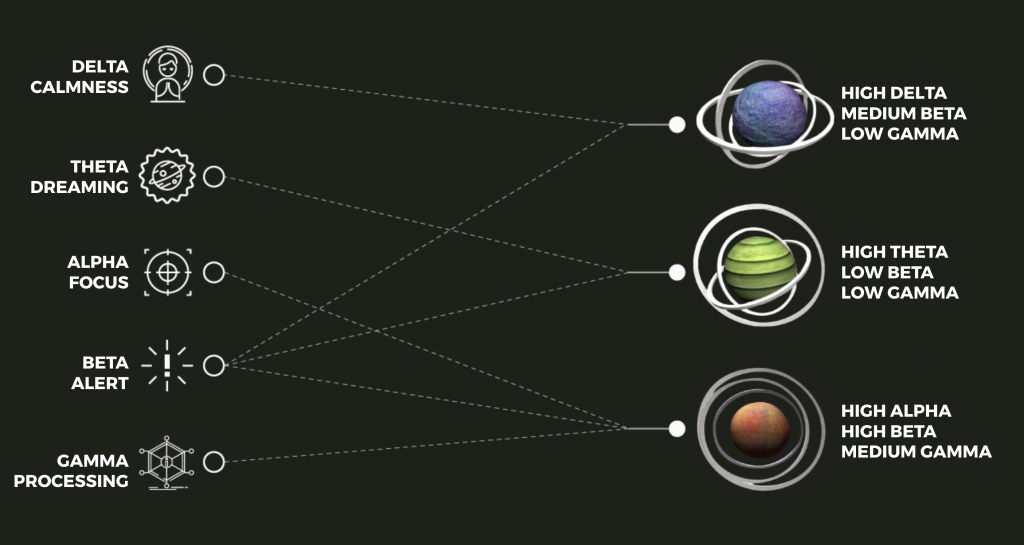

LEARNING THE TOOLS

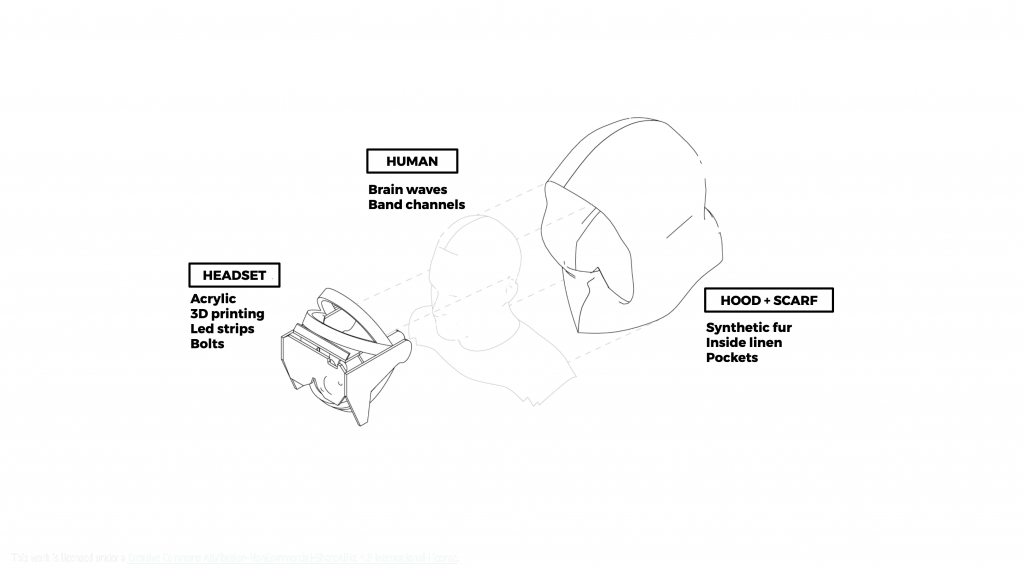

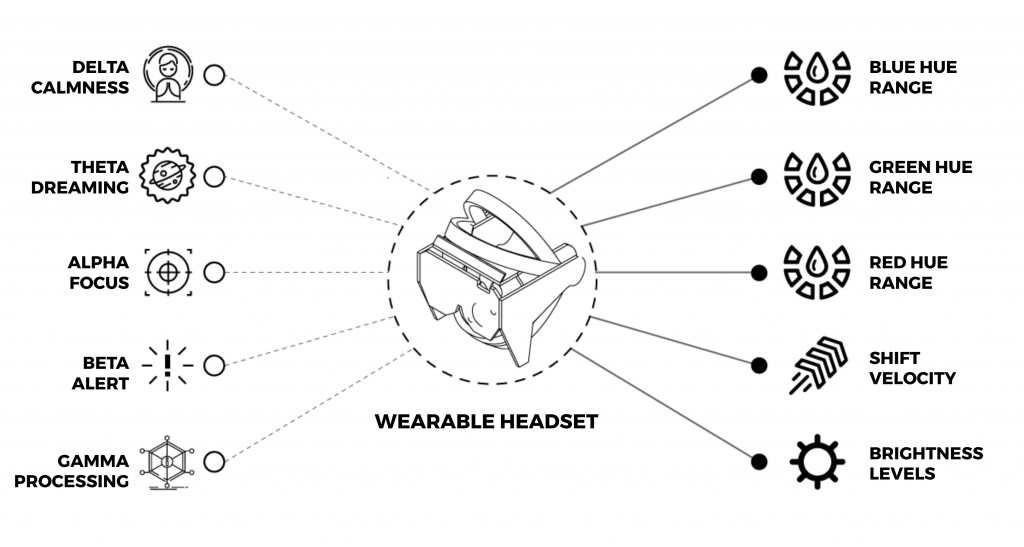

Brain waves are the sense that will be augmented and visualized using the platform. The perception of the world (sight) is also enhanced using Augmented Reality. Brain waves are divided into 5 classes: Delta (0.5 Hz – 3 Hz), Theta (3 Hz – 8 Hz), Alpha (8 Hz – 12 Hz), Beta (12 Hz – 38 Hz) and Gamma (38 Hz – 42 Hz). These waves indicate the user's level of brain activity, which can give an understanding of how he feels about a situation or in a given context.

With this data, the main idea is to show augmented reality content that is transformed according to the brain wave feedback. For example, if the user is in a calm state of mind, the objects placed in his surroundings will have a tree-like shape that is transformed (scaled, twisted, deformed, recolored) according to the user's feedback. Brain activity is measured through the band channels that the Muse headset provides, and each channel modifies specific characteristics of the content that the user sees and interacts with. The real-time data gathered from the EEG sensor is parsed using Unity, which provides a mobile app that handles all the required events for the AR content and also for the LED lights in the wearable, which change their color and pattern according to the data recorded from the brain waves.
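As a rough illustration of the band ranges and the band-to-content idea described above, here is a minimal Python sketch. It is not the project's Unity code. The function names, the decision to treat each upper limit as exclusive, and the linear mapping from a relative band power (assumed to be normalized between 0 and 1) to a scale factor are all assumptions made for this example.

```python
from typing import Optional

# Band limits in Hz, as listed in the text above.
BANDS = [
    ("delta", 0.5, 3.0),
    ("theta", 3.0, 8.0),
    ("alpha", 8.0, 12.0),
    ("beta", 12.0, 38.0),
    ("gamma", 38.0, 42.0),
]


def band_for_frequency(freq_hz: float) -> Optional[str]:
    """Return the band name for a frequency, or None if it is out of range.

    Assumption: the lower limit is inclusive and the upper limit exclusive,
    so a shared boundary such as 3 Hz falls into the higher band (theta).
    """
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return None


def scale_from_band_power(relative_power: float,
                          min_scale: float = 0.5,
                          max_scale: float = 2.0) -> float:
    """Map a relative band power to an object scale factor.

    Hypothetical mapping: the input is clamped to [0, 1] and interpolated
    linearly between min_scale and max_scale.
    """
    clamped = max(0.0, min(1.0, relative_power))
    return min_scale + clamped * (max_scale - min_scale)


if __name__ == "__main__":
    print(band_for_frequency(10.0))      # a 10 Hz component lies in the alpha band
    print(scale_from_band_power(0.25))   # a low alpha reading gives a small scale
```

The same pattern could drive other properties mentioned in the text, such as twist, deformation or color, by swapping the output range.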

CLAIMS

Claim #1: The Day Dreams prototype will allow me to see a representation of my own brain waves in Augmented Reality

Škola, F. (2016). Examining the effect of body ownership in immersive virtual and augmented reality environments. Vis Comput (2016) 32:761–770. Retrieved from:

https://www.fi.muni.cz/~liarokap/publications/VisualComputer2016.pdf

This reference gives insight into how the body can interpret the new realities, such as VR and AR, as part of itself and understand this content as something that belongs to it. The research also supports data validation using an EEG sensor recorder.

Coogan, C., He, B. (2018). “Brain-Computer Interface Control in a Virtual Reality Environment and Applications for the Internet of Things.” IEEE Access, vol. 6, pp. 10840-10849. Retrieved from:

https://jitectechnologies.in/wp-content/uploads/2018/12/IOT12.pdf

This research supports the benefits of creating a BCI that can control digital and physical content, which gives the user autonomy in the interactions needed to perform an action. Even though the digital context is a virtual reality world, the research offers good insight into the mixture of the physical and digital worlds.

Molina, G. G., Tsoneva, T., Nijholt, A. (2009). “Emotional brain-computer interfaces.” 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, 2009, pp. 1-9. Retrieved from:

https://core.ac.uk/download/pdf/11472551.pdf

This research supports theories about how the emotions and brain activity of a user can be represented, in order to test the correlation between what the user sees and how he interprets this content.

Claim #2: The Day Dreams prototype will allow any user to see different content based on their brain waves

Valencia, C., Micolta, J. (2015). Interacciones emocionales entre un videojuego y su videojugador. Cali: Universidad Icesi. Retrieved from:

https://repository.icesi.edu.co/biblioteca_digital/bitstream/10906/79266/1/valencia_interacciones_emocionales_2015.pdf

This research gives insight into how to create content that evolves with the user’s brain waves. By creating an ever-changing video game environment, the paper portrays the deep interaction that occurs when a user sees content that belongs to his emotional state.

Daly, I. (2008). Towards natural human computer interaction in BCI. The Society for the Study of Artificial Intelligence and Simulation of Behaviour. Retrieved from:

https://www.researchgate.net/publication/220049307_Towards_natural_human_computer_interaction_in_BCI

This research supports the analysis of the pragmatic and psychological consequences of BCIs and how they can affect the future of our interactions. These theories help to understand that visualizing content created from brain waves has an impact on the user and also on the society that surrounds him.

E. Muñoz, E. R. Gouveia, M. S. Cameirão and S. Bermudez I. Badia. (2016). The Biocybernetic Loop Engine: An Integrated Tool for Creating Physiologically Adaptive Videogames. Madeira Interactive Technologies Institute, Funchal, Portugal; Universidade da Madeira, Funchal, Portugal. Retrieved from:

http://www.scitepress.org/Papers/2017/64298/64298.pdf

This research supports the benefits of using BCI to create deeper user experiences. Taking video games as a reference, the research proposes a loop in which the user's interactions lead to a change of content inside the video game, which in turn creates feedback that generates new input in the brain waves, forming a looping emotional system.

Claim #3: The Day Dreams prototype will change the user’s brain activity in real time

Tarrant, J., Viczko, J., Cope, H. (2018). Virtual Reality for Anxiety Reduction Demonstrated by Quantitative EEG: A Pilot Study. Frontiers in Psychology. Retrieved from:

https://www.frontiersin.org/articles/10.3389/fpsyg.2018.01280/full

This research supports the effectiveness of using new realities to change a given group of brain waves in a user. Through in-depth research on how to reduce anxiety, it helps to understand what changes occur in the mind of a user during a digital experience.

Aftanas, L., Pavlov, S., Reva, N., Varlamov, A. (2003). Trait anxiety impact on the EEG theta band power changes during appraisal of threatening and pleasant visual stimuli. International journal of psychophysiology : official journal of the International Organization of Psychophysiology. 50. 205-12. 10.1016/S0167-8760(03)00156-9. Retrieved from:

https://www.researchgate.net/publication/9035458_Trait_anxiety_impact_on_the_EEG_theta_band_power_changes_during_appraisal_of_threatening_and_pleasant_visual_stimuli

This research provides information about which brain wave bands are altered by a given visual input to the user. The paper validates a change, mainly in the alpha and theta bands, when a pleasant visual input is given to the user, which helps to show that the user’s brain waves change according to what he is seeing.

Gruber, T., Müller, M., Keil, A., Elbert, T. (1999). Selective visual-spatial attention alters induced gamma band responses in the human EEG, Clinical Neurophysiology, Volume 110, Issue 12, Pages 2074-2085, ISSN 1388-2457. Retrieved from:

https://eurekamag.com/pdf/011/011338102.pdf

This research validates that brain waves change depending on the spatial attention of the user. As the prototype proposes an alteration of the user’s physical space, the paper helps to demonstrate which brain waves change when the user’s space is altered and when he is focused on it.

Claim #4: The Day Dreams prototype will serve as an artistic light representation of the user’s brain activity

Claim #5: The Day Dreams prototype will create empathy when another person uses the EEG headband

W.O. A.S. Wan Ismail, M. Hanif, S. B. Mohamed, Noraini Hamzah, Zairi Ismael Rizman (2016). Human Emotion Detection via Brain Waves Study by Using Electroencephalogram (EEG). International Journal on Advanced Science, Engineering and Information Technology. Retrieved from:

http://insightsociety.org/ojaseit/index.php/ijaseit/article/view/1072/pdf_297

This research supports the use of EEG as a method to identify emotions that are not visible just by looking at someone’s face. Using AI techniques, the study aims to detect human emotions by reading the brain wave activity of a user. The research also compares the analysis of the neurofeedback with the faces of the users to find the relation between the visible emotions and the invisible ones. The paper supports the accuracy of EEG sensors for understanding others’ emotions.

Myers, J. & Young, J. S. (2012). Brain wave biofeedback: Benefits of integrating neurofeedback in counseling. Journal of Counseling and Development, 90(1), 20-29. Retrieved from:

https://pdfs.semanticscholar.org/831f/c423f91a217c3573448a06df39ac0669f8a4.pdf

This research supports the use of an EEG sensor as a mechanism to help understand others’ consciousness and mental state. The EEG improves the counseling sessions of psychiatrists by letting them know how their patients behave in their deepest thoughts and emotions.

Neumann, D., Chan, R., Boyle, G., Wang, Y., Westbury, H. (2015). Chapter 10 – Measures of Empathy: Self-Report, Behavioral, and Neuroscientific Approaches, Editor(s): Gregory J. Boyle, Donald H. Saklofske, Gerald Matthews, Measures of Personality and Social Psychological Constructs, Academic Press, Pages 257-289. Retrieved from:

https://www.researchgate.net/publication/286221523_Measures_of_Empathy

This research supports the measurement of empathy levels by converting subjective questions into objective data that serves to validate the empathy level in a group of test subjects. The paper helps to understand and show that measuring and creating empathy is achievable using an EEG sensor.

FINAL ITERATION

Credits for the renders to Andres Valencia

Hypothesis

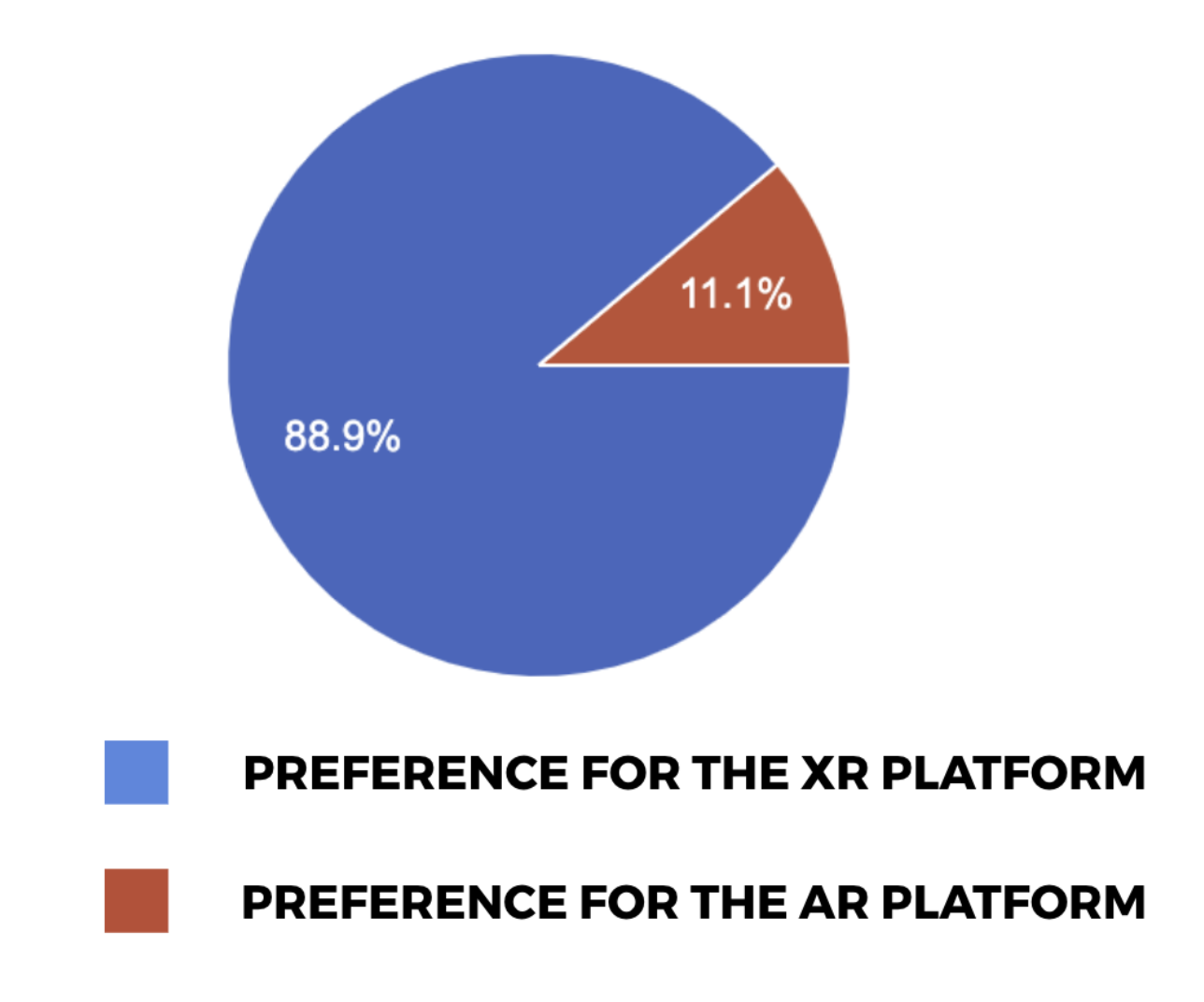

Hypothesis #1: An XR platform (AR mixed with VR) will provide a deeper degree of immersion than a normal AR platform. This should be testable by running user tests with different people, giving each of them a session of at least 2 minutes on both platforms. The order in which the platforms are presented should be balanced evenly across users.
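One simple way to keep the presentation order balanced is to alternate which platform each participant tries first. The Python sketch below is only an illustration of that idea. The write-up does not say how the order was actually assigned, and the function name and participant identifiers are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def counterbalanced_orders(participant_ids: Iterable[int],
                           platforms: Tuple[str, str] = ("AR", "XR")
                           ) -> Dict[int, Tuple[str, str]]:
    """Alternate the starting platform so both orders are used about equally."""
    first, second = platforms
    orders = {}
    for index, participant in enumerate(participant_ids):
        if index % 2 == 0:
            orders[participant] = (first, second)
        else:
            orders[participant] = (second, first)
    return orders


if __name__ == "__main__":
    # Hypothetical participant numbers 1 to 9.
    for participant, order in counterbalanced_orders(range(1, 10)).items():
        print(participant, "->", " then ".join(order))
```

With an odd number of participants the split cannot be perfectly even, so one order is used once more than the other.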

Conclusion: With data gathered from a total of 9 people, 88.9% of them (8 of 9) found the XR mode more immersive and interesting to use. This supports the hypothesis about the importance of creating a new augmented experience that lets the user feel he is in a completely different world.

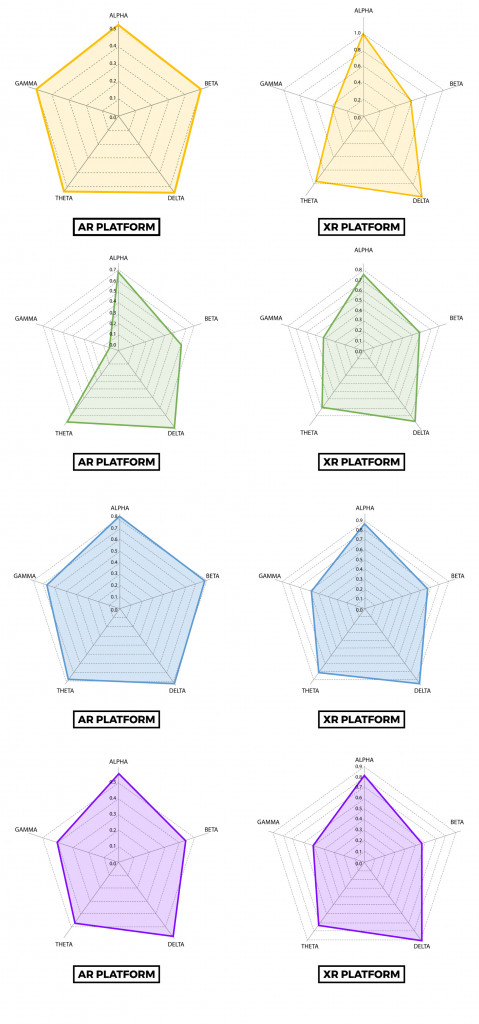

Hypothesis #2: When AR content is shown in the real world, the brainwaves of the user will produce different data even if he stays in the same environment. This should be testable by putting the user through a 2-minute session in the same location and sensing his brain waves both with and without the XR app. There should be 4 locations for the tests with different users: a calm indoor environment with the user alone, a calm outdoor environment, a noisy indoor environment, and a noisy outdoor environment.

Conclusion: With data gathered from a total of 9 people, the following graphs represent their brainwaves during a 1-minute session per user. The results indicate that their brainwaves do change according to the platform they are experiencing, even in the same physical space. However, the data is not completely reliable, because recording starts from the first connection with the Muse headband rather than from the starting point of the app, which may explain why the results look so homogeneous.

Hypothesis #3: When AR content is shown, the Alpha waves (…Alpha is ‘the power of now’, being here, in the present…) or the Beta waves (…Beta is a ‘fast’ activity, present when we are alert, attentive, engaged in problem solving, judgment, decision making, or focused mental activity.) should have a higher value than when the user is not seeing the AR content. This should be testable by putting the user through a 2-minute session in a controlled environment where he can interact with the content without any distraction, recording his brain waves both while he uses the content and while he does not.

Conclusion: With data gathered from a total of 9 people, the following graphs represent their brainwaves during a 1-minute session per user. The results indicate that the alpha waves show increased values when the user is in XR mode compared with the traditional AR mode. Even if this change is not really significant, it provides valuable data about how mental activity reacts when the user's senses are focused on a more immersive experience. The beta waves had a lower value in XR mode. Although this contradicts the hypothesis for the beta band, it suggests that users are in a more meditative and focused state when they experience the XR content.
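The reliability problem noted above, where recording begins at headband connection rather than at app start, could be reduced by trimming each recording to the session window before plotting. The following Python sketch assumes that every sample carries a timestamp and that the app start time is logged. Neither assumption is confirmed by the write-up, and the sample values are placeholders, not study data.

```python
from typing import List, Tuple

# A sample is (timestamp in seconds, band value). This layout is an assumption.
Sample = Tuple[float, float]


def trim_to_session(samples: List[Sample],
                    app_start: float,
                    duration: float) -> List[Sample]:
    """Keep only the samples recorded in [app_start, app_start + duration)."""
    session_end = app_start + duration
    return [(t, v) for t, v in samples if app_start <= t < session_end]


if __name__ == "__main__":
    # Placeholder recording: the headband connects at t=0, the app starts at t=20.
    recording = [(0.0, 0.4), (10.0, 0.5), (20.0, 0.6), (50.0, 0.7), (90.0, 0.3)]
    print(trim_to_session(recording, app_start=20.0, duration=60.0))
```

Only the samples that fall inside the session window are kept, so the time spent fitting and connecting the headband no longer affects the per-user graphs.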
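Because every participant tried both modes, one way to summarize the alpha and beta comparison is the mean of the per-user differences between XR and AR. The Python sketch below illustrates that calculation only. The function name is hypothetical and the numbers in the usage example are placeholders, not measurements from the study.

```python
from statistics import mean
from typing import Sequence


def mean_paired_difference(ar_values: Sequence[float],
                           xr_values: Sequence[float]) -> float:
    """Average of (XR - AR) per user; positive means higher values in XR."""
    if len(ar_values) != len(xr_values):
        raise ValueError("Each user needs one AR value and one XR value.")
    if not ar_values:
        raise ValueError("At least one user is required.")
    differences = [xr - ar for ar, xr in zip(ar_values, xr_values)]
    return mean(differences)


if __name__ == "__main__":
    # Placeholder per-user band averages, NOT data from the study.
    ar_alpha = [1.0, 2.0, 3.0]
    xr_alpha = [2.0, 2.0, 5.0]
    print(mean_paired_difference(ar_alpha, xr_alpha))
```

A positive result would correspond to the alpha finding described above and a negative result to the beta finding. With only 9 users, such a summary describes the sample rather than proving a general effect.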

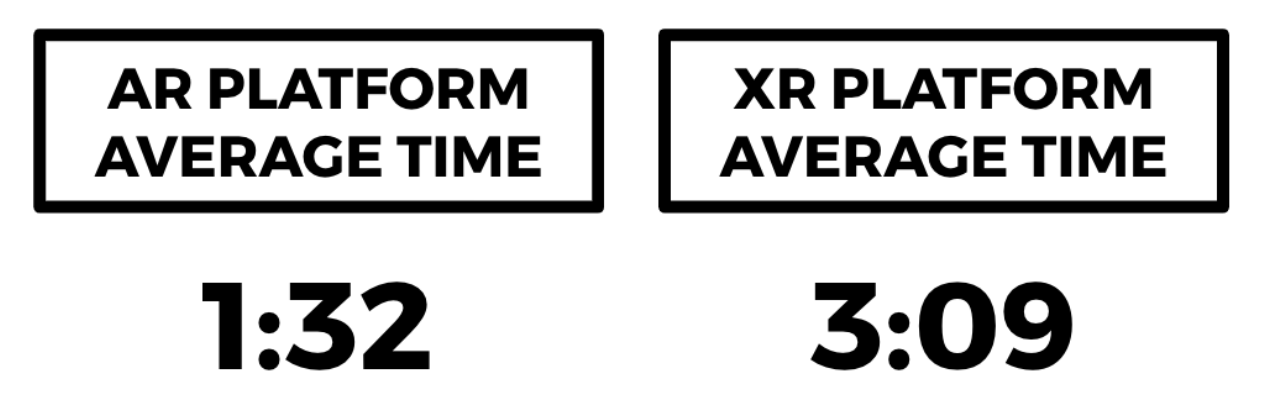

Hypothesis #4: An XR platform will increase the amount of time that the user spends on the platform compared with a normal AR platform. This should be testable by recording the time that different users spend seeing and interacting with the same content on different platforms.

Conclusion: With data gathered from a total of 9 people, all of the users spent more time using the XR platform than the traditional AR one. Some of them gave feedback on this, noting that they felt more captivated by the XR experience because it allowed them to explore the content as if it were part of real life.

SOCIETY ISSUES

Use of the platform as a judgment tool

Loss of privacy, as brain waves become a mechanism of communication

Perceptual immersion, which can lead the user to ignore real-world feedback

Alien being in society: because the wearable requires the user to always wear a headset on his head, society can feel uncomfortable about this

Project name: Daydreamers // Advanced Interaction Studio

Student: Kammil Steven Carranza Vivas

Developed at: Master in Advanced Architecture

Faculty: Elizabeth Bigger, Luis Fraguada

Music: “amb: delirium” by Le Gang – soundcloud.com/thisislegang

Special thanks: Gabriele Juriviciute (model) – Eliana Quiroga (patterns advisor)