AUTONOMOUS ROBOTIC PAINTING explores the use of autonomous navigation to detect damaged patches on walls and repair them by spraying paint. The robot explores an unfamiliar environment, mapping it and avoiding obstacles along the way, in order to isolate and recognise walls that need repair. Using its sensors, the rover manoeuvres a mounted paint gun to spray the damaged wall and leave a clean, finished surface.

References for the research include the industrial paint line used in the automotive industry and a research project at ELID-NTU, Singapore. One of these lacks autonomy because it lacks sensors, while the other, despite its autonomy, cannot navigate freely. The areas of intervention for our research were therefore:

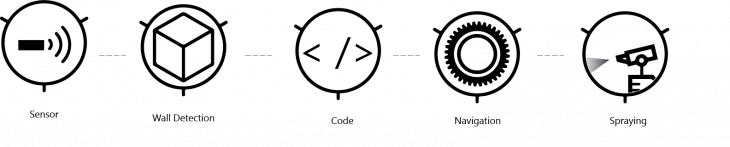

The working process relies on a 360° LiDAR for navigation and SLAM: the robot explores its environment while simultaneously building a map of the area. When the sensor picks up anomalies on the wall surfaces within its range, the patches are registered so that the robot can position itself and deposit paint on the damaged wall.
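As a rough illustration of the detection step, the sketch below fits a straight line to the LiDAR points that fall on a wall and flags the points that deviate from it. This is only one possible approach, not the project's actual method: it assumes that damaged patches show up as depth deviations in the scan, and the function names and the 1 cm threshold are hypothetical.

```python
import math

def polar_to_xy(angles_deg, ranges_m):
    """Convert LiDAR readings (angle in degrees, range in metres) to x/y points."""
    return [(r * math.cos(math.radians(a)), r * math.sin(math.radians(a)))
            for a, r in zip(angles_deg, ranges_m)]

def fit_line(points):
    """Total least-squares line fit. Returns the centroid and the unit normal."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    sxx = sum((p[0] - cx) ** 2 for p in points)
    syy = sum((p[1] - cy) ** 2 for p in points)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points)
    # Angle of the principal axis, i.e. the direction the wall runs in.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return cx, cy, -math.sin(theta), math.cos(theta)

def find_anomalies(points, threshold_m=0.01):
    """Return indices of points further than threshold_m from the fitted wall line.

    threshold_m is an assumed value; it would need tuning to the sensor noise.
    """
    cx, cy, nx, ny = fit_line(points)
    return [i for i, (x, y) in enumerate(points)
            if abs((x - cx) * nx + (y - cy) * ny) > threshold_m]

# Example: a flat wall 2 m away with a 3 cm dent at two neighbouring points.
wall = [(2.0, -0.5 + 0.05 * i) for i in range(21)]
wall[10] = (1.97, wall[10][1])
wall[11] = (1.97, wall[11][1])
dent_indices = find_anomalies(wall)
```

A plain least-squares fit is pulled slightly towards large defects, so a robust fit such as RANSAC may be a better choice on heavily damaged walls.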

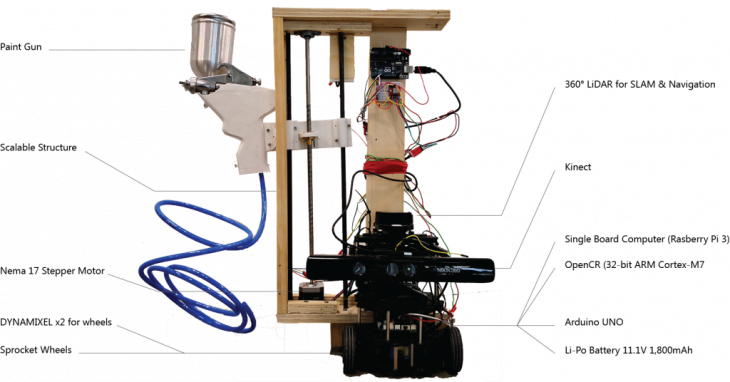

HARDWARE

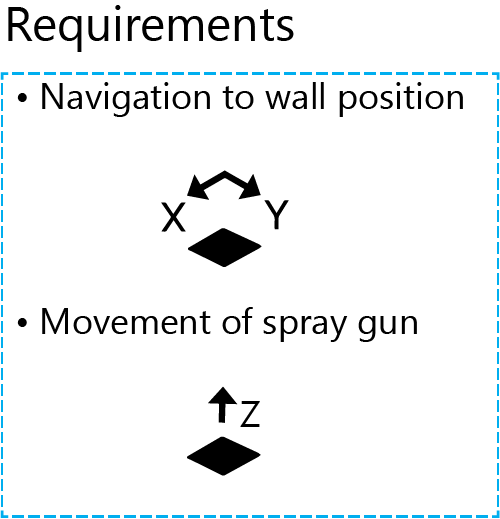

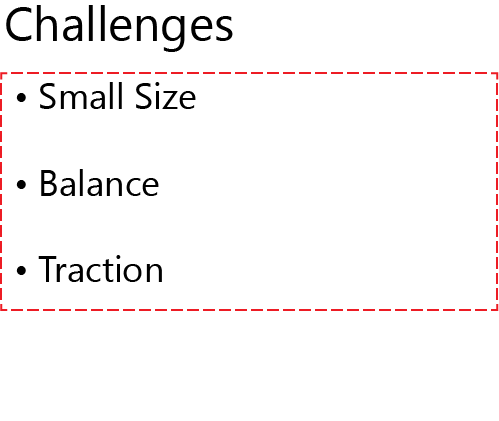

The initial build considered the following requirements and challenges:

Taking all of these requirements into account, the hardware build came down to the following:

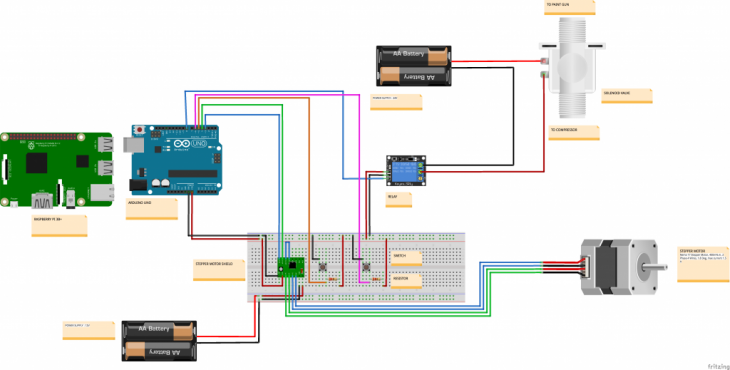

Electronic Schematic for the proposed Hardware

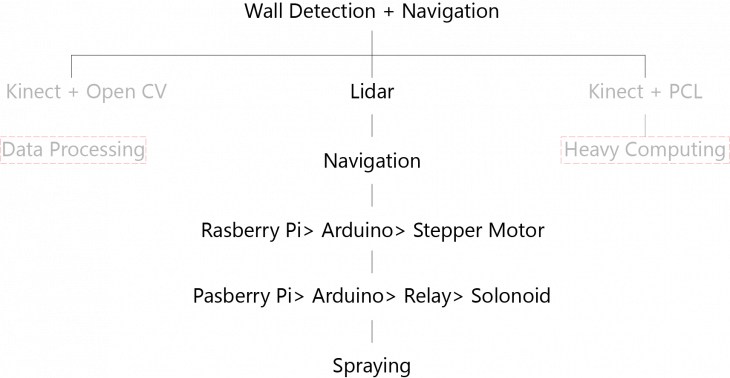

SOFTWARE AND FIRMWARE

In addition to navigating in any environment, the robot is programmed to detect unpainted patches on walls and then set a course that moves it horizontally into position. The vertical movement of the mounted spray gun is handled by a stepper motor, driven by sensor data passed through the Raspberry Pi and then the Arduino. To deposit paint accurately on the detected patches, a solenoid valve controls the release of paint, opening when the paint gun is correctly aligned with the horizontal and vertical coordinates read from the sensor. After spraying the unpainted patches, the robot continues exploring its environment for further damage on the walls. Detection currently stems from the LiDAR as the primary sensor, but a Kinect used with OpenCV or PCL presents further possibilities.
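The alignment logic described above could be sketched as follows. This is a minimal illustration under stated assumptions, not the project's firmware: the steps-per-millimetre value, the alignment tolerance, and the comma-separated message sent from the Raspberry Pi to the Arduino are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class SprayCommand:
    stepper_steps: int  # signed steps to move the spray gun vertically
    drive_mm: float     # signed horizontal distance the rover still has to travel
    valve_open: bool    # True only when the gun is aligned with the patch

def plan_spray(gun_x_mm, gun_z_mm, patch_x_mm, patch_z_mm,
               steps_per_mm=5.0, tolerance_mm=5.0):
    """Decide how to move and whether to open the solenoid valve.

    steps_per_mm and tolerance_mm are assumed values for illustration.
    """
    dx = patch_x_mm - gun_x_mm  # horizontal error, corrected by driving the rover
    dz = patch_z_mm - gun_z_mm  # vertical error, corrected by the stepper motor
    aligned = abs(dx) <= tolerance_mm and abs(dz) <= tolerance_mm
    return SprayCommand(stepper_steps=round(dz * steps_per_mm),
                        drive_mm=dx,
                        valve_open=aligned)

def encode_for_arduino(cmd):
    """Encode a command as 'steps,valve' followed by a newline.

    This wire format is hypothetical, not the project's actual protocol.
    """
    return f"{cmd.stepper_steps},{int(cmd.valve_open)}\n".encode("ascii")

# Far from the patch: keep the valve closed and move towards it.
approach = plan_spray(gun_x_mm=0, gun_z_mm=100, patch_x_mm=200, patch_z_mm=400)
# Within tolerance on both axes: open the valve.
on_target = plan_spray(gun_x_mm=200, gun_z_mm=400, patch_x_mm=202, patch_z_mm=398)
message = encode_for_arduino(on_target)
```

Keeping the valve decision on the Raspberry Pi side means the Arduino only has to execute step counts and switch the solenoid, which fits the sensor, Raspberry Pi, Arduino chain described in the text.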

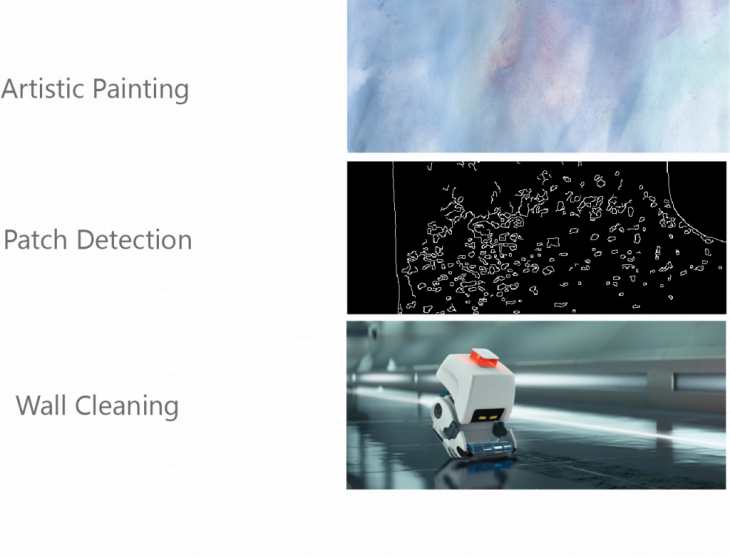

The same process could also be applied in:

Students: SUJAY KUMARJI | OMAR GENEIDY | APOORV VAISH | OWAZE ANSAR